At the core of the trust formula, the reliability, honesty, and competence of insurance planners are crucial for building trust. Yet how insurance companies can quantify these factors objectively remains a major challenge. By establishing Trust Scores and trust levels, companies can expect improvements in customer relations, sales performance, satisfaction, and competitiveness.

The purpose of this article is to present the basic reasoning behind, and my exploration of, “making trust AI-driven.” In a human–machine collaboration environment, this provides support for humans; in a machine-based environment, it serves as a guideline and a form of regulation for AI. This has been a fascinating learning journey together with GPT-4: a process that moved from nothing to something, through initial exploration, iterative dialogue, and joint construction, transforming abstract concepts into digital form and refining a thinking framework for solving complex problems. Although the conclusions here have reference value, I suggest readers focus more on the process: imagine innovative solutions, and consider how to collaborate with artificial intelligence by building new thinking frameworks to solve their own problems.

What is trust? It is a highly abstract concept, yet so close to our daily lives. Why do we need trust? No one would deny that all transactions are built on trust, especially in insurance. This is not only because insurance is an intangible product, but also because its transactions involve a time gap — unlike “cash on delivery.” Therefore, trust is particularly important for insurance.

Insurance relies on “sales,” primarily “face-to-face sales.” The current insurance market is still dominated by company agents. Even in multi-channel distribution, apart from pure online sales and telemarketing, the bancassurance and broker channels are also based mainly on “people + face-to-face interaction.” Today’s difficulties and transformation challenges in the insurance industry may be partly inevitable at this stage of its development, but technological trends still offer a glimmer of hope. Personally, I believe the primary problem in the insurance industry today lies on the sales side, with lack of trust as the core issue. Within the same thinking framework and system structure, relying on technological empowerment alone to rebuild consumer trust in agents and insurance companies is, frankly, difficult. We have previously shared many articles on the importance of building trust. With the advent of the AI era, as human–machine collaboration becomes the norm and machines gradually replace humans in many areas, how should we face this scenario? How should we “effectively manage” trust? This question has long been on my mind.

Therefore, how to make the abstract concept of trust more concrete — not limited to conceptual analysis and written description, but able to be digitalized, structured, and even AI-driven — is the focus of this article. Using insurance planning as the scenario, the views on trust and the final constructed “trust framework” in this text were developed through strategic, dialogue-based prompting with OpenAI’s GPT-4, combined with existing theories and logical reasoning. Although these views and results are based on current research and theory, their combination and application in different scenarios still carry originality and innovation. I encourage readers to think critically about these viewpoints, and to consider how they might apply to more diverse real-world situations. Furthermore, this highly experimental attempt does not fully cover all relevant knowledge and needs and still requires further exploration and validation by professionals.

This article includes two types of visual inserts to distinguish between human prompts and AI responses:

- Gray background with black text represents the prompts or instructions I provided to the AI.

- Blue background with blue text represents the AI’s responses.

These visual cues are designed to help readers follow the flow of interaction and better understand how the trust framework was co-developed through iterative dialogue.

To support this exploration, the article includes 12 visual inserts that document the process of building a trust framework through iterative dialogue with AI. These visuals are not standalone illustrations—they form a clear and progressive logic path for understanding how trust can be made AI-driven. From conceptual exploration to quantification, from system design to scenario-based application, each figure contributes to a modular and adaptable trust framework. Together, they demonstrate how AI can not only execute trust logic, but also participate in shaping, adjusting, and contextualizing it across different environments.

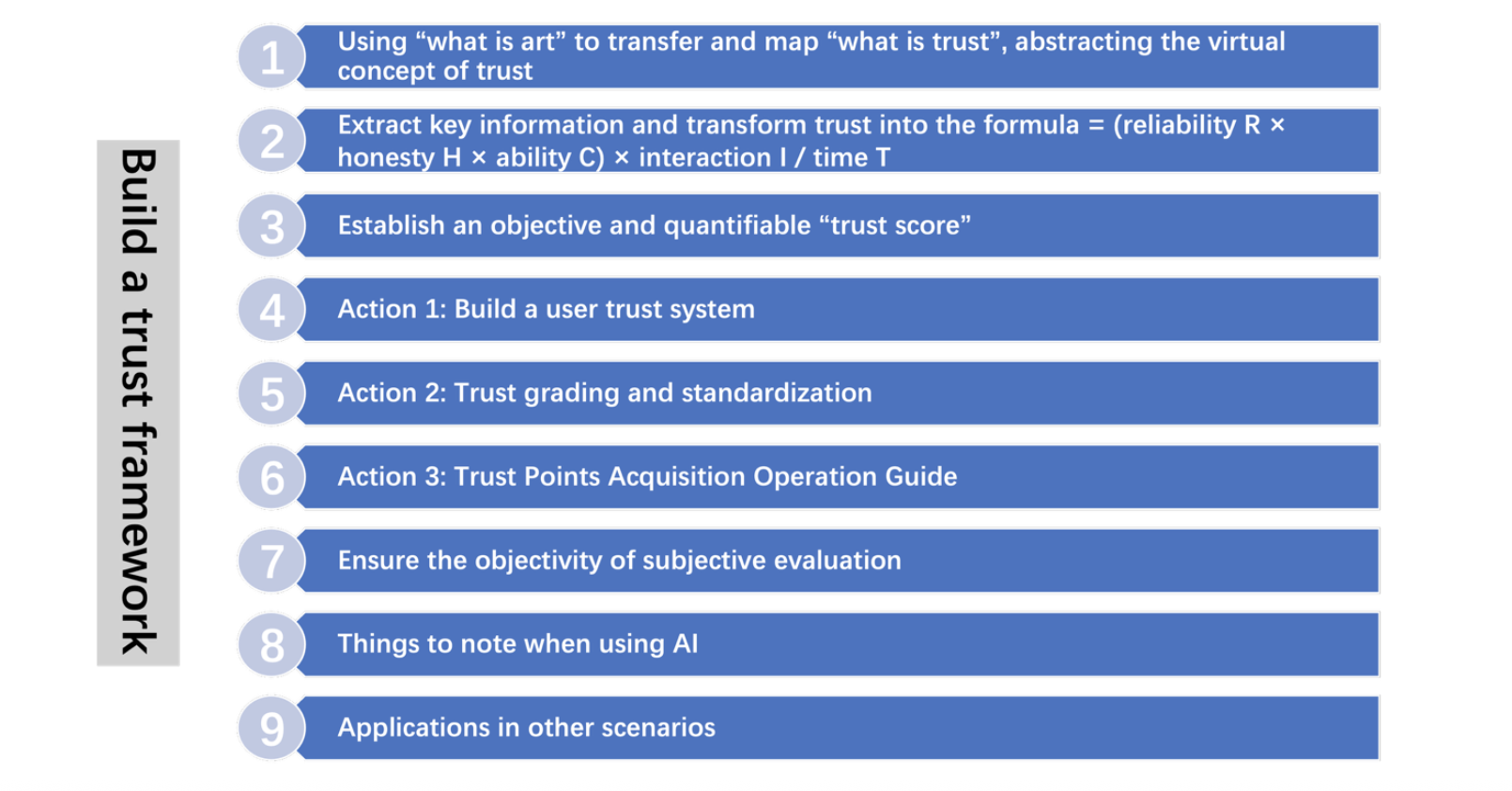

Below is an overview of the entire process of “building the trust framework.”

Figure 1. A Nine-Step Framework for Building Trust in AI Systems

As illustrated in Figure 1, the nine steps outline the process of constructing the entire trust framework.

I. Iterative Exploration Process

1.1 From vague to concrete

For a long time, our understanding of the question “How can trust be made AI-driven?” was vague, let alone any concrete answer; even today, the results cannot truly be called a final “answer.” Our knowledge of trust came only from theories and concepts found in books, along with subjective impressions shaped by personal life experience. There was no consistency in understanding, much less any generalization. Because trust is deeply entangled with human relationships and subjective perceptions, we once assumed that digitizing trust was an impossible task. Without realizing it, we had even taken for granted views such as: trust can only be described in words; trust must be built through process and details; trust cannot be quantified objectively.

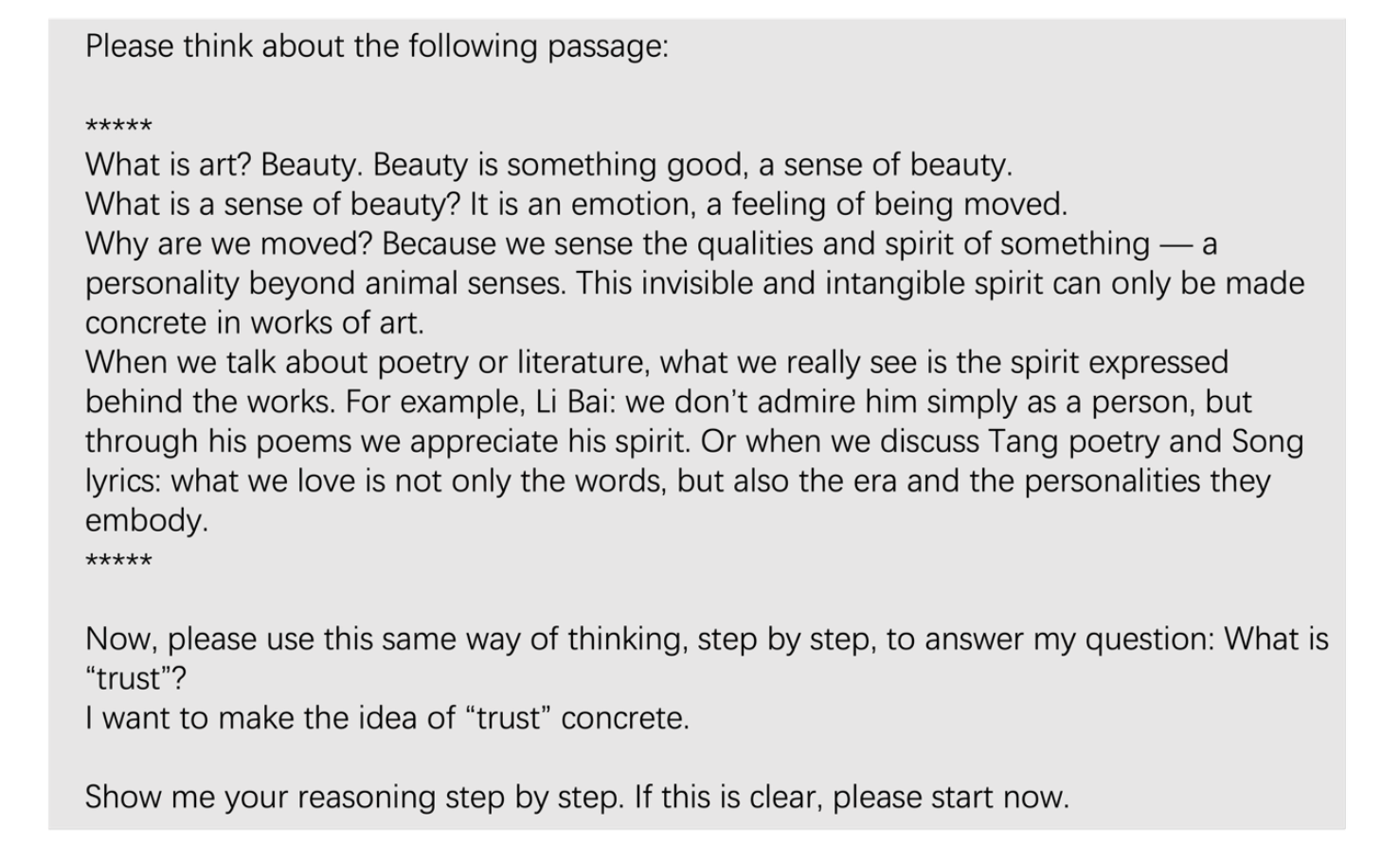

When I read Helen Keller’s description of how she understood colors (Note 1), my rigid thinking was jolted. Later, I happened to come across a written description of “beauty” and “art” (see the prompt illustration below). Suddenly, inspiration struck: could we transfer the problem and “use beauty to map the presentation of trust”? Perhaps this would allow AI to concretize the difficult, abstract concept of trust. Once trust was concretized, digitalizing and structuring it would be relatively easier.

As my dialogue with GPT-4 continued, the questions and answers gradually became more concrete and in-depth. It was an iterative process. Each round of dialogue built on the previous one, where human and machine together clarified and refined the problem, jointly exploring and constructing an understanding of the concept of “trust.” Views emerged, expanded, and improved in the process.

Looking back now, many of the prompting strategies introduced in the past, such as Chain of Thought (CoT), OPRO, generated knowledge prompting, and step-back prompting, together with advances in AI capability such as emotional intelligence, all helped turn seemingly impossible barriers into achievable goals.

1.2 Starting Point: Building a Thinking Framework

To obtain a concrete result for a virtual concept (such as trust), the process begins with abstraction, and abstraction starts from extracting the key information of the virtual concept.

This entire exploration journey began when I entered the following prompt of “from beauty to trust” (Note 2):

Figure 2. Prompt for Using the Concept of Beauty to Explore and Define Trust

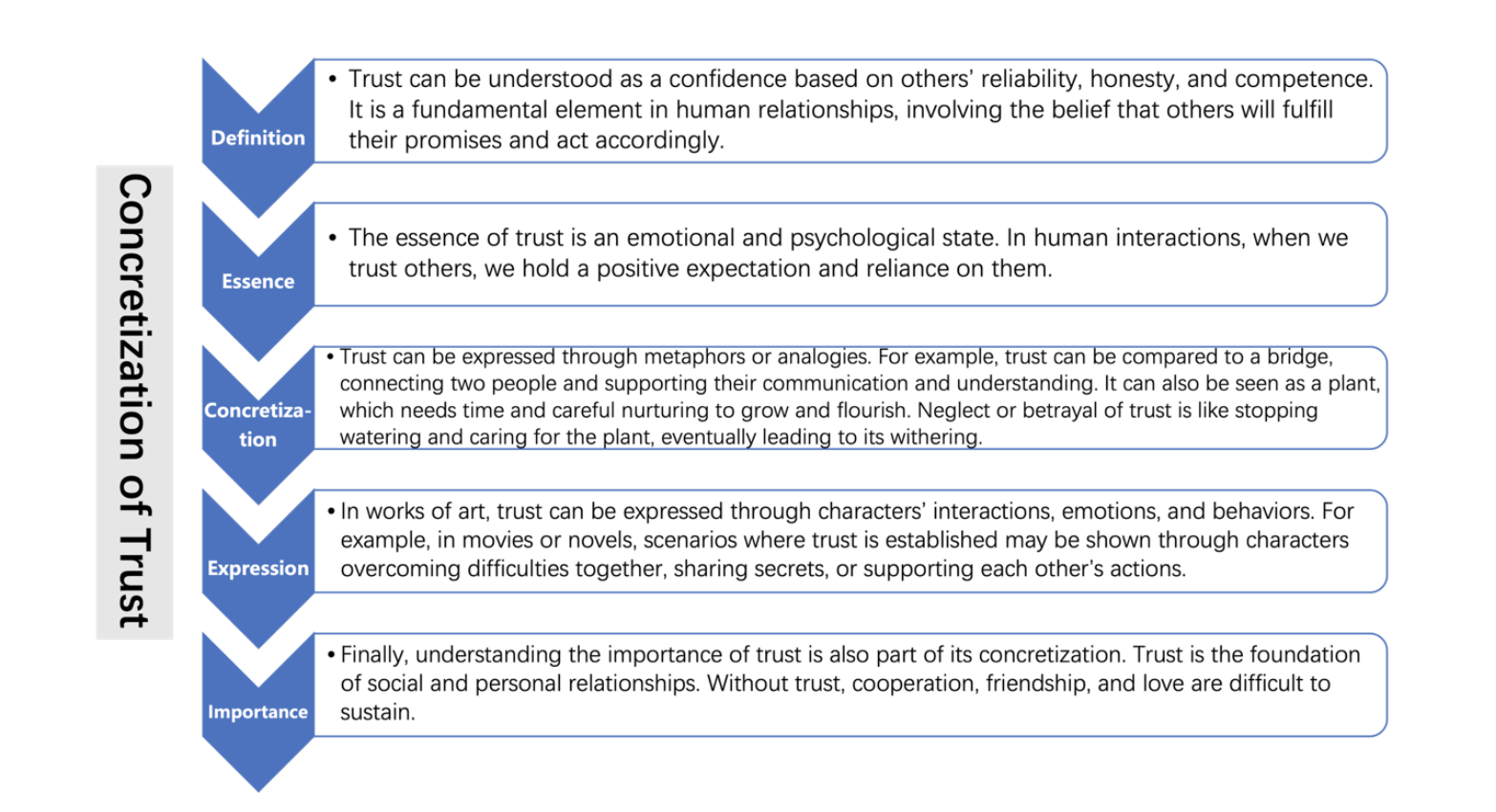

Given this question and the way of thinking I provided, GPT-4 demonstrated impressive reasoning ability in concretizing the concept of “trust.” Its process of “making trust concrete” was as follows:

Figure 3. Concretizing Trust Through Definition, Metaphor, and Social Relevance

The response above already surfaced some key information, including many candidate keywords for the elements of trust, such as reliability, honesty, competence, time, interaction, emotion, expectation, dependency, and scenario. Although the picture was not yet clear, I felt I had asked the right question!

Next, two things needed to be done with these keywords: condense them further into an abstract form, and then reason from that abstraction. When the experiment ended, I realized these two steps matched the “Step-Back Prompting” methodology I had just learned.

In handling the first step, my thinking was this: GPT-4 has a vast knowledge base, and letting it select the most essential keywords would likely be more objective than doing it myself. What I needed to do was tell it how to abstract. So I gave GPT-4 the task of condensing the keywords, while my purpose for the abstraction was to build a “trust thinking framework.” I needed a method that not only represented the virtual concept of trust but could also, through reasoning, later enable digitalization and quantification. Perhaps, I thought, a “formula” could solve this tricky problem.

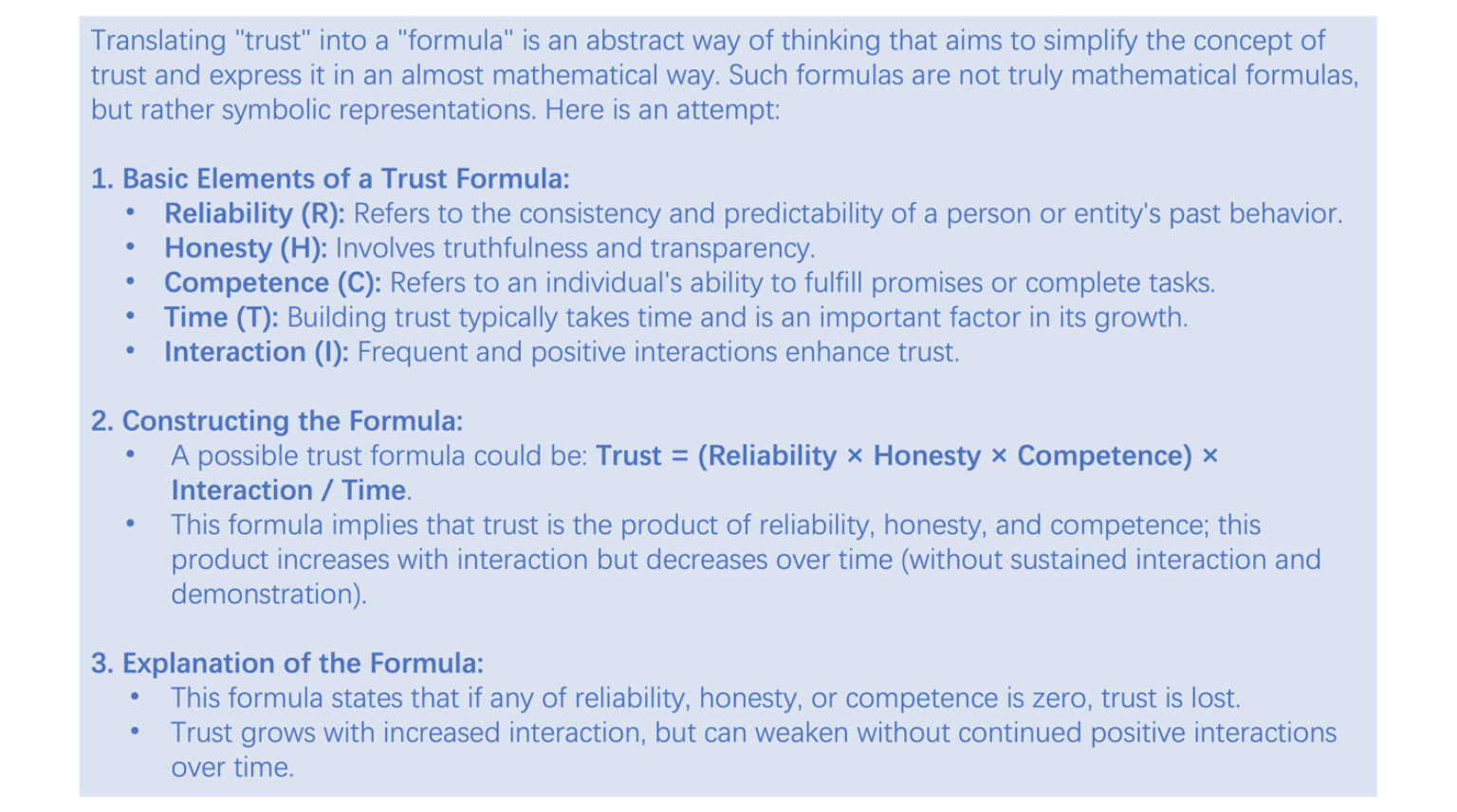

Although I was unsure how GPT-4 would respond, I asked the next question immediately after its previous output (context continuity is very important for GPT-4): “Can trust be transformed into a formula?”

GPT-4’s response was surprising! It really output a formula. At the same time, it explained that expressing trust as a formula is highly simplified and abstract, and that trust is in fact a complex human emotion and psychological state that cannot be fully described by a formula. Instead, the formula serves as a thinking tool to help us understand and analyze the different components of trust. I understood its point, but with a formula, the second step of reasoning became possible. Below is GPT-4’s output of the “trust formula.” I personally think the elements it identified are key, theoretically supported, comprehensive, and logically consistent (Note 3). I present the “trust formula” in the following original form:

Figure 4. Translating Trust Into a Quantifiable Formula

With the first hurdle overcome, we have the trust formula:

Trust = (Reliability × Honesty × Competence) × Time / Interaction

Among these, the most critical elements are reliability, honesty, and competence, while time and interaction act as positive or negative forces influencing trust.
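As a worked illustration, the formula can be evaluated directly. The following is a minimal Python sketch, not part of GPT-4's output: the function name `trust_score`, the idea of rating each factor on a positive numeric scale, and the sample values are all assumptions for illustration. Because Interaction sits in the denominator, a larger value lowers the score as written; the article leaves open how Interaction is measured.

```python
def trust_score(reliability: float, honesty: float, competence: float,
                time: float, interaction: float) -> float:
    """Evaluate Trust = (Reliability x Honesty x Competence) x Time / Interaction.

    Inputs are assumed to be positive ratings on a scale the user chooses
    (for example 1-10); the article does not prescribe a scale.
    """
    if interaction <= 0:
        raise ValueError("interaction must be positive (it is a divisor)")
    core = reliability * honesty * competence  # the three core elements
    return core * time / interaction


# Hypothetical ratings, chosen only to illustrate the arithmetic.
score = trust_score(reliability=8, honesty=9, competence=7, time=2, interaction=4)
print(score)  # 252.0
```

These hypothetical ratings are not the ones behind any score reported in this article.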

1.3 Process: Reasoning to Find the Answer

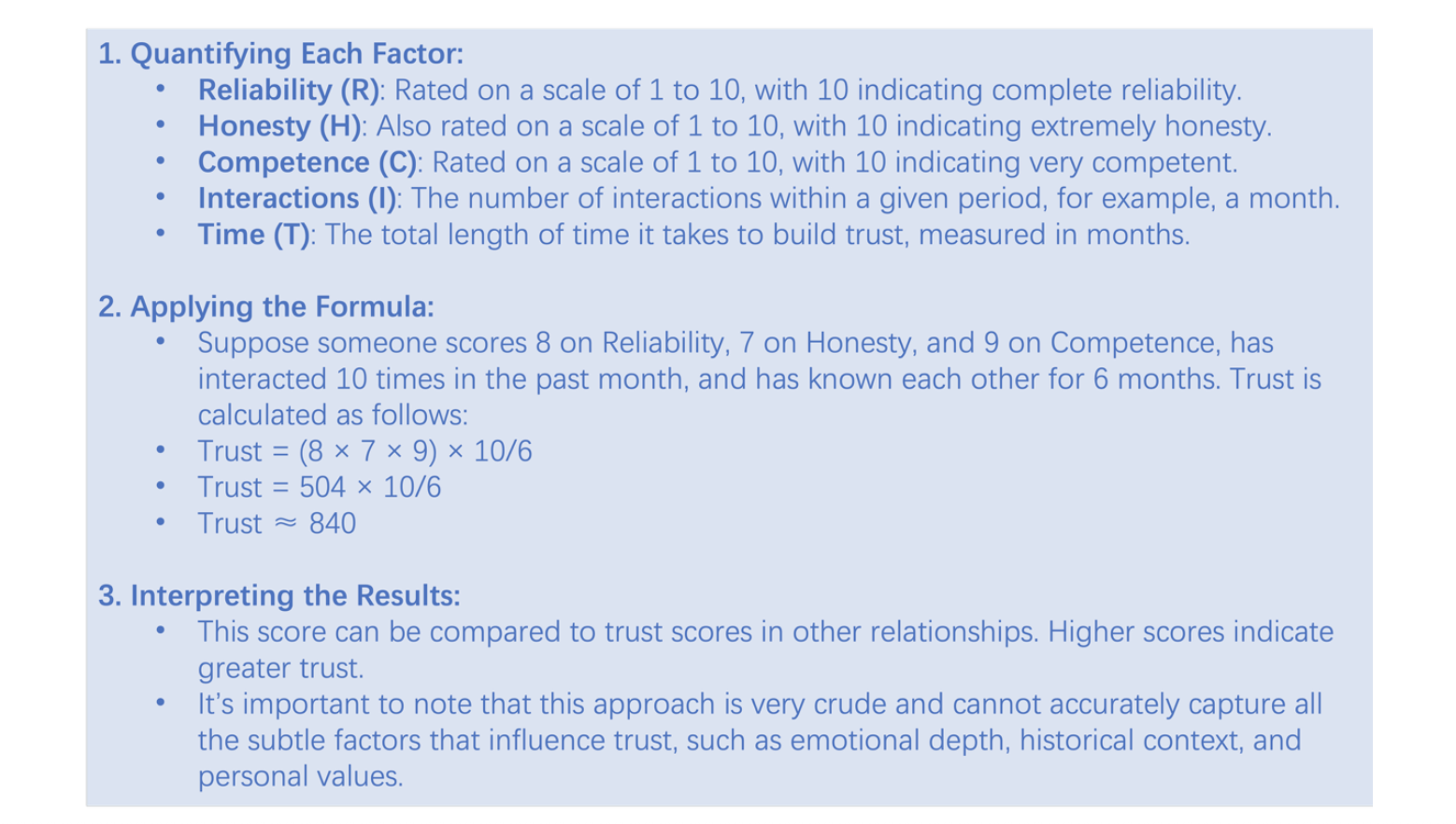

Having only a trust formula could not solve the problem; we also needed a method to “quantify trust.” To attempt digitalization, GPT-4 suggested a simplified method: assigning a quantitative value to each factor in the formula.

With this quantification method in hand to overcome the barrier of digitalization, we needed a “password”: a single indicator that would not only reflect subjective trust levels but also help evaluate the value of trust, including reviewing our upcoming action plans and building a user trust system.

Inspired by Ant Group’s “Sesame Credit Score,” I temporarily called this password “Trust Score” (Note 4). Returning to the example above, the calculated trust score was 840.

II. Building a User Trust System

2.1 Final Confirmation and Key Prompts for Building a User Trust System

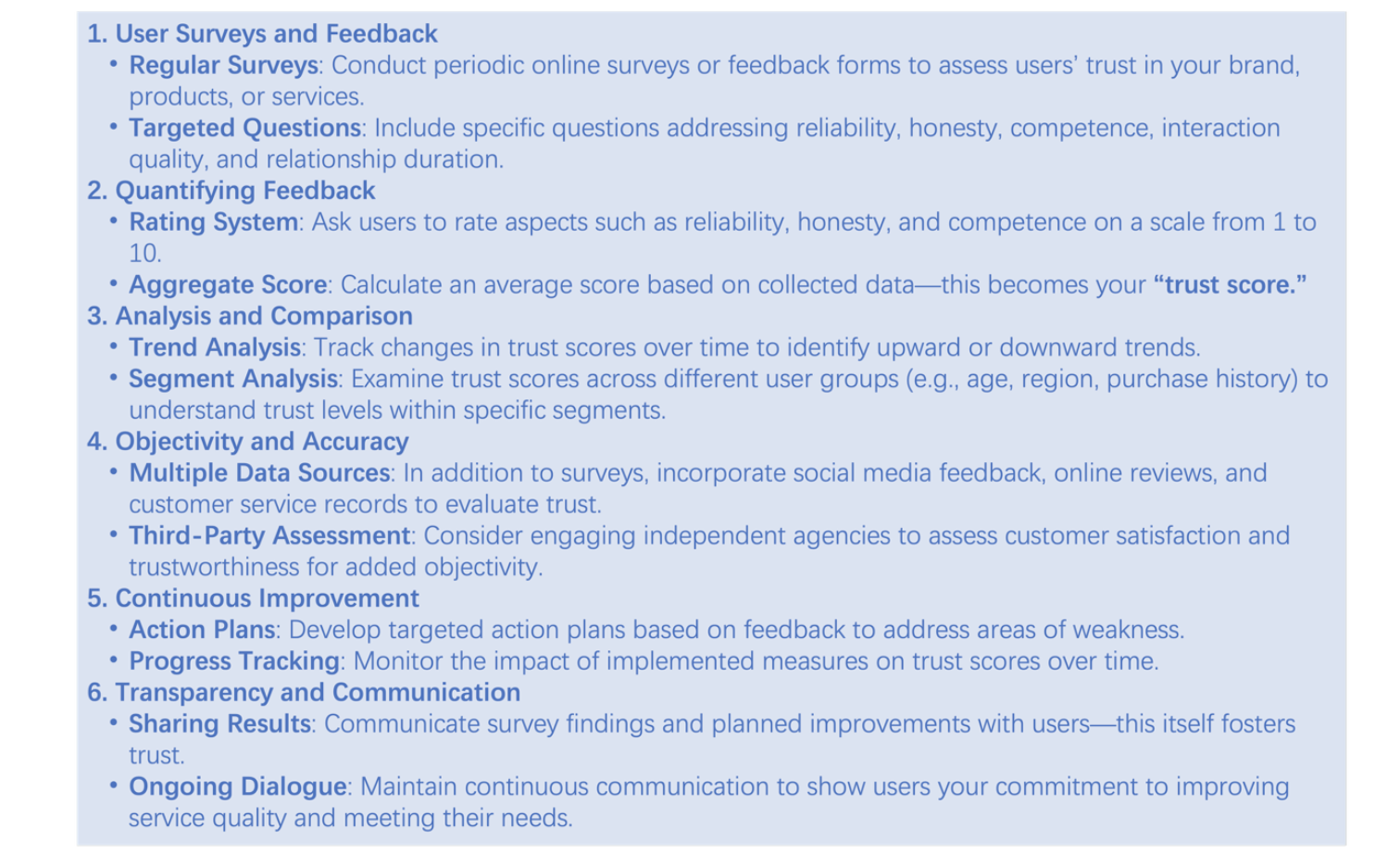

My next question was: “If we want to form a Trust Score based on the trust formula, and each user is an independent individual, how do I know my Trust Score in the user’s mind? And can this Trust Score be objective and accurate enough to serve as an indicator for continuous improvement in the future?”

This question was important for two reasons. First, it clarified for GPT-4 who was evaluating whom, in preparation for applying the framework to the insurance planning scenario. Second, in real business contexts we need a quantified understanding of the level of trust users place in us, and that measure must be objective and accurate enough to provide a reliable foundation for guiding business strategies and improvement plans.

Because the application scenario had not yet been clearly defined, GPT-4’s response lacked specificity. Even so, its comprehensiveness and completeness still offer valuable reference points. Regarding the “objectivity of Trust Scores,” here is a simplified version of its response:

Figure 6. Operational Framework for Evaluating and Strengthening User Trust

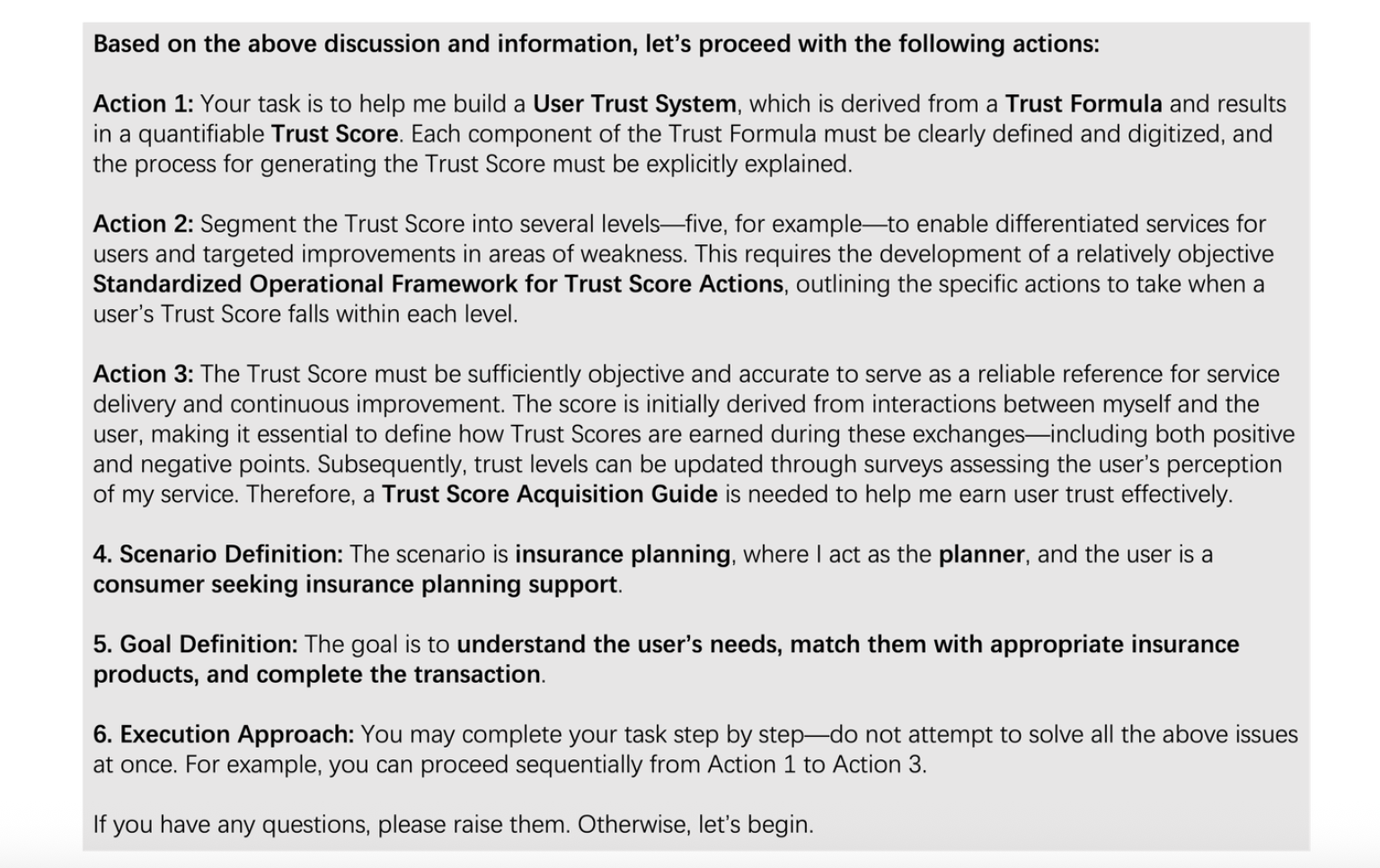

At this point, we had roughly worked out how to abstract the virtual concept of trust (through transfer and mapping), a method for extracting its key information (the trust formula), and the feasibility of quantifying trust objectively (the Trust Score). Next, with the goal of “understanding user needs, matching appropriate insurance products, and completing transactions,” I described a specific scenario to GPT-4 and set out three action requirements: “Building a user trust system,” “Trust Score grading and standard operating procedures,” and “Operational guidelines for obtaining the Trust Score.” Please see the following “Three Action Prompts for Building User Trust”:

Figure 7. Prompt for Constructing a User Trust System in Insurance Planning

2.2 Three Actions for Building a User Trust System

Below is my summary of GPT-4’s “Three Actions for Building a User Trust System,” with details omitted, as a reference for constructing a trust system and managing trust. Readers are welcome to try any methods mentioned here or copy the prompts and adjust them for their own scenarios.

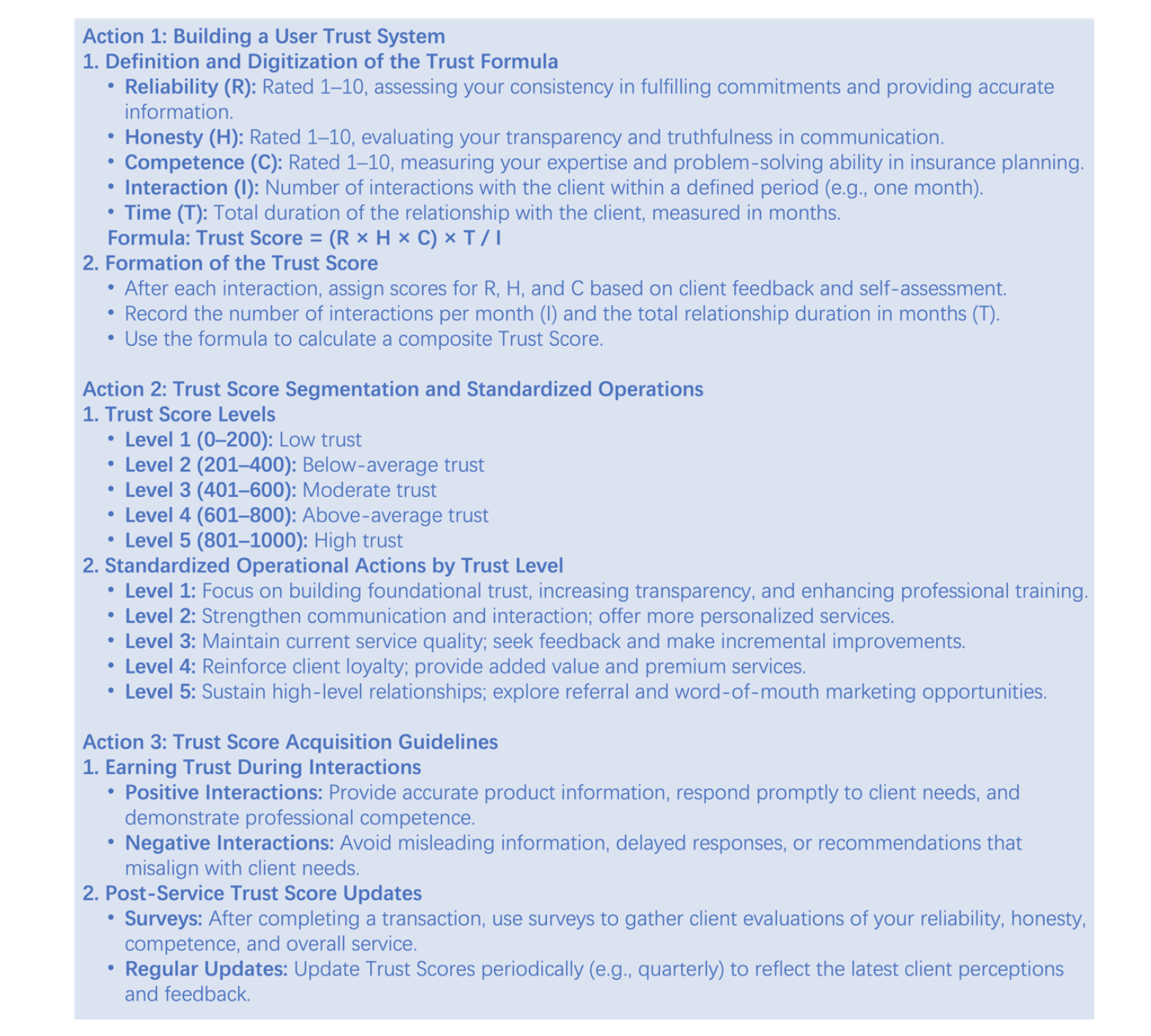

Figure 8. AI-Generated Framework for Building a User Trust System

Action 1: Build a User Trust System.

- Reliability and honesty: evaluated through self-assessment, customer feedback, or direct communication with customers to understand perceptions and satisfaction.

- Competence: improved by regular training and assessments.

- Interaction: record not only frequency but also quality and effectiveness.

- Time: emphasize the importance of building long-term relationships.

- Trust Score: calculate periodically (e.g., monthly or quarterly) to monitor changes in trust levels. Based on results, identify areas needing improvement and set strategies accordingly.
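Action 1's periodic calculation can be sketched as follows. This is an illustrative assumption rather than GPT-4's procedure: the `FactorRatings` and `monitor` names are hypothetical, each period's ratings are assumed to be already collected (from self-assessment, customer feedback, and interaction records), and “areas needing improvement” is simplified to the lowest of the three core ratings.

```python
from dataclasses import dataclass


@dataclass
class FactorRatings:
    """One period's ratings for a planner (hypothetical structure and scale)."""
    reliability: float
    honesty: float
    competence: float
    time: float
    interaction: float

    def score(self) -> float:
        # The article's trust formula: (R x H x C) x Time / Interaction.
        return (self.reliability * self.honesty * self.competence) * self.time / self.interaction

    def weakest_core_factor(self) -> str:
        # The lowest of the three core ratings is flagged as the area to improve first.
        core = {"reliability": self.reliability,
                "honesty": self.honesty,
                "competence": self.competence}
        return min(core, key=core.get)


def monitor(history: dict[str, FactorRatings]) -> list[str]:
    """Summarize each period: score, change from the previous period, focus area."""
    report = []
    previous = None
    for period, ratings in history.items():  # dicts preserve insertion order
        current = ratings.score()
        change = "n/a" if previous is None else f"{current - previous:+.1f}"
        report.append(f"{period}: score={current:.1f} change={change} "
                      f"focus={ratings.weakest_core_factor()}")
        previous = current
    return report


history = {
    "Q1": FactorRatings(reliability=7, honesty=8, competence=6, time=2, interaction=4),
    "Q2": FactorRatings(reliability=8, honesty=8, competence=7, time=2, interaction=4),
}
for line in monitor(history):
    print(line)
# Q1: score=168.0 change=n/a focus=competence
# Q2: score=224.0 change=+56.0 focus=competence
```

Quarterly keys are used here only because the article mentions monthly or quarterly calculation; any ordered period labels work.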

Action 2: Trust Score Levels.

- Different levels correspond to different actions and goals.

- Example: Level 1 (0–200 points): low trust.

  - Recommended actions: strengthen basic communication skills, improve service transparency, ensure accuracy of information.

  - Goal: build basic trust and resolve misunderstandings.

Action 2 (continued): Standard Operating Procedures.

- Regular evaluation: assess each customer’s Trust Score to determine their level.

- Personalized strategy: design and implement service strategies based on trust levels.

- Continuous tracking: track effectiveness and adjust as needed.
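Action 2 can likewise be sketched in code, tying the score levels to the three SOP steps. The article gives only Level 1 (0–200 points), so the sketch encodes that band alone and leaves the remaining bands for readers to define; the names `TrustLevel`, `classify`, `plan_for`, and `tracked_change` are hypothetical, and measuring effectiveness as the net score change across evaluations is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrustLevel:
    """One Trust Score band with its recommended actions and goal."""
    name: str
    low: float                # inclusive lower bound
    high: float               # inclusive upper bound
    actions: tuple[str, ...]
    goal: str


# Level 1 as described in the article; other bands must be supplied by the reader.
LEVEL_1 = TrustLevel(
    name="Level 1 (low trust)",
    low=0, high=200,
    actions=("strengthen basic communication skills",
             "improve service transparency",
             "ensure accuracy of information"),
    goal="build basic trust and resolve misunderstandings",
)


def classify(score: float, levels: list[TrustLevel]) -> Optional[TrustLevel]:
    """Regular evaluation: return the first level whose band contains the score."""
    for level in levels:
        if level.low <= score <= level.high:
            return level
    return None  # the score falls outside every defined band


def plan_for(customer: str, score: float, levels: list[TrustLevel]) -> str:
    """Personalized strategy: turn a customer's level into an action note."""
    level = classify(score, levels)
    if level is None:
        return f"{customer}: no level defined for score {score:g}"
    return (f"{customer}: {level.name}; actions: {', '.join(level.actions)}; "
            f"goal: {level.goal}")


def tracked_change(scores: list[float]) -> float:
    """Continuous tracking: net change from the first to the latest evaluation."""
    return scores[-1] - scores[0] if len(scores) >= 2 else 0.0


levels = [LEVEL_1]  # append your own higher bands here
print(plan_for("Customer A", 150, levels))
print(plan_for("Customer B", 640, levels))  # no band defined yet
print(tracked_change([150, 180, 210]))      # 60
```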

Action 3: Guidelines for Obtaining the Trust Score.

- Positive interactions: ensure each interaction is constructive, focused on customer needs, providing professional advice and solutions.

- Avoid negative interactions: prevent delays, inaccurate information, or unsuitable suggestions.

- Post-service updates: collect feedback through surveys, especially on reliability, honesty, and competence.

- Regular updates: update scores based on feedback and interactions.

- Implementation:

  - Employee training on how to build and maintain trust in every interaction.

  - Monitoring the quality of interactions and adjusting based on customer feedback.
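The “regular updates” guideline does not specify how new feedback should change a score. One common option, swapped in here as an assumption rather than taken from GPT-4's response, is an exponential moving average, which lets recent survey results move a rating gradually instead of replacing it. The function names and the default weight `alpha=0.3` are hypothetical.

```python
def update_rating(current: float, feedback: float, alpha: float = 0.3) -> float:
    """Exponential moving average: move the rating a fraction alpha toward new feedback."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    return (1 - alpha) * current + alpha * feedback


def apply_survey(ratings: dict[str, float], survey: dict[str, float],
                 alpha: float = 0.3) -> dict[str, float]:
    """Return updated ratings; survey entries for untracked factors are ignored."""
    updated = dict(ratings)  # copy so the previous period's ratings stay intact
    for factor, feedback in survey.items():
        if factor in updated:
            updated[factor] = update_rating(updated[factor], feedback, alpha)
    return updated


ratings = {"reliability": 7.0, "honesty": 8.0, "competence": 6.0}
survey = {"reliability": 9.0, "competence": 5.0}  # hypothetical post-service survey
print(apply_survey(ratings, survey))
```

A larger `alpha` makes scores react faster to the latest survey; a smaller one gives more weight to the relationship's history, which is closer in spirit to the article's emphasis on time.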

III. Ensuring Objectivity of Subjective Evaluation and Notes on AI-Driven Implementation

To fully convey the integrity, transparency, and fairness of the methodological validation process to readers, this chapter presents excerpts of GPT-4's responses.

3.1 How to Ensure Objectivity of Subjective Evaluation

When considering the Trust Score and its evaluation methods, readers may already have noticed that some approaches are prone to subjective bias. Therefore, on the question of “how to ensure the objectivity of subjective evaluation,” below is an excerpt from GPT-4’s response:

Figure 9. Strategies for Enhancing Objectivity in Subjective Trust Evaluations

We already know AI systems have advantages in handling data and ensuring consistency, but they have limitations in building human emotional trust and understanding complex interpersonal interactions. Therefore, if we want to apply the trust system to AI planners, some key adjustments and special considerations are required.

3.2 Notes on AI-Driven Implementation

As the saying goes, whoever tied the bell should be the one to untie it, so I put the question to GPT-4 itself. It suggested that when applying a trust model to AI planners, several key dimensions must be considered:

- Demonstrating competence.

- Transparency and honesty (helping users understand how AI generates suggestions).

- Adaptability and personalization (learning and adapting based on user history and feedback).

- Context awareness (AI must understand and adapt to different user contexts and needs).

By integrating these theoretical elements, we can create a more complete and in-depth trust framework that applies not only to human service providers but also to AI systems. The following excerpts on “AI-Driven Trust” are drawn directly from GPT-4’s responses:

Figure 10. Foundational Components for Building Trust in AI Systems

In addition, GPT-4 offered further suggestions: continuous user education, enhancing user participation (channels for feedback and suggestions), use of social proof (customer recommendations and positive experiences), emphasizing personalized services, use of technology (CRM and data analysis tools), transparent communication (sharing both positive and negative feedback), flexibility and adaptability (customized services and solutions), risk management and compliance (clearly explaining risks and uncertainties), and continuous evaluation and improvement (keeping track of industry trends).

So far, our “trust framework” is roughly complete. It is built on existing theoretical reasoning and logical deduction, with strong internal consistency and a solid theoretical foundation. The framework integrates multiple key factors in building trust, such as reliability, honesty, competence, interaction, and time, all of which are widely recognized in trust theory and research.

IV. Other Suggestions

The trust framework above was developed for the insurance planning scenario, but whether it can withstand generalization remains an open question. Every industry and scenario has its own characteristics and needs, which may affect how trust is built and maintained. So while we can provide a general framework, it may need to be adjusted and customized for specific situations.

For example, in healthcare, trust relies more on professional knowledge and privacy protection; in retail, it depends more on product quality and customer service. This is why an effective trust framework must have adaptability and flexibility to fit different environments. Industry knowledge, culture, and expectations are also indispensable in building trust frameworks.

4.1 Other Insurance Scenarios

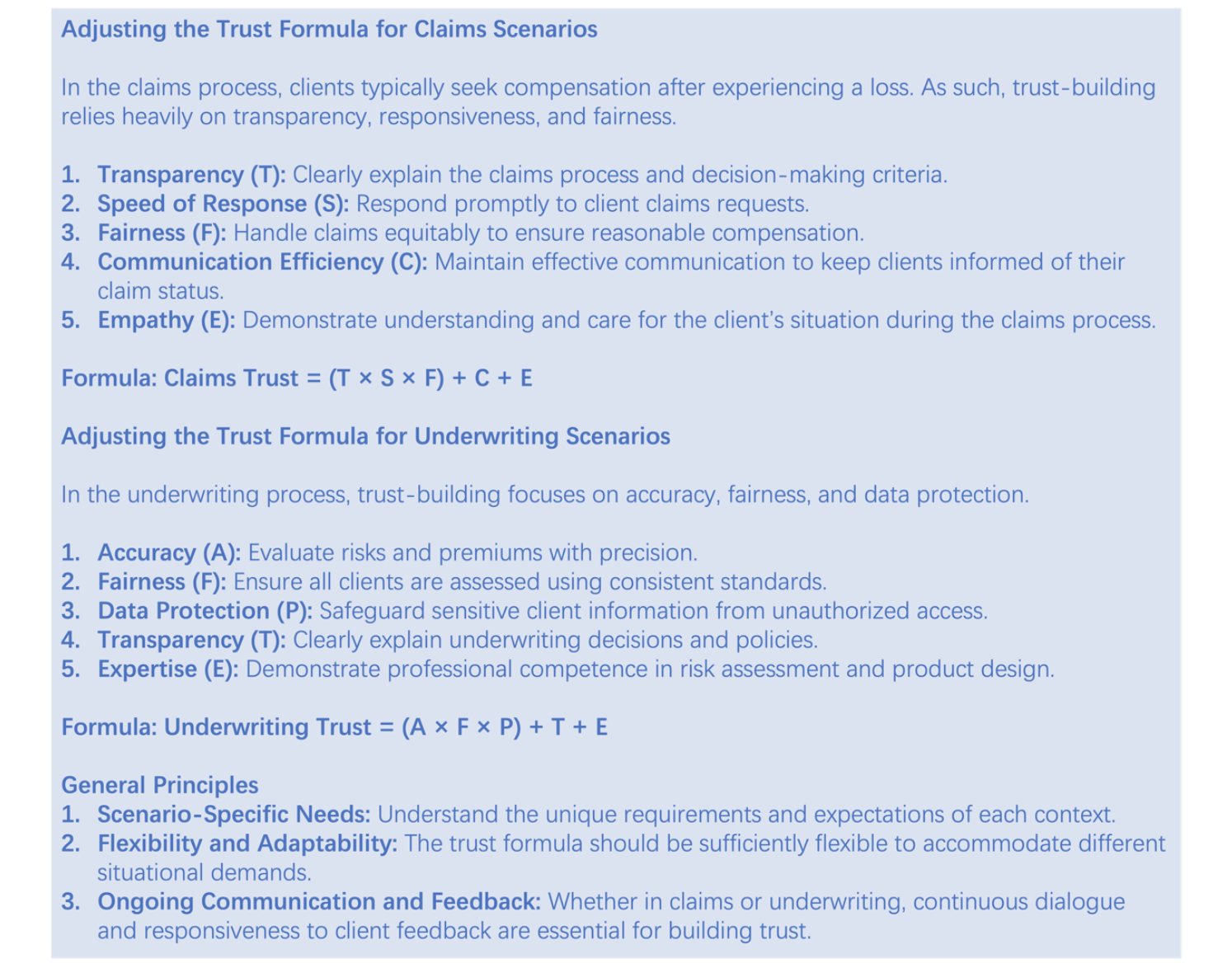

Different insurance scenarios require different trust-building strategies. For example, in claims and underwriting, GPT-4 developed adjusted formulas to fit the unique needs of these contexts. Transparency, fairness, expertise, and effective communication remain essential elements of trust.

Figure 11. Scenario-Based Adjustments to the Trust Formula in Insurance


4.2 Other Industry Scenarios

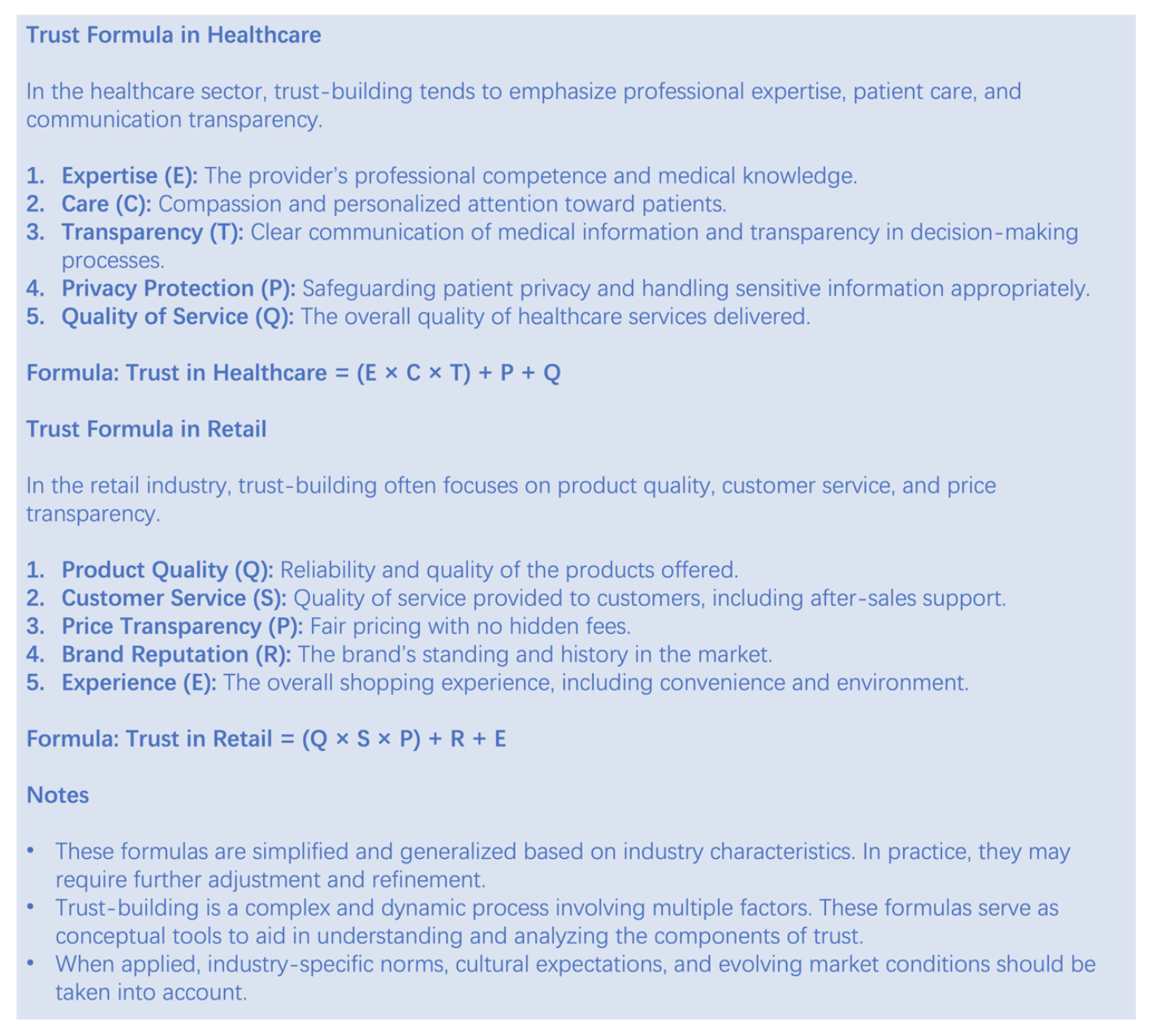

To adapt to different industries, GPT-4 also created examples for healthcare and retail. In healthcare, trust is more tied to expertise, patient care, and communication transparency. In retail, trust emphasizes product quality, service, and price transparency.

Figure 12. Industry-Specific Trust Formulas for Healthcare and Retail

Beyond insurance, healthcare, and retail, other industries such as education, financial services, technology, and online services — as well as areas like corporate management and customer relationship management — can apply similar methods. By adjusting elements, weights, and sequence, each industry can find the most suitable trust formula. For some industries, honesty and transparency may matter more; for others, professional competence and long-term service quality may be emphasized. By understanding industry-specific needs, these factors can be effectively adapted for better trust-building.
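One way to make the idea of adjusting elements and weights concrete is to raise each factor to an industry-specific weight before multiplying. This weighting scheme is an assumption for illustration, not the formulas GPT-4 produced for claims, underwriting, healthcare, or retail; the function name `weighted_trust` and the example weights are hypothetical. With the three original factors and all weights equal to 1, it reduces to the base trust formula.

```python
from math import prod


def weighted_trust(factors: dict[str, float], weights: dict[str, float],
                   time: float, interaction: float) -> float:
    """Generalized formula: product of factor ** weight, times Time / Interaction.

    Only the factors named in `weights` are used, so an industry can add,
    drop, or re-weight elements. Ratings are assumed to be positive.
    """
    if interaction <= 0:
        raise ValueError("interaction must be positive")
    missing = set(weights) - set(factors)
    if missing:
        raise ValueError(f"no rating for: {sorted(missing)}")
    core = prod(factors[name] ** w for name, w in weights.items())
    return core * time / interaction


ratings = {"reliability": 8, "honesty": 9, "competence": 7}

# All weights 1: identical to the base formula.
base = weighted_trust(ratings, {"reliability": 1, "honesty": 1, "competence": 1},
                      time=2, interaction=4)
print(base)  # 252.0

# Hypothetical weighting for an industry where honesty matters more.
honesty_heavy = weighted_trust(ratings, {"reliability": 1, "honesty": 1.5, "competence": 0.5},
                               time=2, interaction=4)
```

Exponents are used rather than multipliers because, in a pure product, multiplying a factor by a constant only rescales every score equally, whereas an exponent changes how strongly differences in that factor move the result.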

V. Conclusion

By constructing a trust system through self-assessment, customer feedback, or direct communication, planners can enhance their reliability and honesty. Companies can use customer Trust Scores and levels to design standardized procedures and personalized strategies, letting data drive decisions. Such a system can serve both as a sales management tool and as a way to improve satisfaction and loyalty. Building a trust system can therefore not only improve customer relationships and sales performance but also bring long-term benefits to companies, including stronger brands, greater competitiveness, and higher employee and customer satisfaction.

In this article, we explored the application of the trust concept in insurance through AI. We discussed methods for building a user trust system, emphasized the importance of ensuring objectivity in subjective evaluations, and highlighted factors to consider when integrating AI. We also explained how to transform trust from an abstract concept into concrete, digitalized practices, and how to apply these principles in different contexts. The key elements of trust include reliability, honesty, competence, interaction, and time.

A major challenge is evaluating reliability, honesty, and competence objectively. Although we provided a basic formula, real-world application requires flexibility to fit different situations. Trust-building is a dynamic process that demands ongoing effort and maintenance. This means regular evaluation and adjustment to reflect industry trends and changing customer needs. From examples in different industries, we can see that trust has both universality and specificity. Thus, the trust framework should be seen as a flexible, adaptive tool, not a rigid rule.

In this exploration, GPT-4, trained on massive amounts of text, displayed strong contextual understanding, creative thinking, logical reasoning, and interactive dialogue ability, making it an ideal partner. It tracked the discussion context, adjusted its responses accordingly, and integrated new viewpoints throughout the process. Its creativity in handling complex problems was impressive, and our interactive dialogue suggested that conversational prompting can rival structured prompting in problem-solving ability.

This experiment was only a starting point: an exercise in logical reasoning and theoretical validation. Looking ahead, AI’s role in building and maintaining trust in insurance will become increasingly important. With technological progress, we can foresee a smarter insurance ecosystem, where AI not only improves efficiency but also strengthens customer trust through precise data analysis and personalized service. In the future, AI will become humans’ best assistant, understanding customer needs more deeply, predicting market trends, and even supporting complex risk assessments and decision-making. This will change how insurance products are developed and marketed, and will redefine the relationship between insurance companies and customers.

Applying these theories to practice means insurance companies must continuously adapt to new technologies while ensuring ethical and legal compliance. In practice, companies should focus on cultivating employees’ understanding and use of AI, while strengthening communication with customers to ensure technology truly meets their needs and expectations. Companies must also take measures to protect customer data security and privacy — both are critical for maintaining and enhancing trust. Through these efforts, AI will not only be a tool but also a major driving force for making insurance more efficient, transparent, and customer-centered.

Trust is fragile, but it is also essential in all human relationships. In today’s fast-changing world, trust is not only the trigger for completing transactions but also the bond for maintaining long-term relationships. How to make AI a key part of building and maintaining trust is a question every decision-maker and manager must consider.

The insurance industry today urgently needs to find a way out of its current challenges. I hope this experiment can serve as a starting point—testing the waters and offering something that may inspire further exploration.

Further Reading

AI Reshapes Trust in the Insurance Industry. Insurance Thought Leadership (ITL). This article was also selected for promotion by the International Insurance Society (IIS).

References and Notes

1. Helen Keller’s reflections on her perception of color: “For me, there are also delicate colors. I have a palette of my own. I will try to explain what I mean: pink reminds me of a baby’s cheek, or a gentle southern breeze. Lilac was my teacher’s favorite color, and it reminds me of faces I have loved and kissed. To me, there are two kinds of red: one is the warm blood of a healthy body; the other is the red of hell and hatred. I prefer the first because it is full of vitality. Likewise, brown comes in two forms: one is the rich, friendly brown of living soil; the other is dark brown, like a worm-eaten tree trunk or a withered hand. Orange gives me a sense of joy and cheerfulness, partly because it is bright, and partly because it gets along well with many other colors. Yellow means richness to me; I think of the sun pouring down, full of life and hope. Green means vitality. The smell brought by warm sunlight reminds me of red; the smell brought by coolness reminds me of green.”

2. Source currently unverified. If any copyright concerns arise, please contact the author.

3. This formula integrates several key factors in trust-building (reliability, honesty, competence, interaction, and time), which are widely recognized across various trust theories and research. For example:

   - Mayer, Davis, and Schoorman’s trust model identifies three core components: ability (skills and knowledge in a specific domain), benevolence (the belief that the trustee has good intentions), and integrity (adherence to principles and values).

   - Rogers’ extended trust model builds on Mayer’s framework by adding contextual factors (how environment and situation affect trust) and historical interaction (how past experiences shape current trust levels).

   - Other relevant theories include social exchange theory and psychological models of trust.

4. “Sesame Credit Score” evaluates users from the merchant’s perspective; “Trust Score” evaluates merchants from the user’s perspective. Though their starting points differ, their nature is similar.