"Trust is the glue of life. It's the most essential ingredient in effective communication. It's the foundational principle that holds all relationships."

— Stephen M.R. Covey

Today, with the rapid advancement of AI technology, the role and methods of establishing trust in insurance transactions are undergoing profound changes. The insurance industry is in a period of technology-driven transformation, where AI applications not only improve operational efficiency but also reshape customer interactions and trust-building processes.

Rachel Botsman (Note 1), an expert in contemporary trust studies, explores in her book "Who Can You Trust?" how technology is reshaping human trust relationships: how it alters our understanding of, and demand for, trust; how sharing-economy platforms build trust through user ratings and feedback systems; and how blockchain enables trust without intermediaries. In the article "[Experiment 23] AI and Trust: The Future of the Insurance Industry" (Note 2), we proposed a detailed framework for constructing and managing trust, examining how AI can establish and maintain trust with customers in the insurance sector. That article covers iterative exploration, the setup of user trust systems, ways to ensure objectivity in subjective evaluations, and considerations for AI implementation. By integrating theory and practice, it offers new perspectives on "how to build trust in insurance transactions in the AI era."

Using examples from the sharing economy, such as ride-hailing and food delivery, we illustrate that platforms do not provide trust as a product. Customers do not inherently trust service providers but choose platforms for their convenience, speed, and efficiency, opting for new trust mechanisms over traditional ones. Transparency can address information asymmetry, but it is not synonymous with trust. Technology does not replace trust; instead, it can strengthen and inspire it. Technology disrupts traditional definitions and relationships of trust, paving the way for new ones.

Drawing on Botsman's definition of trust and the evolution of trust systems, we introduce the concepts of "trust risk" and "trust leap." We map these ideas to the information asymmetry in insurance transactions and explain why face-to-face and non-face-to-face sales differ significantly in customer acceptance, marketing, and service delivery. Rather than aiming to "rebuild trust," it is more accurate to recognize the contextual and personalized nature of trust.

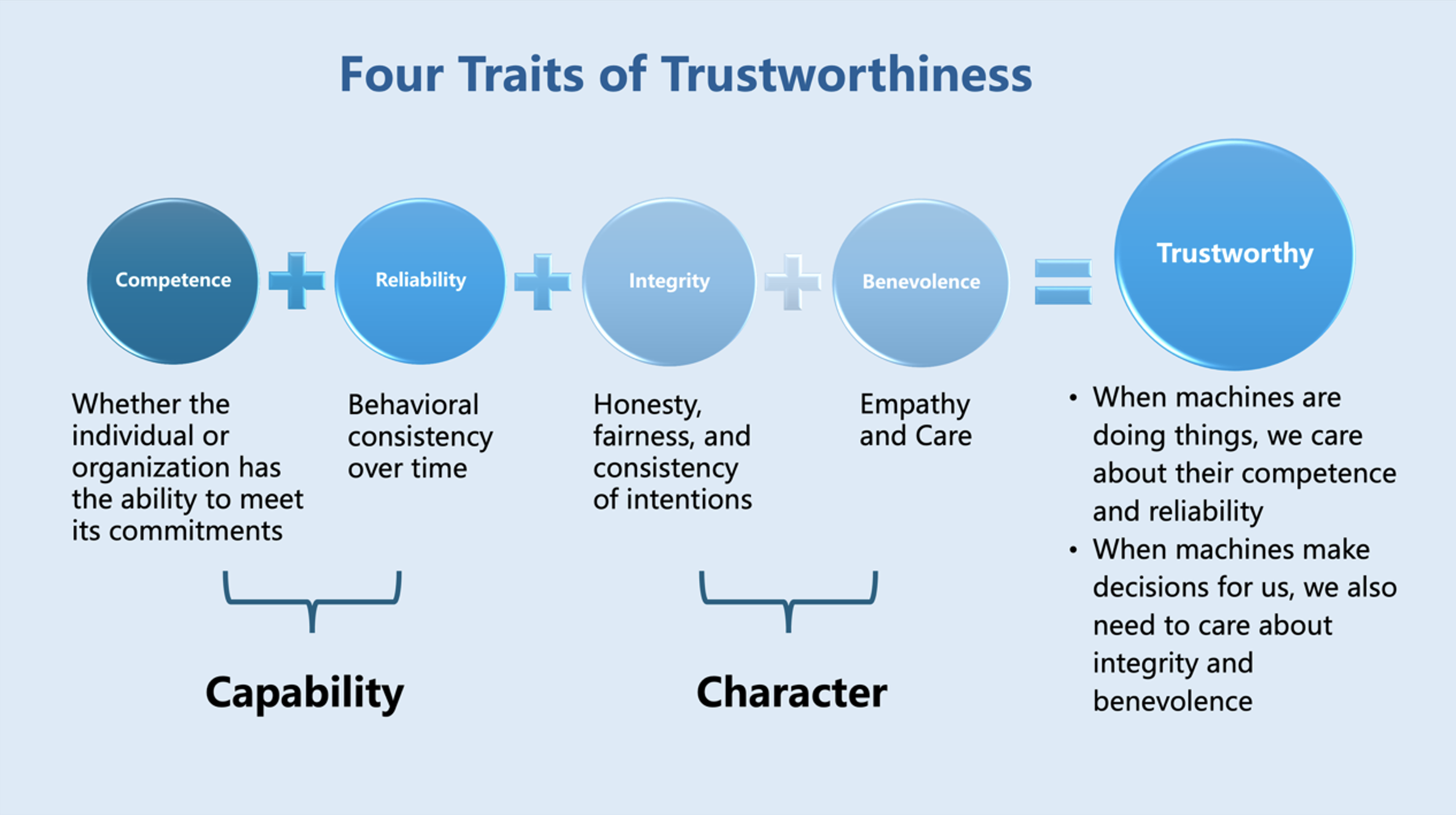

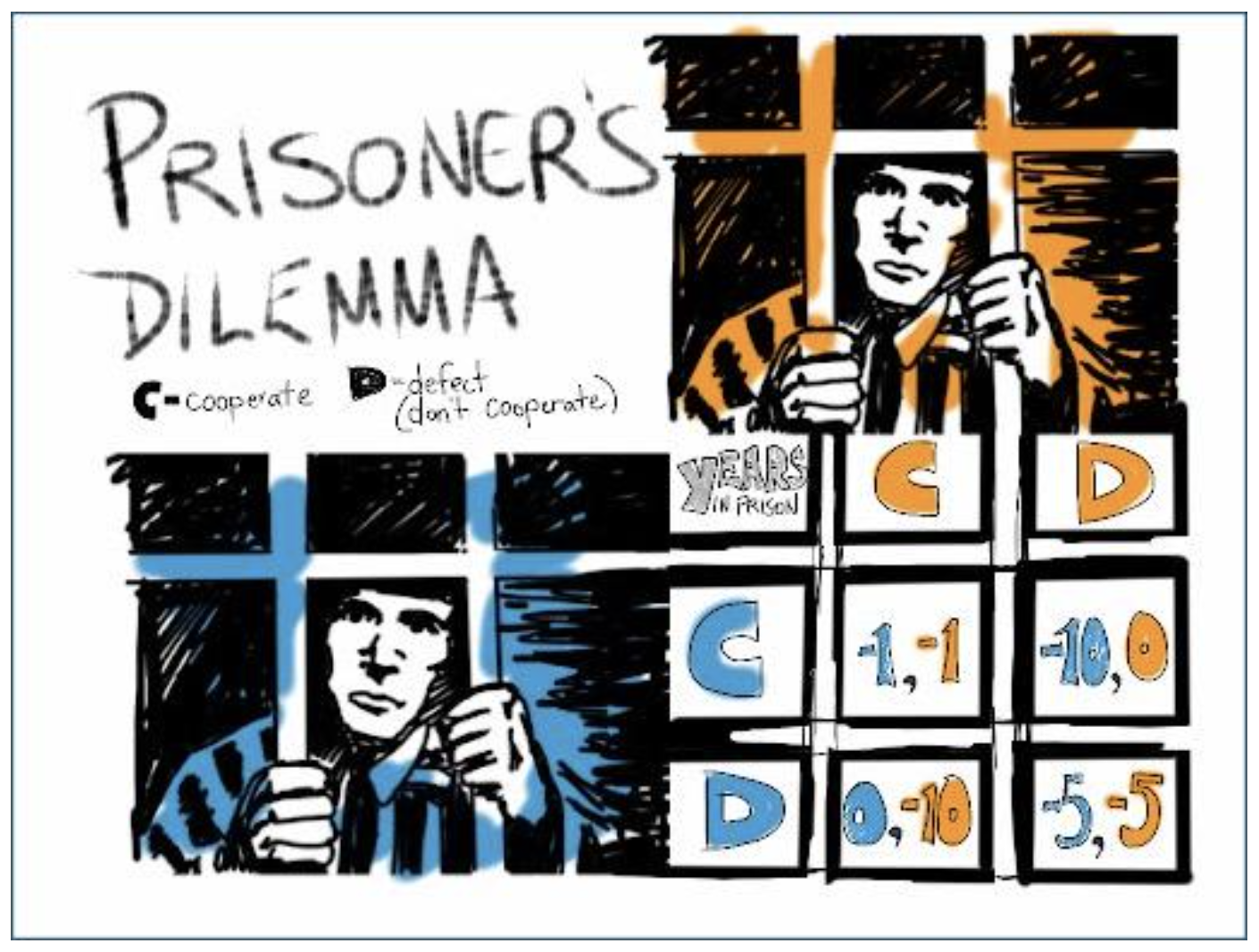

We analyze how to build a trustworthy AI system through four key aspects: "core elements of trust, quantifying trust, technology and transparency, and continuing customer interaction." The best way to address a trust crisis is to make oneself "trustworthy," characterized by four traits: competence, reliability, integrity, and benevolence. From this, we deduce how agents can earn customer trust through "five layers of verification." In quantifying trust, we reiterate the core idea of the "trust formula": Trust = (Reliability × Honesty × Competence) × Time / Interaction. Using the "prisoner's dilemma," we demonstrate that the intent behind transparency is key to solving trust issues. With AI's assistance, continuing customer interaction allows trust to evolve iteratively.

1. Is Technology Replacing Trust?

Most of us in China have used ride-hailing apps like DiDi (similar to Uber) or ordered food on Meituan (similar to Uber Eats). We know neither the drivers nor the restaurants, so why are we willing to spend money on these platforms? Is it because of trust?

DiDi's product is not trust but efficiency and transparency. It improves efficiency by optimizing transportation services and increases transparency by displaying passengers' ratings of drivers and the number of trips each driver has completed. The efficiency, convenience, and speed that technology brings do not enhance trust; instead, we sacrifice trust in service providers to gain these benefits. Transparency, while important, is not equivalent to trust but a means of achieving it. Product and service designs must prioritize transparency, but what matters more is the "intent" behind that transparency and whether it aligns with consumers' expectations. For example, is the intent to sell products or to empower consumers to make informed choices? When the intent behind transparency does not align with consumers' expectations, trust is hard to establish.

Returning to our example, we do not know whether a DiDi driver is skilled, familiar with the city, or truly reliable. However, these concerns are alleviated by the platform's control mechanisms (rating systems, optimized routes, real-time vehicle tracking, emergency alerts, etc.), which show that the platform's intent behind transparency is aligned with users' interests. While these mechanisms limit drivers' freedom, they make trips more predictable for passengers, fostering a sense of security.

Thus, technology does not reshape trust by providing more information but by restricting freedom (Note 3). Monitoring technologies make the unknown more predictable, so the focus should not be on rejecting technology but on ensuring that it does not replace trust and instead strengthens and inspires it. Technology has disrupted traditional definitions and relationships of trust, and new ones are being established.

2. The Definition and Evolution of Trust

In the insurance industry, trust is not only the foundation of transactions but also the bond that sustains long-term customer relationships. To understand trust and how to build it in the AI era, let us first "deconstruct" trust.

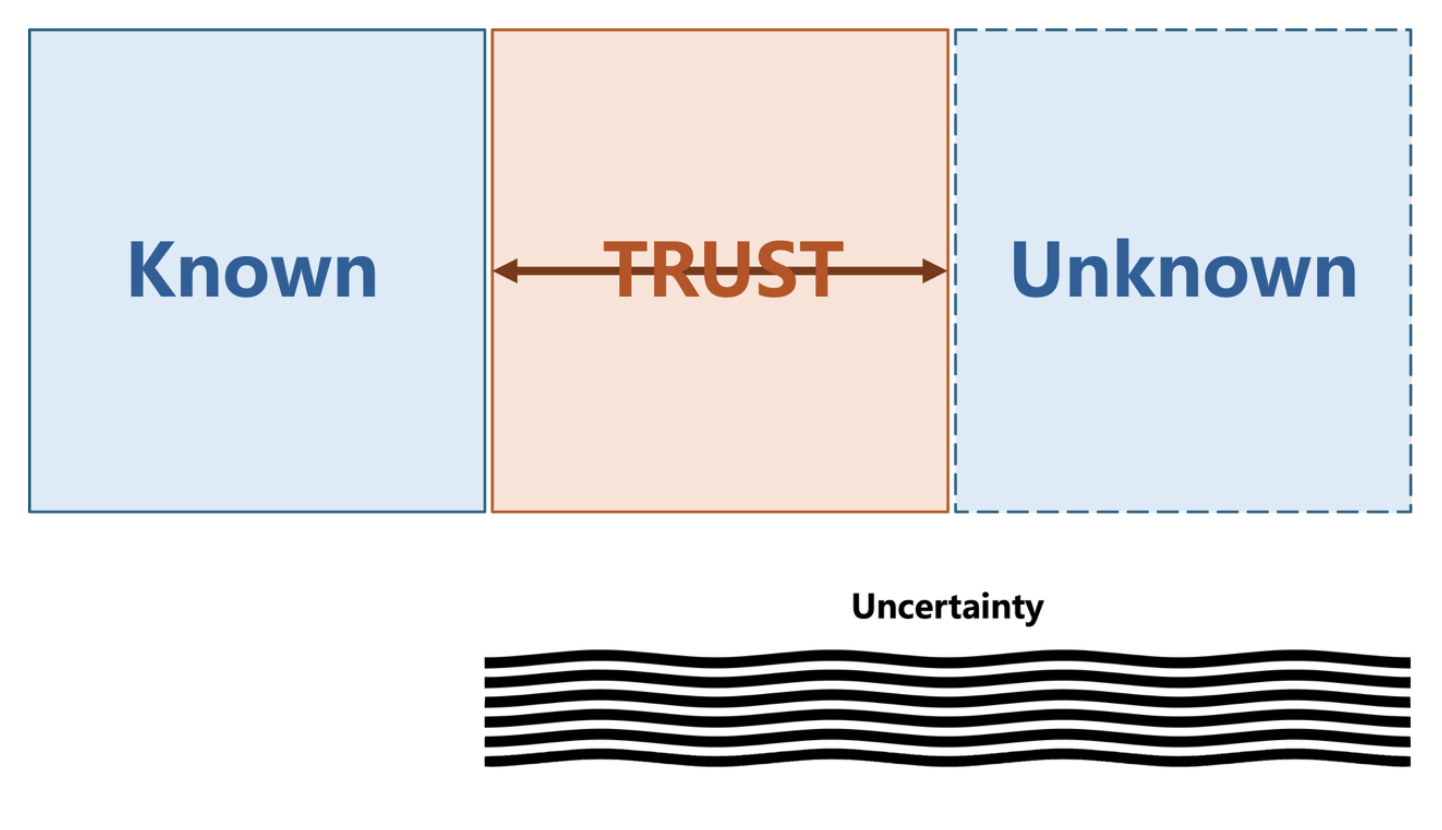

Botsman defines "trust" as a bridge between the known and the unknown, a relationship of confidence we establish with the unfamiliar. This explains why trust is intertwined with fear, hope, and expectation, and why its violation leads to frustration and backlash, such as when internet platforms exploit technology for price discrimination. Trust has evolved from local trust (relying on acquaintances and communities) to institutional trust (relying on corporations and governments) and now to distributed trust (relying on technology platforms and algorithms), as seen in the earlier examples of internet platforms.

As shown below, the uncertainty that separates known people or things from unfamiliar ones is "trust risk," and the process of bridging that gap is the "trust leap." Technology increasingly grounds trust-building in the transparency, reliability, and user feedback systems it provides, narrowing the trust-risk gap and accelerating the trust leap. For example, when people move from traditional insurance services to AI-driven platforms, that move depends on the platform's transparent operations and reliable services, which address the information asymmetry inherent in traditional insurance sales.

However, as mentioned earlier, users do not choose platforms because they trust them but because platforms create new rules of trust that let users prioritize convenience and efficiency and complete transactions in non-traditional, self-directed ways. Trust is therefore context-dependent and highly subjective, which is particularly evident in insurance sales. For instance, "face-to-face sales" by agents and through bancassurance differ significantly from "non-face-to-face sales" via telemarketing and online channels in terms of customer acceptance, marketing, and service delivery.

In the past, we often spoke of "rebuilding trust," but this perspective is insufficient and institution-centric. Instead of focusing on rebuilding trust, we should recognize its contextual and personalized nature and consider how technology can enhance it.

3. Building a Trustworthy AI System

How can we build a trustworthy AI system? We analyze this through four aspects: "core elements of trust, quantifying trust, technology and transparency, and continuing customer interaction".

3.1 Core Elements of Trust

Botsman argues that the best way to address a trust crisis is to make oneself "trustworthy." "Trustworthiness" has four traits (see image below): competence, reliability, integrity, and benevolence. Competence refers to the ability to fulfill promises; reliability is consistency over time; integrity encompasses honesty, fairness, and alignment of intent; and benevolence represents empathy and care.

These traits form the foundation of trust in individuals and organizations, and they apply equally in the insurance industry. To earn trust, insurers must demonstrate competence in risk assessment and claims handling, reliability through consistent service, and benevolence through genuine customer care.

Similarly, as insurance agents, how can we showcase these traits to earn customer trust? Building trust requires passing the consumer's "five layers of verification." When an agent conducts cold calls or visits to complete a sale, how can they gradually build trust from a starting point of "zero trust"? What processes and mechanisms are involved? First, we must view the problem from the consumer's perspective, not the agent's or institution's. In practice, beyond what agents can do themselves, we consider using AI as an agent's assistant (copilot) to serve consumers via online and telecommunication channels, aiming to pass the "five layers of verification." Below is an example of how AI and agents can collaborate to build trust:

FIVE LAYERS OF VERIFICATION

1. Identity Verification:

Who am I? Who do I represent? Who referred me? (Trait: integrity.) AI can use technologies such as facial recognition and big-data analysis to quickly verify the agent's identity, ensuring authenticity and credibility. The agent should clearly introduce themselves, present credentials and company information, and offer referral details to strengthen trust.

2. Qualification Verification:

What are my credentials? What certifications do I hold? What endorsements do I have? (Trait: competence.) AI can query online databases to verify the agent's certifications and training history and return results in real time. The agent should display their qualifications and training records and share official endorsements to strengthen consumer trust.

3. Data Verification:

Is consumer data obtained compliantly? How is it obtained? Are sensitive details hidden? (Trait: integrity.) AI can automatically monitor data collection and processing for compliance, ensuring all consumer information is obtained and used legally and transparently. The agent should explain the data collection process and its legal basis, detail privacy protection measures, and demonstrate the safeguards in place.

4. Motivation Verification:

Why am I calling or visiting? Will I provide follow-up service? Is there a complaint channel? (Trait: benevolence.) AI can analyze the agent's calls and behavior patterns to assess whether their intent is genuine and provide details on follow-up services and complaint channels. The agent should clearly state the purpose of contact and future service plans, explain complaint procedures in detail, and ensure issues are resolved quickly.

5. Process Verification:

Is the call recorded? Is the process reliable? (Trait: reliability.) AI can monitor the entire call or visit, provide recording functions, and document the process for later verification. The agent should inform consumers about recording arrangements, explain their purpose, and provide complete records to strengthen trust.
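One way to picture this sequence is as an ordered checklist in which trust advances only while each layer is satisfied. The minimal sketch below assumes the layers are checked strictly in the order listed and reduces each check to a yes/no outcome; the names `LAYERS`, `VerificationResult`, and `run_verification` are hypothetical, and the booleans stand in for whatever AI-assisted or agent-side checks an insurer actually runs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# The five layers, in the order listed above, each paired with the
# trustworthiness trait it mainly demonstrates.
LAYERS = [
    ("identity", "integrity"),
    ("qualification", "competence"),
    ("data", "integrity"),
    ("motivation", "benevolence"),
    ("process", "reliability"),
]


@dataclass
class VerificationResult:
    passed: List[str] = field(default_factory=list)  # layers cleared so far
    failed_at: Optional[str] = None                  # first layer that failed, if any

    @property
    def complete(self) -> bool:
        return self.failed_at is None and len(self.passed) == len(LAYERS)


def run_verification(checks: Dict[str, bool]) -> VerificationResult:
    """Walk the layers in order and stop at the first one that fails.

    `checks` maps a layer name to whether that layer was satisfied;
    a missing layer counts as not yet verified.
    """
    result = VerificationResult()
    for layer, _trait in LAYERS:
        if not checks.get(layer, False):
            result.failed_at = layer
            return result
        result.passed.append(layer)
    return result


# Example: identity and qualification verified, data handling not yet explained.
r = run_verification({"identity": True, "qualification": True, "data": False})
print(r.passed, r.failed_at)  # ['identity', 'qualification'] data
```

Stopping at the first failed layer mirrors the idea of building up from "zero trust": a consumer who doubts who is calling is unlikely to be persuaded by later layers.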

Therefore, when institutions develop responsible AI to support agents (as users), they must understand that when we ask machines to perform tasks, we need only focus on AI's competence and reliability; but when machines make decisions for us, we must also consider AI's integrity and benevolence, which is what we often call ethics. Moreover, just as ride-hailing platforms satisfy passengers' demand for trust by restricting drivers' freedom, we should consider using AI to supervise the entire transaction process, which protects both parties and facilitates the transaction.

However, while competence and reliability can be objectively evaluated, integrity and benevolence are subjective perceptions and emotional alignments, making them harder to structure and quantify.
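To illustrate what "objectively evaluated" can mean, the sketch below scores competence and reliability from observable service data and deliberately leaves integrity and benevolence out. The metrics are assumptions for illustration, not part of the original framework: competence as the share of promises kept (for example, claims settled within the promised time) and reliability as one minus the coefficient of variation of a service metric over time.

```python
from statistics import mean, pstdev
from typing import Sequence


def competence_score(promises_kept: int, promises_made: int) -> float:
    """Competence as the share of promises fulfilled,
    e.g. claims settled within the promised time."""
    if promises_made <= 0:
        raise ValueError("promises_made must be positive")
    return promises_kept / promises_made


def reliability_score(samples: Sequence[float]) -> float:
    """Reliability as consistency over time: 1 minus the coefficient of
    variation of a non-negative service metric (e.g. monthly response
    time in hours), floored at 0."""
    if not samples:
        raise ValueError("need at least one sample")
    m = mean(samples)
    if m == 0:
        return 1.0  # all-zero samples are perfectly consistent
    return max(0.0, 1.0 - pstdev(samples) / m)


print(competence_score(45, 50))      # 0.9
print(reliability_score([4, 4, 4]))  # 1.0
print(reliability_score([2, 6]))     # 0.5
```

Both numbers come straight from records an insurer already keeps, which is exactly what integrity and benevolence lack.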

3.2 Quantifying Trust

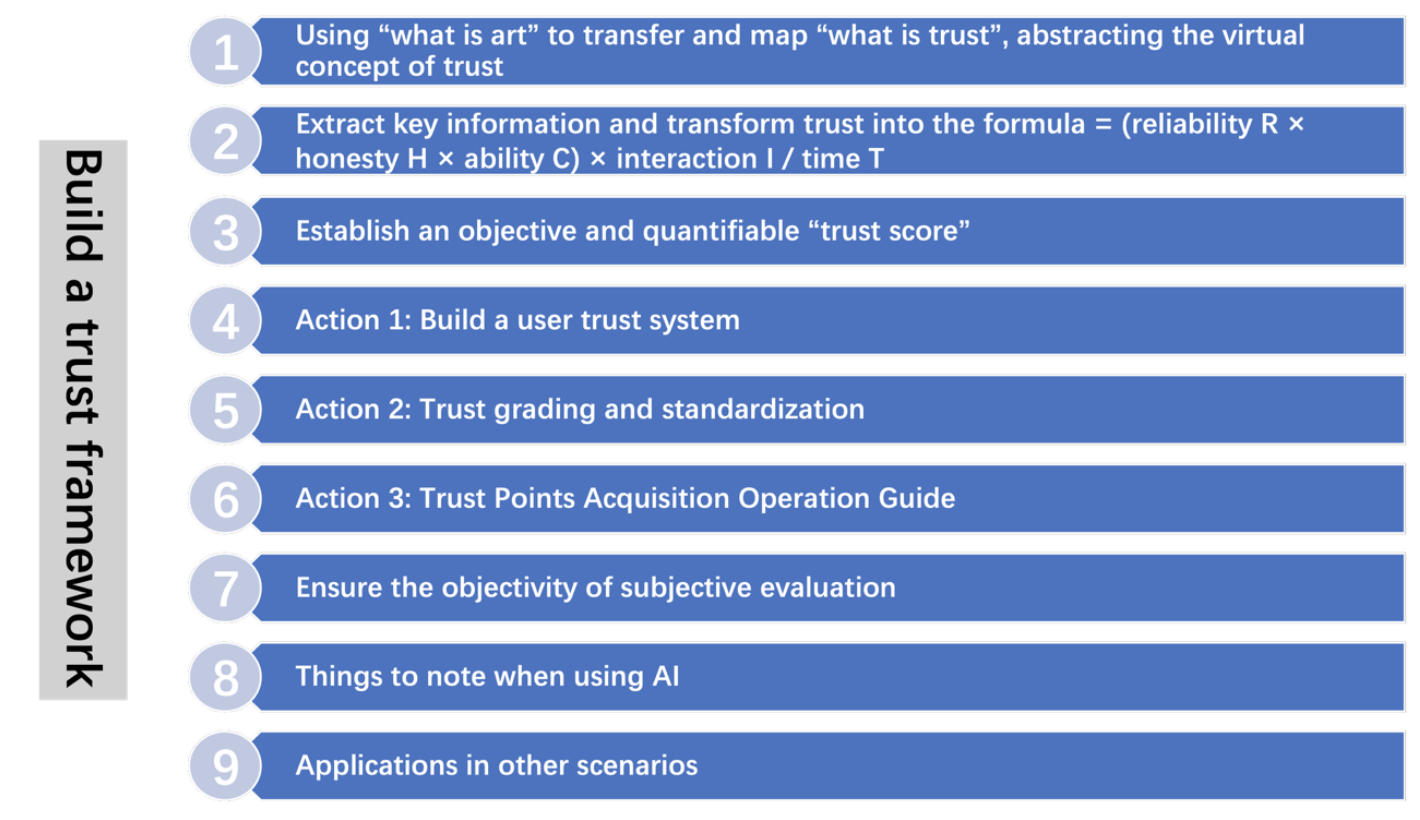

After understanding the core elements of trust, we explore how to quantify them. In [Experiment 23], we proposed methods to structure and quantify trust, enabling the creation of a structured trust framework. Below is a demonstration of building a "trust framework":

In [Experiment 23], we gradually explored and concretized the process of "trust AI-ification," distilling the "Trust Formula: Trust = (Reliability × Honesty × Competence) × Time / Interaction." This showcases AI's potential in handling complex concepts while revealing the multifaceted factors involved in building a trust framework, helping us further quantify and digitize trust. For details, readers may refer to the original article.
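As a worked example, the formula can be computed directly. This is a minimal sketch, assuming the three traits are scored on a 0 to 1 scale and that Time and Interaction are positive quantities in whatever units a given framework defines; the original article does not fix these scales here, and `trust_score` is a hypothetical name.

```python
def trust_score(reliability: float, honesty: float, competence: float,
                time: float, interaction: float) -> float:
    """Trust = (Reliability x Honesty x Competence) x Time / Interaction.

    Assumed scales: the three traits in [0, 1]; time non-negative and
    interaction positive, in units the framework defines.
    """
    for name, value in (("reliability", reliability),
                        ("honesty", honesty),
                        ("competence", competence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    if time < 0:
        raise ValueError("time must be non-negative")
    if interaction <= 0:
        raise ValueError("interaction must be positive")
    return (reliability * honesty * competence) * time / interaction


print(round(trust_score(0.9, 0.8, 0.5, time=12, interaction=6), 2))  # 0.72
print(trust_score(0.0, 0.9, 0.9, time=12, interaction=6))            # 0.0
```

One property of the multiplicative form is worth keeping in mind when interpreting scores: a zero in any one of the three traits drives trust to zero regardless of time, so strength in one trait cannot make up for the complete absence of another.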

3.3 Technology and Transparency

As mentioned earlier, transparency is a key factor in building trust, but it is not synonymous with trust; what matters more is the intent behind it. In the AI era, when we "outsource" trust to algorithms, we must understand the machine's intent (i.e., the intent of the institution that developed it). Institutions must therefore ensure that consumers and users understand the intent behind their algorithms.

Transparency can rebalance information asymmetry. As shown below, in the prisoner's dilemma, if one player knows the other's decision, trust becomes irrelevant. As Diego Gambetta said, "If we had unlimited computational power to map all possible contingencies, trust would not be an issue."
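The point can be made concrete with the textbook prisoner's dilemma payoffs (temptation 5, reward 3, punishment 1, sucker's payoff 0), which are illustrative and not taken from the original article. When the other player's move is known, choosing a move is pure calculation; when it is unknown, the expected outcome depends on a belief `p` that the other will cooperate, which is the gap trust has to fill. In this one-shot game defection remains the better reply in both cases; what transparency removes is the need to estimate `p` at all. The function names are hypothetical.

```python
from typing import Dict

# Row player's payoff for (my_move, other_move); C = cooperate, D = defect.
PAYOFF = {
    ("C", "C"): 3,  # reward for mutual cooperation
    ("C", "D"): 0,  # sucker's payoff
    ("D", "C"): 5,  # temptation to defect
    ("D", "D"): 1,  # punishment for mutual defection
}


def best_response_known(other_move: str) -> str:
    """With the other's move known, no belief about them is needed."""
    return max(("C", "D"), key=lambda m: PAYOFF[(m, other_move)])


def expected_payoffs(p_other_cooperates: float) -> Dict[str, float]:
    """Without that knowledge, each move's value hinges on a belief p."""
    p = p_other_cooperates
    return {m: p * PAYOFF[(m, "C")] + (1 - p) * PAYOFF[(m, "D")]
            for m in ("C", "D")}


print(best_response_known("C"), best_response_known("D"))  # D D
print(expected_payoffs(0.5))  # {'C': 1.5, 'D': 3.0}
```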

Insurers must ensure their AI systems operate transparently and clearly communicate the intent behind these operations to ensure users (including agents and customers) understand and accept them. For example, transparent algorithms and open feedback mechanisms can help customers better understand insurance products and services, thereby enhancing trust in the company.

While technology can improve transparency, trust remains context-dependent and highly subjective. It cannot yet be fully automated through technology or resolved solely through compliance and regulation. Thus, the most suitable model remains human-machine collaboration.

3.4 Continuing Customer Interaction

Trust is a dynamic process requiring customer interaction and feedback mechanisms to iteratively "upgrade" it. Insurers can regularly collect surveys and feedback to adjust and optimize services and products, ensuring they meet user (agent and customer) needs and expectations.

Focusing on customers, businesses can achieve continuing interaction through regular feedback collection, personalized communication strategies, round-the-clock support, loyalty programs, and community engagement. AI plays a pivotal role here, improving efficiency, personalizing services, and deeply analyzing customer data to better understand and meet needs.

For example, AI can analyze vast amounts of customer feedback to identify common issues and improvement opportunities, enabling faster adjustments. Natural language processing and causal AI can categorize open-ended feedback and propose solutions. AI can also create detailed customer profiles with smart tags, driving personalized recommendations based on context, preferences, and goals.
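A toy version of feedback categorization is sketched below. It uses simple keyword matching as a stand-in for the NLP models mentioned above; the categories, keywords, and function names are hypothetical, and a production system would use a trained classifier instead.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical categories and keywords; a stand-in for an NLP classifier.
CATEGORIES = {
    "claims": ("claim", "payout", "reimburse"),
    "pricing": ("premium", "price", "expensive"),
    "service": ("wait", "rude", "no reply", "slow"),
}


def categorize(feedback: str) -> List[str]:
    """Tag one piece of feedback with every matching category (or 'other')."""
    text = feedback.lower()
    tags = [cat for cat, words in CATEGORIES.items()
            if any(word in text for word in words)]
    return tags or ["other"]


def top_issues(items: Iterable[str], n: int = 3) -> List[Tuple[str, int]]:
    """Count tags across all feedback and return the n most common."""
    counts = Counter(tag for item in items for tag in categorize(item))
    return counts.most_common(n)


feedback = [
    "Claim payout took far too long",
    "The premium is too expensive for me",
    "I waited two weeks for a claim decision",
    "Great agent, very patient",
]
print(top_issues(feedback, n=1))  # [('claims', 2)]
```

A single item can carry several tags (the third one counts as both a claims issue and a service issue), which is what lets recurring problems surface across otherwise different complaints.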

AI-powered chatbots can provide 24/7 support, handling queries or complaints instantly and escalating complex issues to human agents, improving overall efficiency and satisfaction.
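The escalation pattern can be sketched as a simple router: answer recognized routine questions automatically and hand complaints, disputes, and anything unrecognized to a human agent. The FAQ entries, trigger words, and the `route` function are hypothetical placeholders; real chatbots typically rely on intent classification rather than substring matching.

```python
from typing import Tuple

# Hypothetical FAQ entries and escalation triggers, for illustration only.
FAQ = {
    "office hours": "<answer about office hours>",
    "policy number": "<answer about where to find the policy number>",
}
ESCALATION_TRIGGERS = ("complaint", "dispute", "lawyer", "refund")


def route(message: str) -> Tuple[str, str]:
    """Return (handler, reply): 'bot' for simple FAQs, 'human' otherwise."""
    text = message.lower()
    # Complaints are checked first so they are never auto-answered.
    if any(trigger in text for trigger in ESCALATION_TRIGGERS):
        return ("human", "Connecting you to an agent now.")
    for topic, answer in FAQ.items():
        if topic in text:
            return ("bot", answer)
    # Anything the bot does not recognize also goes to a person.
    return ("human", "Passing this to an agent who can help.")


print(route("Where do I find my policy number?")[0])                  # bot
print(route("I want to file a complaint about my policy number")[0])  # human
```

Defaulting to a human when nothing matches errs on the side of trust: a customer is never left with a wrong automated answer.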

AI can also design personalized rewards programs based on purchasing behavior, predict churn risks, and take preventive measures to retain customers. Additionally, AI can monitor community interactions, identify active users and common problems, and support offline event planning.
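Churn prediction can be sketched as a risk score over a few behavioral signals, with customers above a threshold flagged for retention outreach. The signals, weights, and threshold below are hypothetical and hand-set for illustration; in practice they would be learned from historical lapse data, for example with a logistic regression model.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    months_since_last_contact: int
    missed_premium_payments: int
    open_complaints: int


# Hypothetical hand-set weights and threshold, for illustration only.
WEIGHTS = {
    "months_since_last_contact": 0.05,
    "missed_premium_payments": 0.30,
    "open_complaints": 0.20,
}
THRESHOLD = 0.5


def churn_risk(c: Customer) -> float:
    """Weighted sum of risk signals, capped at 1.0."""
    score = sum(weight * getattr(c, name) for name, weight in WEIGHTS.items())
    return min(score, 1.0)


def needs_retention_outreach(c: Customer) -> bool:
    """Flag customers whose risk score reaches the threshold."""
    return churn_risk(c) >= THRESHOLD


print(needs_retention_outreach(Customer(6, 1, 1)))  # True  (score about 0.8)
print(needs_retention_outreach(Customer(2, 0, 0)))  # False (score about 0.1)
```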

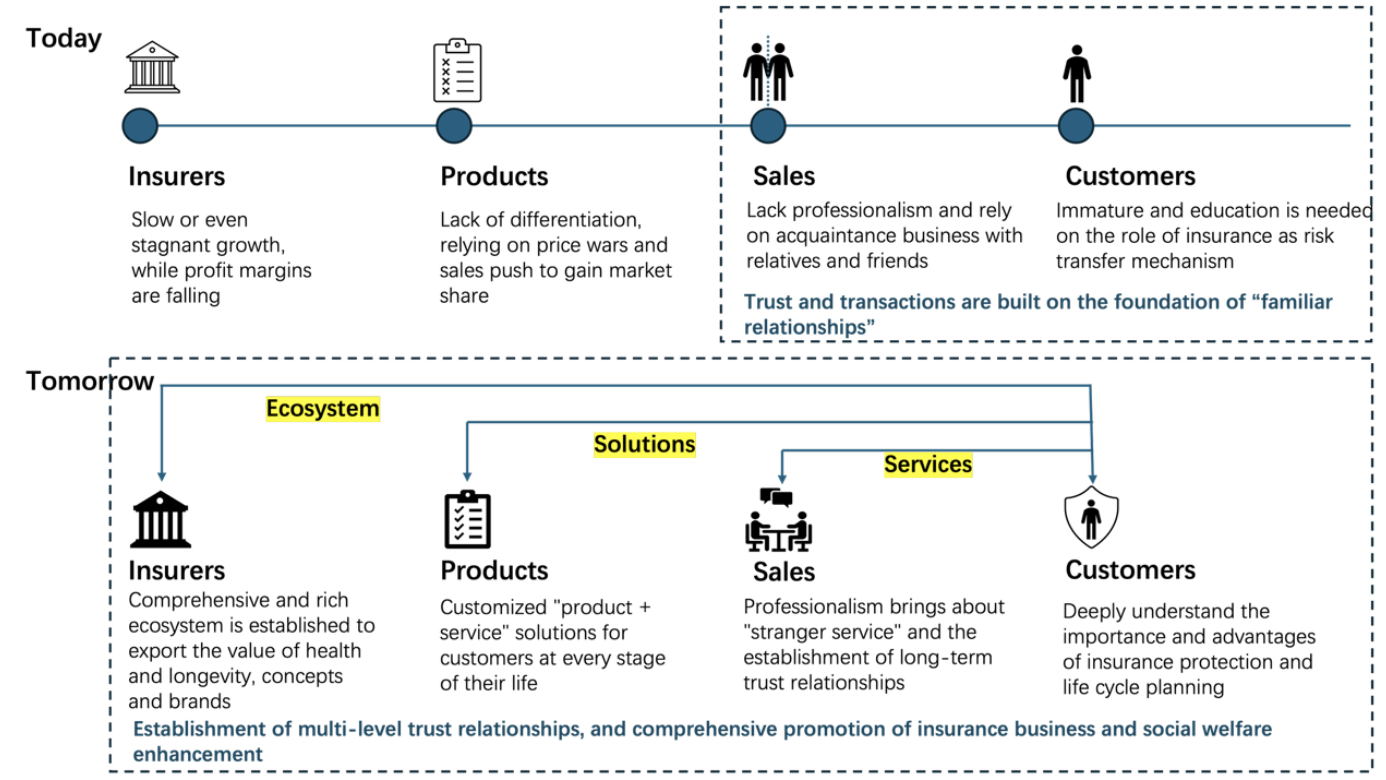

For the Chinese insurance market, Oliver Wyman proposes that building multi-layered trust relationships is central to realizing the blueprint for the life insurance sector (see the image above).

This image shows how insurers can enhance customer interaction and service quality through multi-layered trust relationships. This multi-channel, multi-layered approach aligns closely with the key strategies discussed here for building and maintaining trust in insurance transactions in the AI era. For example, insurers can use AI to achieve seamless communication with customers, complete the five layers of verification, and build trust frameworks. Customers can access professional services through multiple channels and modalities, enjoying personalized communication and recommendations.

Conclusion

Trust is a complex, multi-faceted concept involving psychological, behavioral, and technological factors. Botsman defines trust as "a relationship of confidence with the unknown," explaining why it encompasses fear, hope, and expectation. To build and maintain this relationship, we must understand its core elements: competence, reliability, integrity, and benevolence. Additionally, the five layers of verification — identity, qualification, data, motivation, and process — are critical steps in constructing trust. Through trust frameworks and formulas, we can quantify and optimize the trust-building process.

Trust is at the heart of insurance transactions. Through previous articles, we have explored trust from various angles to comprehensively understand and apply these concepts. For example, in "After 3.5%: What's Next for the Insurance Industry?" (Note 4), we noted that agents must shift from "selling insurance" to "selling good insurance," with future technology serving as AI assistants to intervene in sales behaviors and correct shortcomings of purely human approaches. The strategy is: Use AI pre-sale to identify needs, ensuring customers buy with clarity; use AI during sales to reduce misrepresentation, ensuring customers buy with confidence; and use AI post-sale for service, ensuring satisfaction and referrals.

In "How AI Empowers Insurance Sales" (Note 5), we emphasized that AI assistants will replace traditional tools, with future transactions involving interactions between AI assistants representing consumers and agents. The focus will be on whose AI is stronger, more user-aware, and more trusted.

In "Vertical AI-Driven Insurance Sales Innovation: Unlocking Industry Pain Points and Strategic Turning Points" (Note 6), we explored AI's potential to identify and solve insurance pain points, proposing immersive experiences and strategic turning points to break trust barriers. Killer apps can address long-standing pain points and dismantle trust barriers.

In "From Insurance to Tech: The Transformative Power of Shifting from 'Push and Pull' to 'Self-Drive'" (Note 7), we discussed the real issues in insurance, noting that insurance is a long-term transaction that requires ways to rebuild consumer trust and uphold long-termism, ultimately creating a quality sales environment.

In "Smart Customer Experience: AI's Innovative Applications in Insurance Services" (Note 8), we highlighted that AI-era services are value-centric. AI helps businesses better understand needs and deliver personalized, intelligent services. The concept of "customer autonomy" shifts service paradigms toward value, giving businesses that embrace it a competitive edge.

Ultimately, trust lies with each of us — we decide whom to trust. Healthy skepticism is constructive, driving continuous improvement. As Warren Buffett said, "It takes 20 years to build a reputation and five minutes to ruin it. If you think about that, you'll do things differently." By exploring trust from multiple angles, we better understand how to build and maintain trust in the AI era, standing out in a complex market. Future insurers must continuously monitor technological advancements and customer needs to remain competitive and achieve sustainable growth.

References:

1. Trust in the Digital Age: Why It Matters Now More Than Ever – Rachel Botsman

https://www.youtube.com/watch?v=cdOGqZz6Lqc&t=2961s

2. [Experiment 23] AI and Trust: The Future of the Insurance Industry

https://mp.weixin.qq.com/s/cLpa0BSKSkWeb3zlrsjqrQ

3. The Obsolescence of Trust – Hubert Beroche

https://medium.com/urban-ai/the-obsolescence-of-trust-b33c5d9fe76b

4. After 3.5%: What's Next for the Insurance Industry?

https://mp.weixin.qq.com/s/3ScD_QlhKeLHEeu0UwDe5g

5. How AI Empowers Insurance Sales

https://mp.weixin.qq.com/s/pmEvWEl-Ytp5hqHtP8ZQzA

6. Vertical AI-Driven Insurance Sales Innovation: Unlocking Industry Pain Points and Strategic Turning Points

https://mp.weixin.qq.com/s/dcXt07m4WqAmPmGb0Bjthg

7. From Insurance to Tech: The Transformative Power of Shifting from "Push and Pull" to "Self-Drive"

https://mp.weixin.qq.com/s/1OXxl1cWumi56EaWOoKi3w

8. Smart Customer Experience: AI's Innovative Applications in Insurance Services