Mid-August is supposed to be slow in the world of business, as people get their final bits of vacation in and prep for sending the kids back to school, but quite a number of items caught my eye this week.

I'll start with an analysis of the costs of third-party litigation funding, which projects that insurers could pay as much as $5 billion a year directly to those who are investing in lawsuits against insurers. Including all the indirect costs associated with fighting those lawsuits, insurers could be out as much as $10 billion a year, the research finds. Those numbers are scary—much higher than I, at least, would have guessed.

Then I'll share an item on how parents are increasingly getting some control over their teen drivers and their at-times ill-considered behavior, which could bring down claims while advancing everyone's goal: fewer accidents.

Finally, I'll reflect on the end of AOL's dial-up internet service and what it says about technology life cycles, such as the generative AI revolution that is just moving out of its Wild West phase and into what I think of as the land grab era. That reflection will also let me tell my story about how an inability to find a modular phone jack in the Berkshires in the early 1990s almost kept me from filing an exclusive on IBM that led the Wall Street Journal the next morning.

Third-Party Litigation Funding

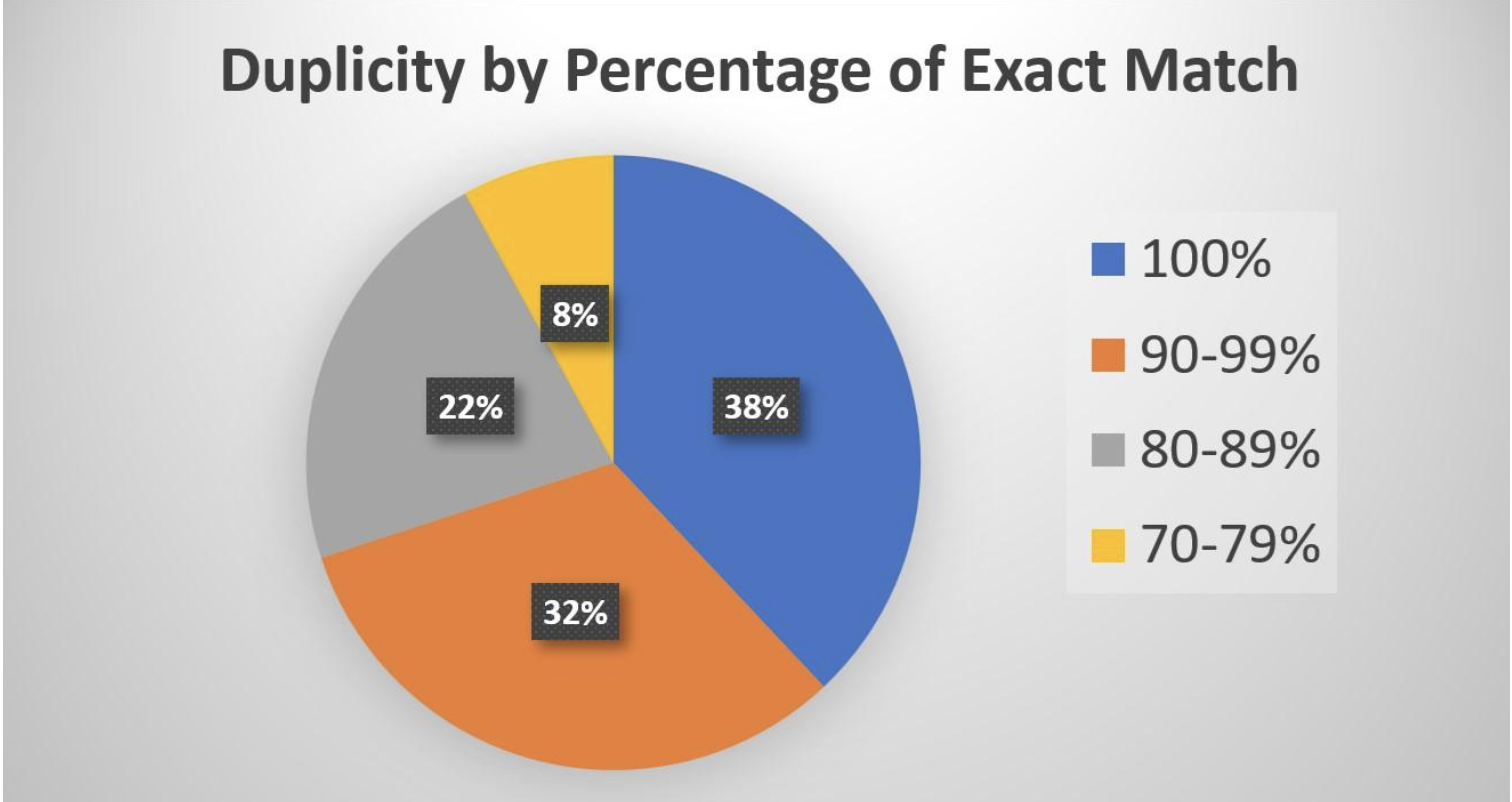

An article in Claims Management quotes "an actuary speaking at the Casualty Actuarial Society’s Seminar on Reinsurance [as saying] the top end of a range of estimates of direct costs that will be paid to funders by casualty insurers is $25 billion over a five-year period (2024-2028)."

The article also quotes another actuary who came up with a smaller, but still frightening, number. He ran 720 scenarios and found that "the five-year cost is most likely to fall between $13 billion and $18 billion (the 25th to 75th percentile), with a mid-range average coming in at around $15.6 billion for the five years from 2024-2028."

This actuary noted that the payments to those funding the litigation could snowball: The funds could let law firms advertise more, bring in more cases, and fight claims longer.

He also cited a study that "puts total costs, including indirect costs, at roughly double the amount of direct costs.... If this were true, the high end of the range—now $50 billion—could add 7.8 points to the commercial liability industry loss ratios for each of the next five years, with the most likely scenario (50th percentile) falling between 4.5 and 5.5 loss ratio points."
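The annual figures at the top of this note come straight from these five-year totals. Here is a quick back-of-the-envelope check, a minimal sketch that uses only the numbers quoted in the article. It assumes that costs are spread evenly across 2024-2028 and treats the cited study's "roughly double" as a flat 2x multiplier. Both are my simplifications, not the actuaries' models.

```python
# Back-of-the-envelope check of the litigation-funding figures quoted above.
# Assumptions (simplifications, not the actuaries' models): costs are spread
# evenly over the five years 2024-2028, and indirect costs are modeled as a
# flat 2x multiplier on direct costs. Dollar amounts are in billions.

YEARS = 5
INDIRECT_MULTIPLIER = 2.0  # "total costs ... roughly double the amount of direct costs"

# Five-year direct-cost estimates quoted in the Claims Management article
ESTIMATES = {
    "high end (first actuary)": 25.0,
    "mid-range average (second actuary)": 15.6,
}


def annualize(five_year_total: float, years: int = YEARS) -> float:
    """Average annual amount, assuming an even split across the period."""
    return five_year_total / years


def total_with_indirect(direct: float, multiplier: float = INDIRECT_MULTIPLIER) -> float:
    """Scale direct costs up to include indirect costs."""
    return direct * multiplier


if __name__ == "__main__":
    for label, direct in ESTIMATES.items():
        total = total_with_indirect(direct)
        print(
            f"{label}: direct ${annualize(direct):.1f}B/yr, "
            f"total ${total:.1f}B over five years (${annualize(total):.1f}B/yr)"
        )
```

The high-end case reproduces the $5 billion a year in direct costs and $10 billion a year in total costs mentioned at the top. The mid-range average works out to roughly $3 billion a year direct, or about $6 billion a year once indirect costs are included.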

Those are scary numbers, and they won't just hurt insurers directly; they will also filter into much higher rates for everyone.

(If you're interested in how the industry can combat third-party litigation funding, you might check out the work being done by our colleagues at the Triple-I, including this piece on the need to increase transparency about who's putting up the money and about what suits they're funding.)

Safer Teen Drivers

When my older daughter, Shannon, was 16, my wife couldn't sleep one night and went off to watch some TV. CNN ran a long piece about dangerous rain, concluding with footage of a car driving through a stream of water crossing a road. The announcer intoned, "Whatever you do, don't do this." My wife was suddenly wide awake. "That's my car!" she said to herself.

It was, too. She ran the video back and confirmed that her low-set sports car was, in fact, being driven through a flash flood. Shannon made it fine, but our new driver was caught.

She explained the next day that she'd been driving to her early-morning horseback-riding lesson and desperately didn't want to be late. She knew only the back-roads route to the barn, so she decided to press on, somehow not wondering why a camera crew was set up by the side of the road.

She got to her lesson on time, but her mom and I delivered a stern lecture with all kinds of threats attached. And Shannon, now 31, has never had an accident or even a moving violation. The same goes for her 29-year-old sister.

I've joked for years that teaching a teen to drive is easy. You just need to have a CNN crew follow them around.

That's sort of what's happening through telematics, such as dashboard cameras, as this survey from Nationwide highlights.

There is a long way to go: The survey finds that 96% of parents think dashcams are valuable but that only 26% of teens currently use them. Still, I see encouraging signs. A recent report by Cambridge Mobile Telematics found that the use of games and social media while driving—a problem especially acute among young drivers—has plunged. And traffic deaths on U.S. highways have now fallen for 12 consecutive quarters and were at their lowest in six years in the first quarter of 2025. The number of deaths—8,055—was still ghastly, but was down 6.3% from a year earlier.

Here's to progress.

The End of AOL Dial-up

To most people, the news about AOL was probably that it still offered dial-up, not that it was finally ending the service. But technology has a "long tail," which is why 163,000 Americans were still using dial-up in 2023, why insurers and other businesses are still having to deal with programs written in COBOL (a language designed in 1959), etc.

The surprise about the long tail made me think it's worth spending a minute on the various stages of a technology megatrend like internet access or, say, generative AI, because there are some other surprises, too, that matter today.

The main one is the overinvestment that frequently happens. People wonder how companies building large language models can justify the hundreds of billions of dollars A YEAR that they're spending just on AI infrastructure. And the answer is... they can't. Not in the aggregate.

But each of the big players can justify its spending individually because the potential win is mind-boggling. Yes, Meta may be getting carried away by offering $100 million signing bonuses to individual AI researchers and by spending some $70 billion on AI infrastructure this year to overcome what's generally seen as a lagging position, but Meta will generate trillions of dollars in market cap if the bet pays off.

In general, a tech megatrend goes like this:

- The Wild West

- The land grab

- The near-monopoly

- The long tail

With AOL, the Wild West was the mid-1990s, when everyone wanted to get on the internet but wasn't quite sure how to do it. Dial-up was a known way to connect to a computer, but there were loads of competitors for AOL. Meanwhile, cable modems were becoming a thing, while DSL was also claiming to be the high-speed solution. WiFi was in its infancy, and Bluetooth was being positioned as a better wireless solution.

Dial-up was pretty quickly outpaced by cable modems as a technology but still won a mass audience, setting AOL up nicely for the land grab.

The land grab was when AOL blanketed the Earth with CDs that gave people immediate access to AOL's service (and played fast and loose enough with the accounting for its marketing expenses that it eventually paid a fine to the Securities and Exchange Commission). AOL won the land grab, and it was fortunate enough to merge with Time Warner at a wildly inflated stock market valuation for AOL before internet access moved to its next phase, where AOL lost big time.

That next phase, the near-monopoly, actually didn't happen as quickly or decisively as it did with, say, IBM-compatible personal computers, Google's search engine or Facebook in the early days of social media. AT&T and Verizon needed many years to emerge as the dominant players. But it did become clear quickly that AOL's "walled garden" approach was a loser. AOL wanted users to sign in through its dial-up and then never leave its site; users were to do all their banking, shopping, etc. through AOL. That approach has worked for Apple, but it became clear in the early 2000s that users were going to branch out across the internet as companies figured out how to make their sites more accessible. Whatever was going to emerge as the near-monopoly, AOL wasn't going to be part of it.

AOL had a market cap of $164 billion when it merged with Time Warner in 2000, but it fell so far that it was sold to private equity in 2021 for just $5 billion, even though stock market indices had roughly tripled in the interim. And that price included Yahoo, another former high flyer, and Verizon's ad tech business.
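To put that collapse in perspective, here is a rough calculation using only the figures above. It is a sketch under two simplifications of mine: "roughly tripled" is treated as exactly 3x, and the entire $5 billion is credited to AOL, even though the price also covered Yahoo and Verizon's ad tech business. That second simplification flatters AOL.

```python
# Rough measure of AOL's fall, using the figures above (dollars in billions).
# Simplifications: "roughly tripled" is treated as exactly 3x, and the whole
# 2021 price is credited to AOL even though it also covered Yahoo and
# Verizon's ad tech business, so AOL's own value was lower still.

MERGER_MARKET_CAP_2000 = 164.0
SALE_PRICE_2021 = 5.0
MARKET_MULTIPLE = 3.0  # assumed growth of stock market indices, 2000-2021


def nominal_decline(start: float, end: float) -> float:
    """Fraction of value lost in plain dollar terms."""
    return 1 - end / start


def share_of_market_tracking_value(start: float, end: float, market_multiple: float) -> float:
    """End value as a fraction of what the start value would be had it simply tracked the market."""
    return end / (start * market_multiple)


if __name__ == "__main__":
    lost = nominal_decline(MERGER_MARKET_CAP_2000, SALE_PRICE_2021)
    kept = share_of_market_tracking_value(MERGER_MARKET_CAP_2000, SALE_PRICE_2021, MARKET_MULTIPLE)
    tracking = MERGER_MARKET_CAP_2000 * MARKET_MULTIPLE
    print(f"Nominal decline: {lost:.1%}")
    print(f"Sale price as a share of a market-tracking value of ${tracking:.0f}B: {kept:.1%}")
```

In other words, AOL lost about 97% of its value in nominal terms, and the 2021 price came to roughly 1% of what its 2000 market cap would have grown to had it merely kept pace with the indices.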

After that sale, all that remained was the long tail, some 35 years after AOL was founded.

When you apply my model to AI, I'd say we're near the end of the Wild West phase. We're unlikely to see a repeat of something like Sam Altman getting fired as CEO of OpenAI and then almost immediately rehired.

We're starting to move into the land grab, even though the technology isn't fully sorted out. Depending on how quickly a new battleground takes shape for agentic AI, we might see the technology sort itself out in the next couple of years. That's when you'll see the big shakeout in stock market valuations, as companies that spent many tens of billions of dollars on AI are identified as losers. (My bet is that Tesla will be the first to lose the hundreds of billions of dollars of market cap linked to its AI aspirations, but we'll see.)

I realize this piece has run far longer than normal, but here's my story about a near-catastrophe with dial-up:

Some friends had recently bought a house in the Berkshires, and I joined them for a weekend there in the early '90s. I had reported a scoop on IBM that I knew would lead the WSJ on Monday and wrote it Sunday afternoon on one of the crummy little TRS-80s that we still used in those days. We then realized that their home's phones were hard-wired into the walls, so there was no way to plug in a cord.

With the deadline approaching, we drove to the nearest town, Huntington, Mass., but couldn't immediately find a solution. We finally went into a store so tiny that we could see the curtain that separated the store from the room in the back where the proprietor lived. She had a modular phone. I said I'd pay her $20 if she let me make a one-minute long-distance call to New York. She looked at me like I had two heads, but she agreed.

The problems weren't over. Her phone was set rather high in the wall, and the cord that connected the wall jack to the phone was only about four inches long. To plug the cord into my computer, I had to hold it above my head. That meant feeling my way around the keyboard as I dialed the number for the computer in New York and waited for the exchange of tones, then typing blind as I entered the code that gave my computer access to the WSJ system.

It took a few tries (as the proprietor watched anxiously, wondering what I was doing to her long-distance bill), but it finally worked.

I hated dial-up. I'm glad it's finally on its last legs.

Cheers,

Paul