End of the Road for OBD in UBI Plans?

In theory, there are benefits from reading vehicle data and being connected to the car, but the reality has proven massively different.


Get Involved

Our authors are what set Insurance Thought Leadership apart.

|

Partner with us

We’d love to talk to you about how we can improve your marketing ROI.

|

Max Kisvarday is managing director, Europe for TrueMotion. He is responsible for TrueMotion’s south and east European operations, customer acquisition, onboarding and management.

Seven questions that simplify the complexities of flood insurance in the midst of regulatory changes and extreme weather events.

John Dickson is president and CEO of Aon Edge. In this role, Dickson oversees the delivery of primary, private flood insurance solutions as an alternative to federally backed flood insurance.

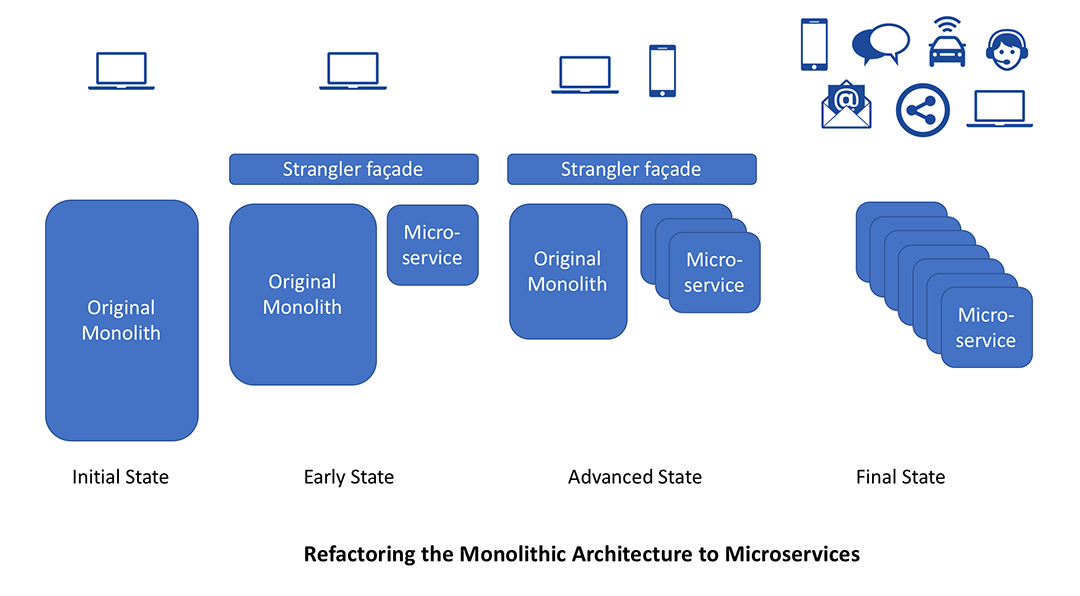

The microservices architecture solves a number of problems with legacy systems and can continue to evolve over time.

Over time, those services can be built directly using a microservices architecture, eliminating calls to the legacy application. This approach is best suited to large legacy applications. For smaller, less complex systems, the insurer may be better off rewriting the application.
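The incremental approach described above, where new services gradually take over calls that used to go to the legacy application, is often called the strangler fig pattern (a name the article itself does not use). The sketch below illustrates it under that assumption: a routing facade sends requests for migrated capabilities to new services and everything else to the legacy system. `legacy_app`, `quote_service`, `StranglerFacade` and the `/quotes` path are hypothetical names invented for illustration.

```python
from typing import Callable, Dict

# A handler takes a request and returns a response (both plain dicts here).
Handler = Callable[[dict], dict]


def legacy_app(request: dict) -> dict:
    # Hypothetical stand-in for the existing monolithic application.
    return {"served_by": "legacy", "path": request["path"]}


def quote_service(request: dict) -> dict:
    # Hypothetical microservice that has taken over one legacy capability.
    return {"served_by": "quote-service", "path": request["path"]}


class StranglerFacade:
    """Sends each request to a new microservice if its capability has been
    migrated; otherwise falls back to the legacy application."""

    def __init__(self, legacy: Handler) -> None:
        self.legacy = legacy
        self.migrated: Dict[str, Handler] = {}

    def migrate(self, path_prefix: str, service: Handler) -> None:
        # From now on, requests under this prefix no longer reach legacy code.
        self.migrated[path_prefix] = service

    def handle(self, request: dict) -> dict:
        for prefix, service in self.migrated.items():
            if request["path"].startswith(prefix):
                return service(request)
        return self.legacy(request)


facade = StranglerFacade(legacy_app)
print(facade.handle({"path": "/quotes/42"})["served_by"])  # legacy
facade.migrate("/quotes", quote_service)
print(facade.handle({"path": "/quotes/42"})["served_by"])  # quote-service
print(facade.handle({"path": "/claims/7"})["served_by"])   # legacy
```

Because callers only ever talk to the facade, each capability can be cut over independently, and the legacy application can be retired once nothing routes to it any more.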

How to make organizational changes to adopt microservices-driven development

Adopting microservices-driven development requires a change in organizational culture and mindset. DevOps practice shifts operational responsibilities, traditionally siloed, into the development organization, and with microservices best practices in place it is not uncommon for developers to handle both. Even where separate development and operations teams exist, they have to communicate frequently to increase efficiency and improve the quality of the services they provide to customers. The quality assurance, performance testing and security teams also need to be tightly integrated with the DevOps teams, with their tasks automated as part of the continuous delivery process.

Organizations need to cultivate a culture of shared responsibility, ownership and full accountability in microservices teams. These teams need a complete view of each microservice from a functional, security and deployment-infrastructure perspective, regardless of their stated roles. They take full ownership of their services, often beyond the scope of their roles or titles, by thinking about the end customer’s needs and how they can help meet them. Embedding operational skills within the delivery teams is important to reduce friction between the development and operations teams.

It is important to increase communication and collaboration across all teams, for example through instant messaging apps, issue management systems and wikis. This also helps other teams, such as sales and marketing, so that the whole enterprise can align effectively around project goals.

As we have seen in these three blogs, the microservices architecture is an excellent solution for legacy transformation. It solves a number of problems and paves the way to a scalable, resilient system that can continue to evolve over time without becoming obsolete. It enables rapid innovation while delivering a positive customer experience. A successful implementation of the microservices architecture does, however, require:

Denise Garth is senior vice president, strategic marketing, responsible for leading marketing, industry relations and innovation in support of Majesco's client-centric strategy.

Even the most prominent blockchain company, Ripple, doesn’t use blockchain in its product. You read that right.

Kai Stinchcombe is cofounder and CEO of True Link Financial, a financial services firm focused on the diverse needs of today’s retirees: addressing longevity, long-term care costs, cognitive aging, investment, insurance and banking.

Streaming of data readings allows operational predictions 20 times earlier. What if risk management saw similar improvements?

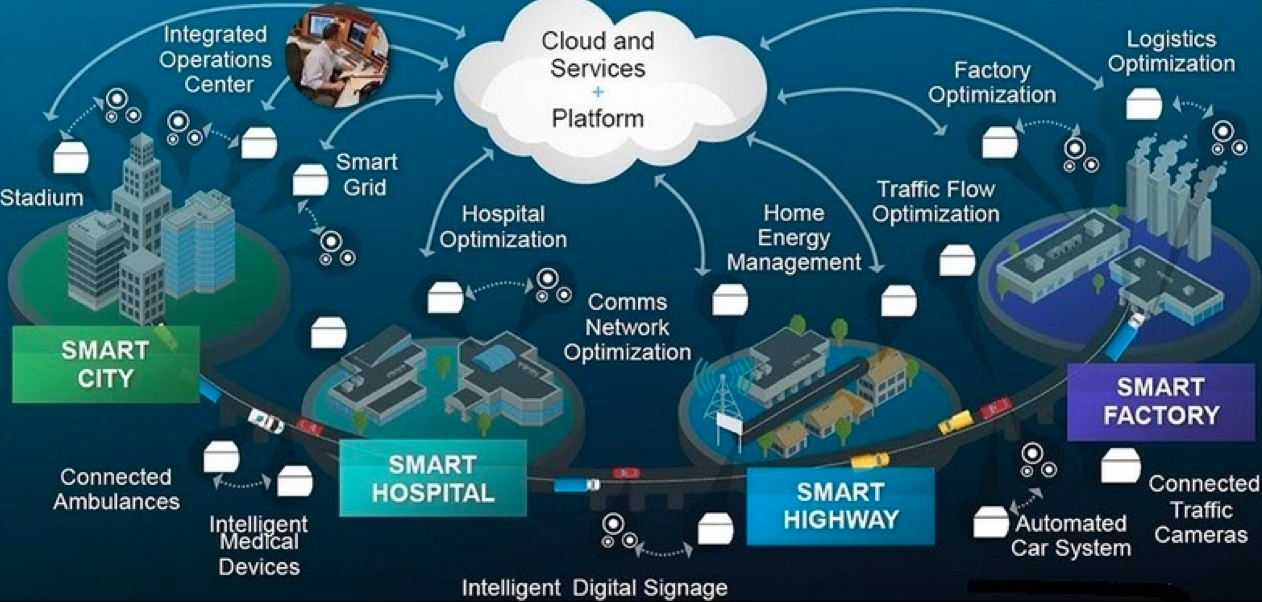

In my 2013 book “Mastering 21st Century Enterprise Risk Management,” I suggested “horizon scanning” as a method for monitoring risk and threats. With IoT, we have the opportunity to extend this from a series of discrete observations into continuous real-time monitoring. But let’s start with basics.

What Is IoT – Intelligent Things?

The acronym IoT, for Internet of Things, is like most IT acronyms fairly meaningless, so the technology is increasingly being referred to as Intelligent Things, a name that is both more meaningful and allows for its expansion beyond its original classification (I will come to that shortly).

IoT technology is about collecting and processing continuous readings from wireless sensors embedded in operational equipment. These tiny electronic devices transmit their readings on heat, weight, counters, chemical content, flow rates, etc., to a nearby computer, referred to as the “edge,” which does some basic classification and consolidation and then uploads the data to the “cloud,” where a specialist analytics system monitors those readings for anomalies.
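To make the edge step more concrete, here is a minimal sketch of what “basic classification and consolidation” might look like, assuming the edge computer groups raw readings by sensor and measurement type and reduces each group to a summary before upload. The `Reading` and `Summary` types and the `upload_to_cloud` placeholder are hypothetical; a real deployment would use its IoT platform’s own SDK to send data to the cloud.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Tuple


@dataclass
class Reading:
    sensor_id: str
    kind: str       # measurement type, e.g. "heat" or "flow_rate"
    value: float


@dataclass
class Summary:
    sensor_id: str
    kind: str
    count: int
    minimum: float
    maximum: float
    average: float


def consolidate(readings: List[Reading]) -> List[Summary]:
    """Classify raw readings by (sensor, measurement type) and reduce each
    group to one summary record, so far fewer records are uploaded."""
    groups: Dict[Tuple[str, str], List[float]] = {}
    for r in readings:
        groups.setdefault((r.sensor_id, r.kind), []).append(r.value)
    return [
        Summary(sensor_id, kind, len(values), min(values), max(values), mean(values))
        for (sensor_id, kind), values in groups.items()
    ]


def upload_to_cloud(batch: List[Summary]) -> None:
    # Hypothetical placeholder: a real edge device would send the batch to
    # its IoT platform's ingestion service instead of printing it.
    for summary in batch:
        print(summary)


readings = [
    Reading("pump-1", "heat", 70.0),
    Reading("pump-1", "heat", 74.0),
    Reading("pump-1", "flow_rate", 12.5),
]
upload_to_cloud(consolidate(readings))
```

Three raw readings become two summary records here; with sensors reporting many times per second, this reduction is what keeps the upload to the cloud manageable.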

The benefits of IoT are already well-established in the fields of equipment maintenance and material processing (see Using Predictive Analytics in Risk Management). Deloitte found that predictive maintenance can reduce the time required to plan maintenance by 20% to 50%, increase equipment uptime and availability by 10% to 20% and reduce overall maintenance costs by 5% to 10%.

Just as the advent of streaming video finally made watching movies online a reality, the streaming of data readings has produced a real paradigm shift in metrics monitoring, making it possible to produce operational predictions up to 20 times earlier, and with greater accuracy, than traditional threshold-based monitoring systems.

Think about it. What if we could achieve these sorts of improvements in risk management?

Monitoring Risk Management in Real Time

The real innovation from IoT comes not from the hardware but from the software architecture built to process streaming IoT data. Traditionally, data was collected, then processed and analyzed. Like traditional risk management, this approach is historical and reactive. Traditional analytics used historical data to forecast what is likely to happen based on historically set targets and thresholds; e.g., when a sensor hits a critical reading, a release valve opens to prevent overload. By then, processing time and energy have already been expended (lost), and the cause still needs to be rectified.

IoT technology continuously streams data and processes it in real time. Streaming analytics attempt to forecast what data is coming next. Instead of initiating controls in reaction to what has already happened, IoT streaming aims to adjust inputs or the system itself to maintain optimum performance conditions. In an IoT system, inputs and processing are continually adjusted based on the streaming analytics’ expectations of future readings.
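The contrast between reacting to a threshold and forecasting from the stream can be illustrated with a deliberately simple sketch. Here the “forecast” is an assumption: a least-squares trend fitted to the last few readings and extrapolated a few steps ahead, not the method any particular IoT platform uses. The sketch only flags the problem early; deciding how to adjust the inputs is out of scope. The function names and parameters (`window_size`, `horizon`) and the sample readings are illustrative.

```python
from collections import deque
from typing import Deque, Iterable, Optional


def threshold_alarm(stream: Iterable[float], limit: float) -> Optional[int]:
    """Traditional approach: react only once a reading reaches the limit."""
    for i, value in enumerate(stream):
        if value >= limit:
            return i
    return None


def slope(window: Deque[float]) -> float:
    """Least-squares slope of equally spaced points at x = 0, 1, ..., n-1."""
    n = len(window)
    x_mean = (n - 1) / 2
    y_mean = sum(window) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(window))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def forecast_alarm(stream: Iterable[float], limit: float,
                   window_size: int = 4, horizon: int = 5) -> Optional[int]:
    """Streaming approach: extrapolate the recent trend `horizon` readings
    ahead and flag the first point where the forecast reaches the limit."""
    if window_size < 2:
        raise ValueError("window_size must be at least 2 to fit a trend")
    window: Deque[float] = deque(maxlen=window_size)
    for i, value in enumerate(stream):
        window.append(value)
        if len(window) < window_size:
            continue  # not enough history yet to estimate a trend
        predicted = value + slope(window) * horizon
        if predicted >= limit:
            return i
    return None


readings = [50 + 2 * i for i in range(12)]  # hypothetical, steadily rising
print("threshold alarm at reading", threshold_alarm(readings, 70))  # 10
print("forecast alarm at reading", forecast_alarm(readings, 70))    # 5
```

On this hypothetical series, the threshold check reacts at reading 10, when the limit has already been hit, while the trend forecast flags the problem at reading 5, leaving time to adjust inputs first. Real sensor streams are noisy, so production systems rely on more robust forecasting models; the linear trend only shows the principle.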

This technology will have a profound and transformative effect on risk management. Once it migrates from measuring hardware environmental factors to software-based algorithms that monitor system processes and characteristics, we will be able to assess stresses and threats, both operational and behavioral.

In the 2020s, risk management will be heavily driven by key risk indicator (KRI) metrics and, as such, will be a prime target for monitoring by streaming analytics. In addition to the obvious environmental monitoring, streaming metrics could be used to monitor staff stress and behavior, mistake (error) rates, satisfaction/complaint levels, process delays, etc., in real time. All of these change over time and can be adjusted in-process to prevent issues from arising.
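As a minimal sketch of one such streaming KRI, assume the indicator is a processing error rate measured over the most recent N transactions and graded against a warning level and a breach level. The class name, window size and limits below are hypothetical; each organization would set its own KRIs and tolerances. Grading starts only once the window is full, so a single early error does not trigger a false breach.

```python
from collections import deque
from typing import Deque, List


class RollingKRI:
    """Tracks a rate-type key risk indicator (e.g. processing error rate)
    over the most recent `window` events and grades it against two limits."""

    def __init__(self, window: int, warn_at: float, breach_at: float) -> None:
        if not 0 < warn_at < breach_at:
            raise ValueError("require 0 < warn_at < breach_at")
        self.events: Deque[bool] = deque(maxlen=window)
        self.warn_at = warn_at
        self.breach_at = breach_at

    def record(self, is_error: bool) -> str:
        self.events.append(is_error)
        if len(self.events) < self.events.maxlen:
            return "warming_up"  # too few events for a meaningful rate
        rate = sum(self.events) / len(self.events)  # True counts as 1
        if rate >= self.breach_at:
            return "breach"
        if rate >= self.warn_at:
            return "warn"
        return "ok"


# Hypothetical stream: ten clean transactions, then a run of errors.
kri = RollingKRI(window=10, warn_at=0.15, breach_at=0.25)
events: List[bool] = [False] * 10 + [True] * 3
statuses = [kri.record(e) for e in events]
print(statuses[-4:])  # ['ok', 'ok', 'warn', 'breach']
```

The warning level is what makes the monitor preventive: it signals a deteriorating trend while there is still time to adjust the process, before the tolerance is breached.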

In addition to existing general-purpose IoT platforms, such as Microsoft Azure IoT, IBM Watson IoT and Amazon AWS IoT, the advent of “serverless apps” (a technology that exists now) will bring an explosion of mobile apps, available from public app stores, that monitor every conceivable data flow and that you will be able to subscribe to and plug into your individual data needs. We can then finally ditch the old reactive PDCA chestnut in favor of the ROI method of process improvement and risk mitigation (see PDCA is NOT Best Practice).

Greg Carroll is the founder and technical director, Fast Track Australia. Carroll has 30 years’ experience addressing risk management systems in life-and-death environments like the Australian Department of Defence and the Victorian Infectious Diseases Laboratories, among others.

The focus can move from life protection to life enablement, support that helps people lead a long and healthy life.

Ross Campbell is chief underwriter, research and development, based in Gen Re’s London office.

It’s time to demand innovative solutions that leverage enhanced benefit plan design with emerging technology and contextual data.

Steven Schwartz is the founder of Global Cyber Consultants and has built the U.S. business of the international insurtech/regtech firm Cyberfense.

The process that many insurers currently use to capture underwriting data illustrates why digitization alone isn’t enough.

Laurie Kuhn is COO and cofounder of Flyreel, the most advanced AI-assisted underwriting solution for commercial and residential properties. She brings 20-plus years of experience in digital innovation to Flyreel, where she leads the company’s product, marketing and operations strategies.

Today's products must be customized, inexpensive and simple to configure and understand -- and insurtech makes all three possible.

Zeynep Stefan is a post-graduate student in Munich studying financial deepening and mentoring startup companies in insurtech, while writing for insurance publications in Turkey.

Social media has led to less human interaction, not more. It has suppressed human development, not stimulated it. We have regressed.

Vivek Wadhwa is a fellow at Arthur and Toni Rembe Rock Center for Corporate Governance, Stanford University; director of research at the Center for Entrepreneurship and Research Commercialization at the Pratt School of Engineering, Duke University; and distinguished fellow at Singularity University.