4 Ways to Improve Carrier-Broker Ties

If you want to be the carrier of choice for your brokers, use National Insurance Awareness Day to focus on broker relationships.

Get Involved

Our authors are what set Insurance Thought Leadership apart.

|

Partner with us

We’d love to talk to you about how we can improve your marketing ROI.

|

BJ Schaknowski is chief sales and marketing officer at Vertafore.

Amid a boom of new insurtechs targeting millennials, these four companies are actually delivering more value to millennial consumers.

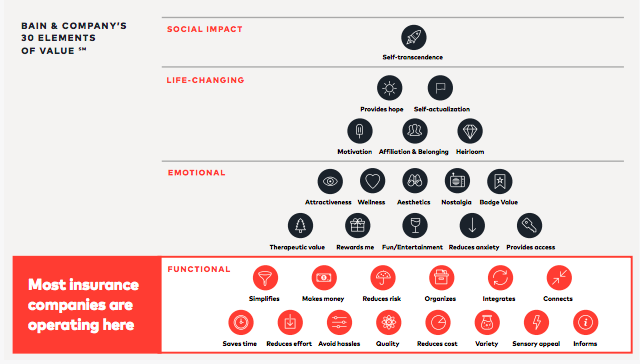

When we studied millennials, one of our research hypotheses was that delivering value at the highest levels means resonating with millennial values: the important and lasting beliefs that influence their behaviors, attitudes and priorities. Our research identified three key values driving millennials: Community & Authentic Connect, Interdependency & Social Good, and Transparency & Autonomy. Through a sequence of ideation, design and user testing, we validated that insurance products that resonate with these values ultimately deliver more value (and higher-level value) to millennials than traditional insurance products, fundamentally changing the way millennials think about insurance.

Looking at the industry at large, we found a handful of insurance companies, mostly startups, that are taking these values to heart, designing solutions that not only address millennial needs and concerns but also resonate with millennial values to deliver value at the highest levels.

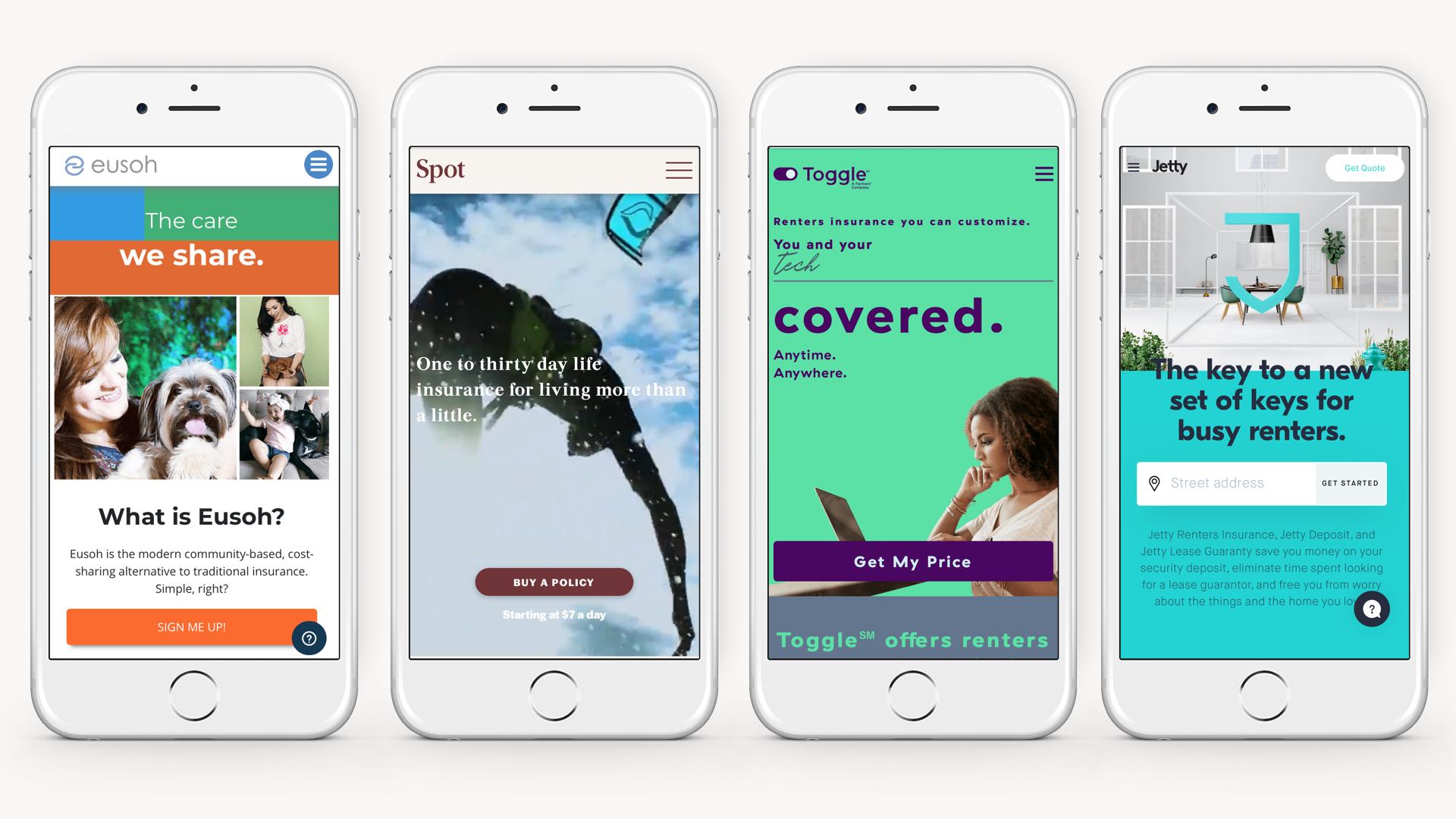

1. Eusoh

Eusoh actually isn’t insurance at all. It’s community-based cost sharing, an alternative to insurance. A small, little-known startup, Eusoh has built a cost-sharing platform for veterinary expenses. Unlike traditional insurance customers, Eusoh members don’t pay monthly premiums. Instead, they pay a $10-a-month subscription fee to join a cost-sharing community group of like-minded pet owners (current groups include Jewish Dog Lovers, Urban Dog Owners, Large Cats, LGBTQ+ Cat Lovers and more). Members pay for their vet visits up front and submit the expenses; these costs are then shared among the group, and members are reimbursed. Members can also see how their funds are distributed through a dashboard that displays every expense submitted for each pet and how much each member was reimbursed. Eusoh also promises significant savings: the average cost of traditional pet insurance is around $800 for 10 months, while Eusoh averages only $133.
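To make the cost-sharing mechanics concrete, here is a minimal Python sketch of settling one period of pooled vet expenses. It assumes, purely for illustration, that the group’s submitted expenses are split equally among members; Eusoh’s actual allocation rules, caps and fees aren’t described here, and the `Member` type and `settle_period` function are hypothetical. The $10 subscription fee is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    expenses: float  # vet costs this member paid up front during the period


def settle_period(members: list[Member]) -> dict[str, float]:
    """Return each member's net position for one period.

    Hypothetical rule: total submitted expenses are split equally, so each
    member's share is total / n. Positive = reimbursement owed to the member;
    negative = the member's contribution to others' expenses.
    """
    if not members:
        return {}
    total = sum(m.expenses for m in members)
    share = total / len(members)
    return {m.name: round(m.expenses - share, 2) for m in members}


group = [Member("Ana", 300.0), Member("Ben", 0.0), Member("Cy", 60.0)]
print(settle_period(group))  # {'Ana': 180.0, 'Ben': -120.0, 'Cy': -60.0}
```

Because every member can see the full ledger, each contribution can be traced to a specific reimbursement, and the net positions sum to zero (up to rounding). That traceability is the transparency the Eusoh dashboard is meant to provide.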

What we like about Eusoh:

Traditional insurance isn’t all that different from Eusoh’s cost-sharing model. At the fundamental level, both distribute expenses and risks among a group of people to lower costs for everyone. The big difference is that, with traditional insurance, customers pay their monthly premiums and, if they don’t file a claim themselves, have no idea where their money goes. What we like about the Eusoh model is the way it surfaces the community aspect of insurance, so customers can see how their contributions help others. In our research with millennials, we learned that being able to see and understand how the money they paid their insurance company was helping other members of their community made insurance feel more valuable to millennials, and more worth the money. By charging a subscription fee and then billing members only for the actual costs incurred by the community, Eusoh avoids a problem that has long plagued the industry: customers feeling that their insurance companies are just trying to rip them off.

2. Life by Spot

Life by Spot offers flexible, short-term life insurance, with one- to 30-day policies starting as low as $7 a day. The insurance is geared toward travelers, athletes and other risk-takers. Life by Spot applicants are instantly approved, and there are few exclusions (Spot is like your cool older brother who “approves of the activities your mom wouldn’t”). Short-term life insurance might seem gimmicky at first. The founders, however, see orienting life insurance around experiences (like skydiving or a surf trip to Brazil) rather than traditional major life events (e.g., marriage and children) as a way to introduce millennials, who increasingly delay those traditional milestones, to life insurance earlier in their journey, creating a funnel for bigger life insurance policies down the road. Life by Spot also plans to deepen its ties to outdoor and athletic communities with a new injury protection policy, starting at $5 a day. It would cover injured policyholders on high-deductible health plans up to the point at which their health insurance kicks in. Set to officially launch later this summer, the new product has already shown promise: the company recently soft-launched it with the Austin Marathon, with impressive results, offering the policy to runners as an add-on when they registered. While supplemental coverage like this is nothing new, the distribution approach is fresh and highly relevant to its target consumer.
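The two mechanics described above, per-day pricing for a one- to 30-day policy and injury coverage that stops where the health plan takes over, can be sketched in a few lines. This is a hypothetical model, not Spot’s actual pricing or claims logic. It assumes a flat daily rate, and it reads “up to the amount when their health insurance kicks in” as paying injury costs up to whatever remains of the policyholder’s deductible.

```python
def policy_cost(days: int, daily_rate: float) -> float:
    """Price of a short-term policy: days x daily rate (hypothetical flat pricing)."""
    if not 1 <= days <= 30:
        raise ValueError("short-term policies run from 1 to 30 days")
    return days * daily_rate


def gap_payout(injury_costs: float, deductible: float,
               deductible_already_met: float = 0.0) -> float:
    """Pay injury costs only until the health plan's deductible is met.

    Assumption: beyond that point the health plan pays, so the gap policy stops.
    """
    remaining = max(deductible - deductible_already_met, 0.0)
    return min(injury_costs, remaining)


print(policy_cost(3, 5.0))                # 15.0
print(gap_payout(4200.0, 3000.0))         # 3000.0
print(gap_payout(800.0, 3000.0, 2500.0))  # 500.0
```

Capping the payout at the remaining deductible keeps the product supplemental: it fills the gap a high-deductible plan leaves and never duplicates what the health plan already covers.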

What we like about Life by Spot:

Our research showed that people are more open to sharing costs and risks (as well as data and other information) with a community of people they identify with. While Spot may seem niche, this is precisely what we like about it: Spot takes a traditional product and spins it for a specific community, one with unique values, risks and behaviors. The spin on life insurance is more than a marketing message (millennials can see right through those, according to our research). The product itself changes to match the values, risks and behaviors of this particular community, creating a sense of authenticity and trust in an industry where both are increasingly hard to come by. By making policyholders feel that they are helping protect a like-minded community and the lifestyle it values, Spot creates more value for millennial consumers than traditional insurers currently offer.

3. Toggle Insurance

Toggle is a new, millennial-focused insurance brand launched by Farmers Insurance late last year. Currently a renters insurance product with add-ons such as credit building and coverage for pets and side hustles, Toggle plans to expand into an entire ecosystem of modern insurance products geared toward millennials. The name Toggle refers to the customer’s ability to “toggle” coverages on and off, and coverage levels up and down, to create fully customizable insurance that fits each customer’s budget, lifestyle and coverage needs.
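As a rough illustration of the “toggle” idea, here is a hypothetical Python model of a policy whose coverages can be switched on and off and dialed up or down. The class names, rates and the sample exclusion are invented for the sketch and do not describe Toggle’s actual product or pricing. The `disclosures` method reflects the transparency point: limits and exclusions for whatever is switched on are listed up front rather than buried in fine print.

```python
from dataclasses import dataclass, field


@dataclass
class Coverage:
    name: str
    monthly_rate_per_level: float  # hypothetical price per coverage level
    level: int = 1                 # coverage level the customer dials up or down
    enabled: bool = False
    exclusions: list[str] = field(default_factory=list)


class Policy:
    def __init__(self, coverages: list[Coverage]):
        self.coverages = {c.name: c for c in coverages}

    def toggle(self, name: str, on: bool) -> None:
        self.coverages[name].enabled = on

    def set_level(self, name: str, level: int) -> None:
        if level < 1:
            raise ValueError("level must be at least 1")
        self.coverages[name].level = level

    def monthly_price(self) -> float:
        # Only enabled coverages are priced, scaled by the chosen level.
        return round(sum(c.monthly_rate_per_level * c.level
                         for c in self.coverages.values() if c.enabled), 2)

    def disclosures(self) -> list[str]:
        # Surface every exclusion on enabled coverages instead of hiding it.
        return [f"{c.name}: {e}" for c in self.coverages.values()
                if c.enabled for e in c.exclusions]


policy = Policy([
    Coverage("belongings", 4.0, exclusions=["flood damage"]),
    Coverage("pets", 2.5),
    Coverage("side hustle", 3.0),
])
policy.toggle("belongings", True)
policy.set_level("belongings", 2)
policy.toggle("pets", True)
print(policy.monthly_price())  # 10.5
print(policy.disclosures())    # ['belongings: flood damage']
```

Pricing each coverage independently, instead of from a few fixed bundles, is what lets the customer see exactly what each choice costs.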

What we like about Toggle:

One of the mistakes we see insurers make is assuming that simply having a digital product is enough to capture millennial consumers. For these players, innovation often ends there: at direct-to-consumer digital products that make buying insurance simpler and easier but fail to deliver value at the highest levels. What we like about Toggle is that it understands that a digital product is simply a foundation; delivering value to millennials requires going beyond digital. In fact, our research found that in the age of Facebook, data breaches and digital burnout, millennials are increasingly wary of new digital products, and autonomy and transparency are more important than ever to securing their loyalty and trust. Toggle takes both autonomy and transparency to the next level. Rather than packaging coverages at a few price points for customers to choose from, Toggle gives consumers true autonomy, letting them select exactly which coverages to include (and which to leave out) and how much of each they want. On transparency, the product goes above and beyond to ensure that customers are never caught off guard by “gotcha moments.” Rather than burying limits and exclusions in the fine print, Toggle calls them out from the get-go and lets customers “toggle” on more coverage where it might be needed.

4. Jetty

Jetty is just one of a handful of new renters insurance startups geared toward millennials. Founded in 2015, Jetty has set out not only to make renters insurance easier, faster and more affordable but also to make the overall experience of renting simpler, safer and more accessible for everyone. What sets Jetty apart from other startups in the renters space is the way it goes beyond insurance to solve renters’ most pressing problems. In addition to renters insurance, the company offers two other products. Jetty Deposit lets renters bypass the financial burden of a security deposit by paying a one-time fee equal to a percentage of the would-be deposit. Jetty Lease Guarantee is a service through which Jetty acts as a renter’s guarantor for a small percentage of the yearly rent. In May, the company launched Student Housing Express, which enables student housing properties to “instantly approve qualifying students who aren't able to get a traditional guarantor.” While Jetty may not be the cheapest renters insurance on the market (most policies start around $9 to $10 a month, compared with Lemonade’s $5), its services beyond insurance demonstrate an understanding of the more holistic experience of being a renter, building loyalty with renters before they even start thinking about insurance.

What we like about Jetty:

Jetty first caught our attention a little over a year ago, when we learned about Jetty Deposit and Jetty Lease Guarantee. To us, these products appeared to be novel solutions to the significant financial hurdles facing millennials (about 70% of whom are renters), and unlike anything other players in the industry were doing. At the time, many of the millennial-oriented insurance products we saw on the market seemed to ignore millennials’ financial realities. Many millennials are burdened with student debt, have little money saved (millennials under 35 have median savings of just $1,500) and haven’t had opportunities to build credit. Many of these products catered to elite millennials with disposable income. Yet when we surveyed millennials last summer, we found that the two greatest challenges they face are financial security and stability, and uncertainty about the future. Insurance can certainly help create more certainty, but Jetty’s supplementary products address the challenge of financial security and stability in a way few other insurance products do. They free up capital that would otherwise be spent on a security deposit, so millennials can build up their savings, pay down debt or save for a major life purchase.
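The capital-freeing effect is simple arithmetic, shown here as a small Python sketch. The $2,000 deposit and 15% fee rate are hypothetical values chosen for illustration, not Jetty’s pricing. The sketch also assumes the one-time fee is not returned at move-out, whereas a traditional deposit may be.

```python
def move_in_cash(deposit: float, fee_rate: float) -> dict[str, float]:
    """Compare a one-time percentage fee with paying the full deposit at move-in.

    fee_rate is a hypothetical fraction (0.15 means 15%). Assumption: the fee is
    not refundable, while a deposit may be returned at move-out.
    """
    if not 0.0 <= fee_rate <= 1.0:
        raise ValueError("fee_rate must be between 0 and 1")
    fee = round(deposit * fee_rate, 2)
    return {"fee_paid": fee, "cash_kept_vs_deposit": round(deposit - fee, 2)}


print(move_in_cash(2000.0, 0.15))  # {'fee_paid': 300.0, 'cash_kept_vs_deposit': 1700.0}
```

Against median savings of $1,500, keeping the difference between a deposit and a fee in hand at move-in can be a meaningful share of a renter’s cushion. The trade-off is that the fee is a cost, while a deposit is potentially refundable.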

__

While all of these products boast seamless digital experiences that make buying insurance faster, easier and more convenient, what makes these four companies special isn’t fancy technology, low prices or convenience, but the way they connect with higher-order millennial values to offer tangible solutions to real-life problems, ultimately cutting through the digital din to deliver more value to millennial consumers.

To learn more about the insights from our millennial research, download our report, Millennials & Modern Insurance.

The article was originally published here.

Emily Smith is the senior manager of communication and marketing at Cake & Arrow, a customer experience agency providing end-to-end digital products and services that help insurance companies redefine customer experience.

The number of wildfires has remained relatively constant, but damage has dramatically increased. Can risk mitigation techniques help?

Scott Steinmetz brings broad industry experience coupled with nearly three decades of practical experience in applied engineering and risk management consultancy.

Stadiums can even set up “no drone zones” with equipment that can intercept drones within a perimeter and turn them around.

Thom Rickert is vice president and emerging risks specialist at Trident Public Risk Solutions (an Argo affiliate). Rickert has over 35 years in the insurance industry, including extensive underwriting and marketing experience in all property and casualty lines of business.

Tired of telling risk managers to grow their quant competencies, the author applied the techniques to the Russian lottery. Therein lies a tale.

I started writing yet another article trying to convince risk managers to grow their quant competencies, to integrate risk analysis into decision-making processes and to use ranges instead of single-point planning, but then I thought, why bother? Why not show how risk analysis helps make better risk-based decisions instead?

After all, this is what Nassim Taleb teaches us: skin in the game.

So I sent a message to the Russian risk management community asking who wanted to join me in building a risk model for a typical life decision. Thirteen people responded, including some of the best risk managers in the country, and we set to work.

We decided to solve an age-old problem: winning the lottery. With help from Vose Software’s ModelRisk, we set out to make history. (Not really: It's been done before. Still fun, though.)

Here is some context:

We set out to test our risk management skills in a game of chance.

June 8, 2019

WhatsApp group created. Started collecting data from past games. Some of the best risk managers in the country joined the team, 15 in total: the head of risk of a sovereign fund, the head of risk of one of the largest mining companies, the head of corporate finance of an oil and gas company, a risk manager from a huge oil and gas company, the head of risk of one of the largest telecoms, information security professionals from Monolith and many others.

June 9, 2019

Placing small bets to do some empirical testing.

June 10, 2019

First draft model is ready…

June 11, 2019

Created a red team and a blue team to model potential strategies simultaneously using two different approaches: bottom-up and top-down. Second model is created…

June 12, 2019

Testing whether the lottery is fair, just in case we can game the system without much math. Yes, some numbers come up more often than others, and there appears to be some correlation between different ball sets, but not enough to produce a betting strategy. The conclusion: The lottery appears to be fair, so we will need to model various strategies.
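The article doesn’t say which test the team ran. A common approach is a chi-square goodness-of-fit statistic on how often each ball is drawn. Balls within a single draw are sampled without replacement, so instead of relying on the textbook chi-square distribution, this sketch estimates the p-value by simulating fair draws and counting how often they look at least as uneven as the real history. The 5-of-36 format and the function names are hypothetical. The check for correlation between ball sets mentioned above is not covered.

```python
import random
from collections import Counter


def chi_square_stat(draws: list[list[int]], pool_size: int) -> float:
    """Chi-square statistic comparing observed ball counts with a uniform expectation."""
    counts = Counter(ball for draw in draws for ball in draw)
    total_balls = sum(len(draw) for draw in draws)
    expected = total_balls / pool_size
    return sum((counts.get(b, 0) - expected) ** 2 / expected
               for b in range(1, pool_size + 1))


def fairness_p_value(draws: list[list[int]], pool_size: int, balls_per_draw: int,
                     n_sims: int = 2000, seed: int = 0) -> float:
    """Monte Carlo p-value: how often a genuinely fair lottery looks at least this uneven."""
    rng = random.Random(seed)
    observed = chi_square_stat(draws, pool_size)
    at_least_as_extreme = 0
    for _ in range(n_sims):
        fake = [rng.sample(range(1, pool_size + 1), balls_per_draw)
                for _ in range(len(draws))]
        if chi_square_stat(fake, pool_size) >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly positive.
    return (at_least_as_extreme + 1) / (n_sims + 1)


# Usage: a simulated (fair) history of 300 draws stands in for real past results.
hist_rng = random.Random(42)
history = [hist_rng.sample(range(1, 37), 5) for _ in range(300)]
p = fairness_p_value(history, pool_size=36, balls_per_draw=5, n_sims=500)
print(f"p-value ~ {p:.3f}")
```

A small p-value would mean the frequencies are more uneven than a fair lottery usually produces. Even then, as the team found, some unevenness is expected by chance and may not be enough to build a betting strategy.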

June 13, 2019

Constantly updating red and blue models as we investigate and find more information about prize calculation, payment, tax implications and so on. The team is now genuinely excited. Running numerous simulations using free ModelRisk.

June 14, 2019

Did nothing, because we all had to do our actual jobs.

June 15, 2019

After running multiple simulations, we selected a low-risk, good-return strategy. Dozens more simulations later, here are the preliminary results, using very conservative assumptions:

Red and blue team models produced comparable results.
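The team’s model isn’t published, and the article doesn’t name the lottery or give its rules. As a stand-in, here is a minimal Monte Carlo sketch of the general technique the author advocates: simulate a ticket portfolio over many draws, then report a range (P5, P50, P95) and a probability of loss instead of a single-point estimate. Everything about the game is a hypothetical assumption: a 5-of-36 format, a flat ticket price, a fixed prize table, and no taxes or prize sharing. The numbers it produces say nothing about the team’s actual strategy.

```python
import random
import statistics

POOL, PICK = 36, 5       # hypothetical 5-of-36 game
TICKET_PRICE = 100.0     # hypothetical price per ticket
PRIZES = {2: 150.0, 3: 1_000.0, 4: 20_000.0, 5: 5_000_000.0}  # hypothetical fixed prizes


def simulate_profit(tickets: list[frozenset[int]], n_draws: int, seed: int = 0) -> list[float]:
    """Profit from buying every ticket in `tickets` for one draw, simulated n_draws times."""
    rng = random.Random(seed)
    cost = TICKET_PRICE * len(tickets)
    results = []
    for _ in range(n_draws):
        winning = set(rng.sample(range(1, POOL + 1), PICK))
        payout = sum(PRIZES.get(len(winning & t), 0.0) for t in tickets)
        results.append(payout - cost)
    return results


def summarize(profits: list[float], budget: float) -> dict[str, float]:
    """Express outcomes as a range of returns on budget plus a probability of loss."""
    cuts = statistics.quantiles(profits, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return {
        "P5": cuts[0] / budget,
        "P50": cuts[9] / budget,
        "P95": cuts[18] / budget,
        "prob_loss": sum(p < 0 for p in profits) / len(profits),
    }


# Usage: a random 200-ticket portfolio, evaluated over 1,000 simulated draws.
pick_rng = random.Random(1)
portfolio = [frozenset(pick_rng.sample(range(1, POOL + 1), PICK)) for _ in range(200)]
profits = simulate_profit(portfolio, n_draws=1000)
print(summarize(profits, budget=TICKET_PRICE * len(portfolio)))
```

Comparing two candidate strategies then means running both portfolios through the same simulation and comparing their ranges and loss probabilities, rather than their average outcomes alone.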

June 16, 2019

Started fundraising.

We agreed that if we collected more than the required budget, we would make two bets: a risk management bet (the risk management strategy) and a speculative bet with much higher upside and, as a result, greater downside (the risky strategy).

Full budget collected within just a few hours. In fact, we collected almost double the necessary amount and, as agreed, moved 50% of the funds into the second investment pool. A separate team set out to develop the risky strategy. While I was an active investor in the risk management strategy, I chose to play the role of a passive investor in the risky strategy and invested only 16% in it.

June 17, 2019

Continued to develop the model, improving estimates every time. Soon, we felt the financial risks were understood by the team members, and we needed to take care of other matters before the big day.

First, took care of legal and taxation risks. Drafted a legal agreement clearly stating the risks associated with the strategy, the distribution of funds and the responsibilities of team members. Each member signed. Agreed to have an independent treasurer.

Then started to deal with operational risks. Apparently, transferring large sums of money, making large transactions and placing big bets is not plain vanilla: it required multiple approvals, phone calls and even a Skype interview. Five team members went through the approvals in parallel in case we needed multiple accounts to execute the strategy.

Probably the biggest risk was the ability of the lottery website to allow us to buy the tickets at the speed and volume necessary for our low-risk strategy. This turned out to be a huge issue, and we found an ingenious solution. The information security team at Monolith did something amazing to solve the problem, and I mean it, amazing. I have never seen anything like this. It’s a secret, unfortunately, because, you guessed it, we are going to use it again.

The strategy that the lottery company recommended for large bets is actually much riskier than the one we selected. How do we know that? Because we ran thousands of simulations and compared the results.

June 18, 2019

The lottery company changed the game rules slightly. Ironically, this slightly improved our 90% confidence interval and reduced the probability of loss. So, thank you, I guess.

More testing and final preparation. The list of lottery tickets waiting to be executed.

In the true sense of skin in the game, team members who worked on the actual model put up at least double the money of other team members.

June 19, 2019

8am. We were just about to make risk management history. A lot of money to be invested based on the model that we developed and had full trust in. I felt genuinely excited: Can proper risk management lead to better decisions? I am sure other team members were excited, too.

By about lunchtime, the strategy was executed: We had bought all the tickets. Now we just had to wait for the 10pm game. I don’t know about the others, but I couldn’t do any work all day. I couldn’t even sit still, let alone think clearly. Endorphins, dopamine, serotonin and more.

At 9:30pm, we did a team broadcast, showing the lottery game as well as our accounts to monitor the winnings, both for excitement purposes and as full disclosure.

Then came the winning numbers. Two team members actually managed to plug them into the model and calculate the expected winnings. We had the approximation before the lottery company did.

You guessed it: We won. After taxes, we got back close to 189% of the money invested (an 89% profit; remember, our estimate was 50% to 100% profit, so the result was well within our model’s range). We almost doubled our initial investment. Not bad for risk management. (Good luck solving this puzzle with a heat map.)

June 20, 2019

More excitement, model back-testing and lessons learned, plus perhaps the most difficult part: explaining to non-quant risk management friends why, no, this was not luck; it was great decision making.

In fact, our final result was close to P50, the median outcome of our simulations. We were actually unlucky, both because we didn’t get some of the high-ticket combinations and, more importantly, because five other people did, significantly reducing our prize pool.
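The article doesn’t describe the prize rules. Its remark that five other winners shrank the team’s prize pool is, however, consistent with a pari-mutuel tier, where a fixed pool is split evenly among all winning tickets. Here is a minimal sketch under that assumption; the function name and the $1,000,000 pool are hypothetical.

```python
def share_of_tier(tier_pool: float, our_winning_tickets: int, other_winning_tickets: int) -> float:
    """Our payout from a prize tier whose pool is split evenly among all winning tickets."""
    if our_winning_tickets == 0:
        return 0.0
    total = our_winning_tickets + other_winning_tickets
    return tier_pool * our_winning_tickets / total


print(share_of_tier(1_000_000.0, 1, 0))            # 1000000.0
print(round(share_of_tier(1_000_000.0, 1, 5), 2))  # 166666.67
```

Under this rule, each additional outside winner dilutes the payout. That is why a result can land near the median of a well-calibrated model and still count as “unlucky.”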

Let me repeat that: We were unlucky and still almost doubled our money.

June 21, 2019

Job well done!

Alex Sidorenko has more than 13 years of strategic, innovation, risk and performance management experience across Australia, Russia, Poland and Kazakhstan. In 2014, he was named the risk manager of the year by the Russian Risk Management Association.

Real direct contracting can revolutionize the healthcare experience for employers by stripping out third parties that don’t add value.

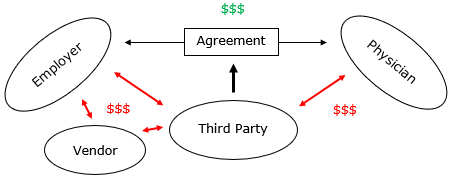

There are too many arrows. There’s nothing “direct” about this model. It’s a dishonest contract: a relationship of three (or more if a vendor is involved), not the two that the name implies.

Yet many employee benefits brokers offer this type of arrangement, replete with the administrative fees that such an arrangement entails. Those fees can add up quickly. Say a benefits broker or vendor charges 10% of the cost of care for implementing the agreement and uses a third-party administrator (TPA) that charges 15% for facilitating the employer-physician relationship.

The cost of a $20,000 knee replacement surgery would balloon to $25,000 because of unnecessary third-party fees, leaving less value for the employer and the physician. Yes, using a preferred provider organization (PPO) that negotiated a discount off a $55,000 charge for inpatient knee surgery would be even worse. But creating a less-bad PPO network via an improper “direct” contract shouldn’t be the goal for employers.
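The fee arithmetic in that example can be checked with a minimal sketch, assuming each intermediary charges a flat percentage of the cost of care (as the hypothetical describes) and that the fees simply add on top of it. The function name and the labels are illustrative only, not taken from any real billing tool.

```python
def cost_with_intermediary_fees(
    cost_of_care: float, fee_rates: dict[str, float]
) -> tuple[float, dict[str, float]]:
    """Total price when each intermediary charges a percentage of the cost of care."""
    # Each fee is computed on the underlying cost of care, not on a running total.
    fees = {name: cost_of_care * rate for name, rate in fee_rates.items()}
    return cost_of_care + sum(fees.values()), fees


# Illustrative rates from the text: 10% broker/vendor fee, 15% TPA fee.
total, fees = cost_with_intermediary_fees(
    20_000, {"broker or vendor": 0.10, "TPA": 0.15}
)
for name, fee in fees.items():
    print(f"{name}: ${fee:,.0f}")  # broker or vendor: $2,000 / TPA: $3,000
print(f"total: ${total:,.0f}")  # total: $25,000
```

Adding percentages of the same base keeps the example faithful to the text; if a TPA instead charged its 15% on the broker-inflated price, the total would come out higher still.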

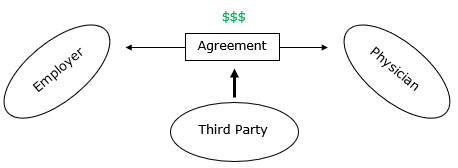

The Right Way to Implement Direct Contracting

A DC agreement should look direct—like this.

Think of it this way: Once a direct contract is in place, it should remain in place if you, the benefits adviser, were to disappear tomorrow. That requires a great deal of forethought in how the DCs are written, but it’s the right way to implement direct contracting. Establishing a DC this way maximizes and preserves value between your clients and their physicians. That, in turn, goes a long way toward demonstrating your value and commitment to transparency as an adviser.

3 Tips to Help Ensure “Direct” Contracting

Real direct contracting has the potential to revolutionize the healthcare experience for employers by stripping out third parties that don’t add value. Your job as a benefits adviser is to ensure that DC is simplified, streamlined and truly direct. Here are a few tips on doing that:

John Harvey is CEO and founder of Wincline, a fee-only benefits advisory firm in Phoenix, and brings more than a decade of experience in the industry. He is an expert at lowering costs for his clients by moving them from fully insured to self-funded.

Insurers find it difficult to manage data at rest (in databases). Now layer in all the real-time data from sensors, connected devices, etc.

Mark Breading is a partner at Strategy Meets Action, a Resource Pro company that helps insurers develop and validate their IT strategies and plans, better understand how their investments measure up in today's highly competitive environment and gain clarity on solution options and vendor selection.

Through mobile devices, people can enjoy much-needed peace of mind not only about their assets but also about items of sentimental value.

How does the industry change if companies can sell devices and toss in some insurance as a giveaway?

Back in 2015 and 2016, we heard from oh-so-many insurtechs whose business model hinged on having carriers buy their gadget and give it away to customers because of the benefits the gadget provided. This model was flawed from the outset because of the prohibition in the insurance industry against this thing called rebating.

Fast forward to 2019, and a lot of innovative companies are thinking about a similar business model, only in reverse. Instead of selling insurance and throwing in something of value, companies are considering selling something and throwing in the insurance. This time, the idea may pass muster.

The combination could be especially hard for regulators to turn down if it enhances consumer safety, which, after all, is a key goal for insurers. Let's say a transportation network company (TNC) such as Uber has a device like a two-way camera system that monitors driving behavior and offers free insurance to drivers who will install it. How does a regulator say no to safer drivers?

What if the TNC gets clever with the bookkeeping to meet regulatory requirements? Just because a driver sees herself as buying a device and getting free insurance doesn't mean the TNC has to account for revenue that way.

Regulators will face some key questions, in new forms. Does an offer represent an inducement beyond what is reasonable? Are newcomers being given an advantage over incumbents? While regulators have traditionally been more protective in personal lines than in commercial lines, figuring that businesses have more expertise at their disposal, does the digital blurring of personal/commercial boundaries change the thinking? In a world of on-demand insurance and microinsurance, where the cover is increasingly tied to a physical device, how do you separate the two?

If some sort of bundling/rebating does work this time, the changes could be profound. Buy a cellphone from T-Mobile and get a little life insurance with that. Agree to rent a property via Airbnb and get some homeowners insurance. The possibilities are endless.

In many ways, the history of the computer industry can be traced to the 1969 consent decree between IBM and the Department of Justice, which threw out the longstanding structure of the industry. In that case, IBM was no longer allowed to bundle everything—hardware, software and services—and sell only to those that would buy a whole package. The unbundling created opportunities for mainframe clones, services companies such as today's big consultants, etc., eventually including Intel, Microsoft, Google, Facebook and so on. Allowing for a rebundling of insurance could lead to similar innovation.

During the first internet boom, a Harvard Business School professor described to me what he called the Las Vegas business model—it's hard to have a normal restaurant or hotel in Las Vegas when casinos are giving away good food and rooms to entice gamblers. With the new possibilities of bundling/rebating, many in the insurance world may soon see just what the Las Vegas business model feels like.

Cheers,

Paul Carroll

Editor-in-Chief

Paul Carroll is the editor-in-chief of Insurance Thought Leadership.

He is also co-author of A Brief History of a Perfect Future: Inventing the Future We Can Proudly Leave Our Kids by 2050 and Billion Dollar Lessons: What You Can Learn From the Most Inexcusable Business Failures of the Last 25 Years and the author of a best-seller on IBM, published in 1993.

Carroll spent 17 years at the Wall Street Journal as an editor and reporter; he was nominated twice for the Pulitzer Prize. He later was a finalist for a National Magazine Award.

Tokenization is the key to significantly reducing the likelihood of a cyber event resulting in a claim.

Robin Roberson is the managing director of North America for Claim Central, a pioneer in claims fulfillment technology with an open two-sided ecosystem. As the former CEO and co-founder of WeGoLook, she grew the business to more than 45,000 global independent contractors.

Alex Pezold is co-founder of TokenEx, whose mission is to provide organizations with the most secure, nonintrusive, flexible data-security solution on the market.