Successful portfolio management is enabled by the breadth, reliability and granularity of data used. In our research to establish the portfolio management capabilities of top-performing underwriters, we found that the granularity of data drove the gap between top-quartile performance (outperformers) and bottom-quartile performance (emerging performers).

The role of the underwriter is becoming ever more sophisticated, whether considering case-level, augmented underwriting or portfolio-traded, algorithmic underwriting. There are many challenges facing underwriters as they embark on building their portfolio management capabilities, but the journey starts with an appreciation of data assets. Of course, it is not just underwriters on this journey – but also brokers, cover holders, claims professionals, reinsurers, other capital providers and investors. To gain the greatest competitive advantage, the portfolio underwriter finds like-minded individuals in the value chain.

The level of sophistication will increase in line with the degree of atomization of the risk data. The more the data can be broken down, the more opportunities for differentiation will present themselves. A topical example is how we as an industry support the climate change transition. The ability to identify the various risk features as they pertain to transition risk enables underwriters to make more nuanced decisions and to assess the impact of each decision on the wider portfolio. This also helps them demonstrate how their aggregate underwriting decisions are delivering their organization’s environmental, social and governance (ESG) goals.

Fine-tuning the data machine

Insurers have often found it challenging to create a single data repository that brings together the various sources of internal data. Historically, the focus was mostly on quotes, policies and claims. These data are now routinely being supplemented with other sources – such as operational data and routing identifiers, as well as client or market data.

However, for the most data-savvy, the data universe is being expanded further with application programming interfaces (APIs), which provide access to multiple software applications and data sets. This allows organizations to draw on the huge amounts of additional data available, such as flood areas and other weather data, distance from emergency services and crime statistics. These all provide additional insights.

Although the range of data sources is important, there are other dimensions to consider. One is the length of an insurer’s data record, which can help provide robust and relevant historical perspectives. For example, examining World Trade Center data from 2001 and subsequent years could help an insurer understand the possible market reaction following a similarly large economic event. Likewise, harnessing data from previous natural catastrophes allows an insurer to see which classes were affected by certain types of events and the extent to which these classes were affected.

As found in our benchmarking research, organizations that achieved high levels of granularity in their portfolios went on to become outperformers. In fact, every single organization that was top quartile in terms of performance was also top quartile in terms of the granularity of data. This shows that if an insurer is able to get an appropriate grip on the granularity of its data assets, this facilitates outperformance in other areas.

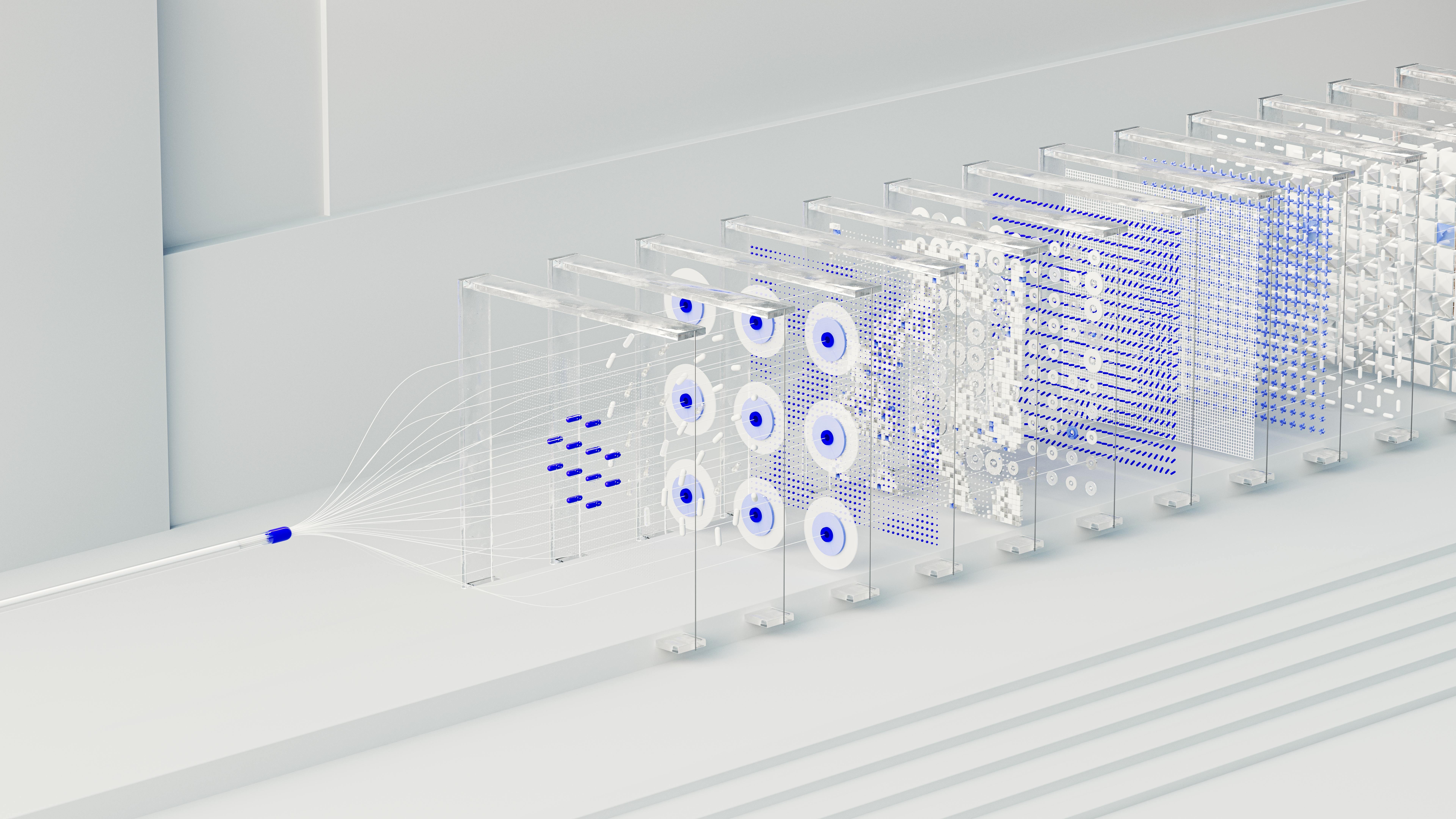

In the future, we expect the quality of calculation and decision engines to become an important factor in performance. In much the same way that the expertise of commercial underwriters is recognized today as a market differentiator, we suggest the way underwriters interact with the calculation or decision engine will set organizations apart in years to come.

A granular data asset inside the calculation engine allows the insurer to extrapolate performance at contract level, as well as run simulations at contract level to determine the optimum composition of the portfolio. The decision engine itself will also be important: Outperformance will depend on the combination of data assets with an appropriate algorithm and the skill with which this is deployed by underwriters in their trading models.

Delivering these portfolio insights to the “point of writing” sets each underwriting decision in context and determines whether it is accretive to the portfolio strategy.

The contract-level granularity can also be used to optimize capital, looking for correlations and diversification between contracts in a fast, easy way. This extends current approaches to capital optimization to add to the performance advantage on capital returns. Although common in classes that are currently considered data rich, extending the data asset makes these approaches applicable more broadly.
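As a rough illustration of the diversification point above, the following minimal sketch compares the sum of standalone contract volatilities with the volatility of the combined portfolio. It assumes each contract's loss volatility and the pairwise correlations are already known; the function names and the use of standard deviation as a stand-in for capital are assumptions made for illustration, not a description of any particular capital model.

```python
import math

def portfolio_std(stds, corr):
    """Standard deviation of total losses, given per-contract
    standard deviations and a pairwise correlation matrix."""
    n = len(stds)
    variance = sum(
        stds[i] * stds[j] * corr[i][j]
        for i in range(n)
        for j in range(n)
    )
    return math.sqrt(variance)

def diversification_benefit(stds, corr):
    """Share of standalone volatility removed by combining contracts.
    0.0 means no benefit (perfectly correlated contracts)."""
    standalone = sum(stds)
    return 1.0 - portfolio_std(stds, corr) / standalone

# Hypothetical example: two contracts with illustrative volatilities.
stds = [3.0, 4.0]
uncorrelated = [[1.0, 0.0], [0.0, 1.0]]
fully_correlated = [[1.0, 1.0], [1.0, 1.0]]

benefit_uncorrelated = diversification_benefit(stds, uncorrelated)
benefit_correlated = diversification_benefit(stds, fully_correlated)
```

In this toy case the uncorrelated pair shows a positive benefit and the fully correlated pair shows none, which is the kind of contract-level signal a more granular data asset makes visible.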

In this environment, an effective underwriter blends the ability to be data-driven, entrepreneurial and technology-proficient with the ability to build relationships and construct deals to drive profit.

Bridging the gap: underwriting and claims

There is a lot to think about when contemplating a data approach for active portfolio management. It is useful to understand how far you can analyze your exposures – to what level of detail – and assess the loss history for similar exposure types across the portfolio.

According to our benchmarking research, the ability of an organization to understand how its portfolios can be segmented (into geography, line of business, new business/renewal, etc.) was very different between the top and bottom performance quartiles in the study. This suggests that the successful atomization of the risk data can enhance performance across a wide range of portfolio management capabilities.
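To make the idea of segmentation concrete, here is a minimal sketch that groups contract-level records by any chosen combination of attributes and computes a loss ratio per segment. The field names (`geography`, `line`, `premium`, `incurred`) and the figures are hypothetical and would need to be mapped to an organization's own data model.

```python
from collections import defaultdict

def loss_ratio_by_segment(contracts, keys):
    """Group contracts by the given attribute names and return
    incurred / premium for each segment (None if premium is zero)."""
    totals = defaultdict(lambda: [0.0, 0.0])  # segment -> [premium, incurred]
    for contract in contracts:
        segment = tuple(contract[k] for k in keys)
        totals[segment][0] += contract["premium"]
        totals[segment][1] += contract["incurred"]
    return {
        segment: (incurred / premium if premium else None)
        for segment, (premium, incurred) in totals.items()
    }

# Hypothetical contract-level records.
contracts = [
    {"geography": "UK", "line": "property", "premium": 100.0, "incurred": 60.0},
    {"geography": "UK", "line": "property", "premium": 100.0, "incurred": 80.0},
    {"geography": "US", "line": "marine", "premium": 200.0, "incurred": 50.0},
]

by_geography_and_line = loss_ratio_by_segment(contracts, ["geography", "line"])
by_geography = loss_ratio_by_segment(contracts, ["geography"])
```

Because the segment is built from whichever keys are passed in, the same contract-level data can be cut by geography, line of business, new business versus renewal, or any combination, without changing the underlying records.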

The extent to which exposure and claims data are still not effectively combined and matched is surprising. It is striking how often, for example, cause codes are not collected to a sufficiently granular or consistent level to gain the most benefit from this asset.
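A simple data-quality check can show how well claims and exposure data line up. The sketch below is illustrative only: it assumes claims carry a `policy_id` and an optional `cause_code`, which are hypothetical field names, and it reports which claims cannot be matched to a known policy and which lack a cause code.

```python
def check_claims_against_exposure(policies, claims):
    """Return claim ids that match a policy, claim ids with no
    matching policy, and claim ids with a missing cause code."""
    policy_ids = {policy["policy_id"] for policy in policies}
    report = {"matched": [], "unmatched": [], "missing_cause_code": []}
    for claim in claims:
        if claim["policy_id"] in policy_ids:
            report["matched"].append(claim["claim_id"])
        else:
            report["unmatched"].append(claim["claim_id"])
        # Treat an absent, empty or None cause code as missing.
        if not claim.get("cause_code"):
            report["missing_cause_code"].append(claim["claim_id"])
    return report

# Hypothetical records for illustration.
policies = [{"policy_id": "P1"}, {"policy_id": "P2"}]
claims = [
    {"claim_id": "C1", "policy_id": "P1", "cause_code": "FLOOD"},
    {"claim_id": "C2", "policy_id": "P2", "cause_code": ""},
    {"claim_id": "C3", "policy_id": "P9"},
]

report = check_claims_against_exposure(policies, claims)
```

Tracking the size of the unmatched and missing-cause-code lists over time gives a basic measure of how consistently the claims asset is being captured.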

Closing the feedback loop between underwriting and claims is another area of performance advantage, as is the way the claims team works with reserving and underwriting to help set the underwriting strategy. Often, these processes appear to work well at a high level, but a persistent feature of the emerging performer category was how often these communities were not aligned below that level.

Outperformers in our benchmarking survey further refined their portfolio optimization by rigorously including reserving data and pricing data, as well as quote data, to improve risk selection. By making available a credible, single data source for all functional areas to interrogate and use, outperformers can create a coherent portfolio management process, while maintaining a level of granularity appropriate for all users. This results in better decisions.

An organization must also be agile and versatile enough to adapt to the changing rating environment as the relative market importance of certain rating factors shifts. This agility could allow an insurer to capitalize on its more advanced understanding of the market, either to carve out new opportunities by recognizing the changing needs of its insureds or to price the business more accurately to the underlying risk.

Reports of the underwriter’s demise greatly exaggerated

We see underwriters remaining core, but their role developing to take account of a wider set of inputs. Central to that role will be more high-quality data points to inform the underwriting decision-making process and the need to continue to interpret, challenge and, critically, integrate them. The best underwriters are able to anticipate changes in the market, and good data models will help them detect change quickly and calibrate a response.

Collection of high-quality data throughout the organization enables the underwriting decision to be traced through its stages, particularly the stages at which prices/terms are adjusted. This clear focus will either improve competitiveness or present opportunities for learning.

The good news is that collecting and analyzing data is becoming easier, quicker and cheaper. In turn, the asset and analyses become ever more valuable as our understanding of the data-rich world becomes more advanced, along with the tools available to explore and unlock it.

An enlightened view on data will be the bedrock for insurers in achieving actionable and active portfolio management. The wave of innovation we are experiencing in the London Market reinforces our conviction that portfolio management will not just be used for its own sake, but also as a gateway capability as businesses evolve to an increasingly digital operating level. Given that good data granularity is already associated with outperformance, we expect the importance of data-driven actionable portfolio management to continue to grow.