How to Measure Data Breach Costs?

A dispute between the Ponemon Institute and Verizon over how to estimate the value of a data breach may complicate the calculations.

Get Involved

Our authors are what set Insurance Thought Leadership apart.

|

Partner with us

We’d love to talk to you about how we can improve your marketing ROI.

|

Byron Acohido is a business journalist who has been writing about cybersecurity and privacy since 2004, and currently blogs at LastWatchdog.com.

Human capital is our biggest asset, and programs for leadership development can help employers attract and keep great people.

The Shift from HR to Talent Management

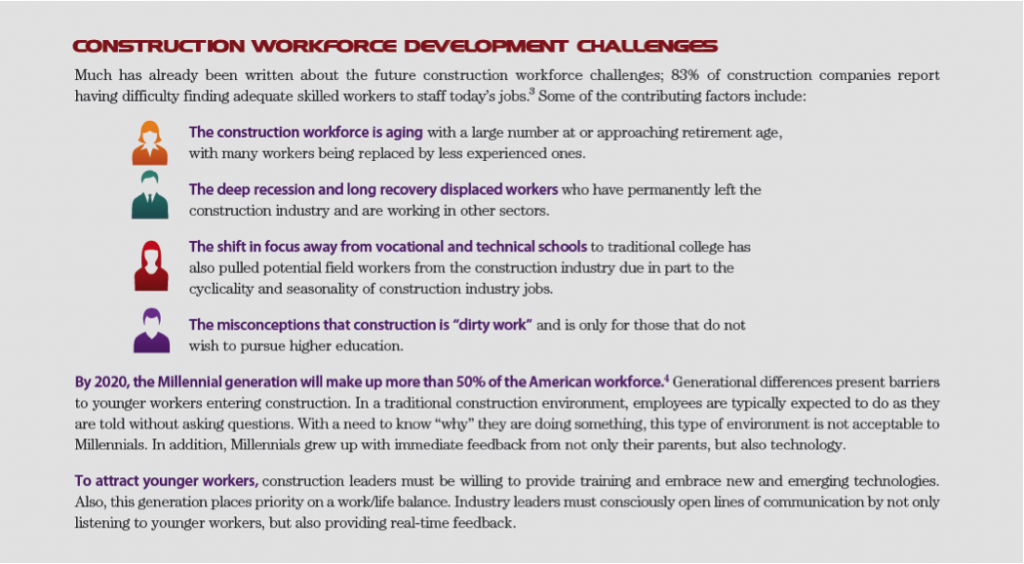

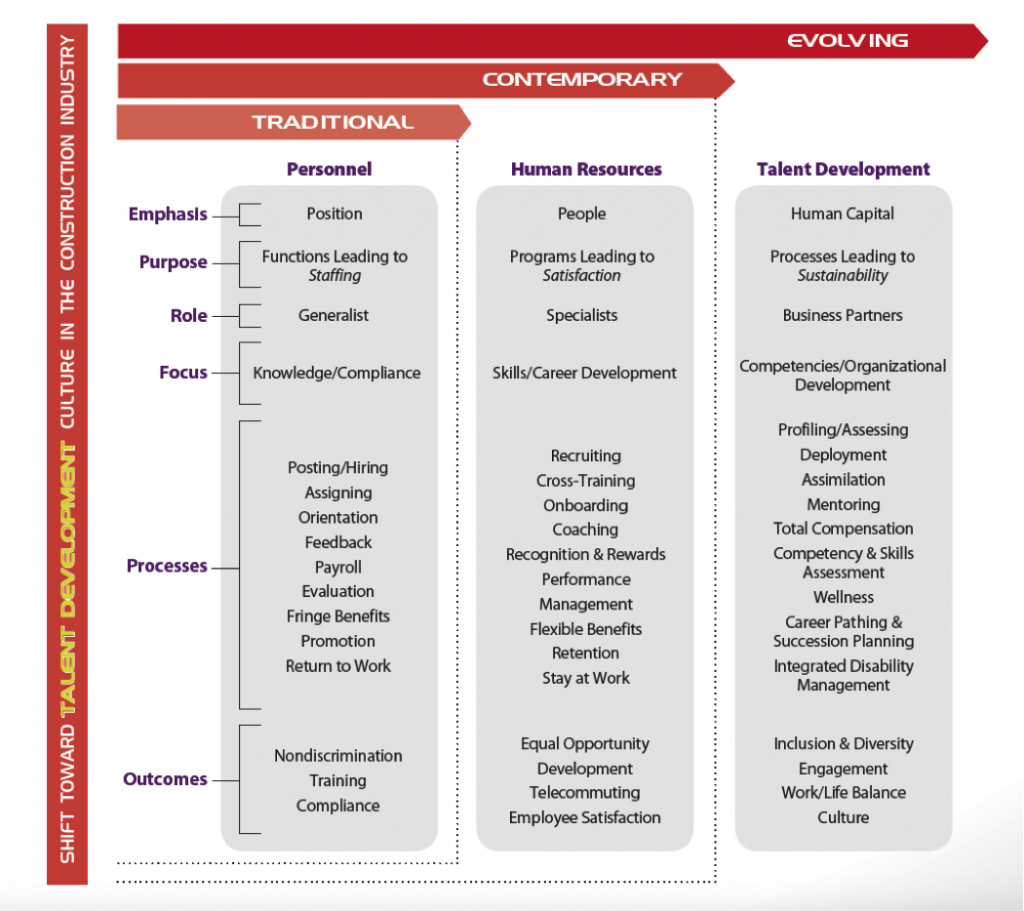

Two talent pipeline concerns are prevalent in the industry: the looming mass exodus of Baby Boomers from the construction workforce, and concerns about how to engage Millennials long enough to develop their skills and prepare them for future leadership roles.

Today, senior business leaders are looking to the HR function to provide innovative solutions to attract, retain and grow their talent. The evolution of HR to a talent management model focuses on processes leading to organizational development. As a result, the modern HR department is responsible for seven fundamental functions:

1) Compliance – Ensure regulatory and legal compliance

2) Recruitment – Find a work force

3) Employee Relations – Manage a work force

4) Retention – Maintain a work force

5) Engagement – Build an engaged work force

6) Talent Development – Create a high-performing work force

7) Strategic Leadership – Plan for a future work force

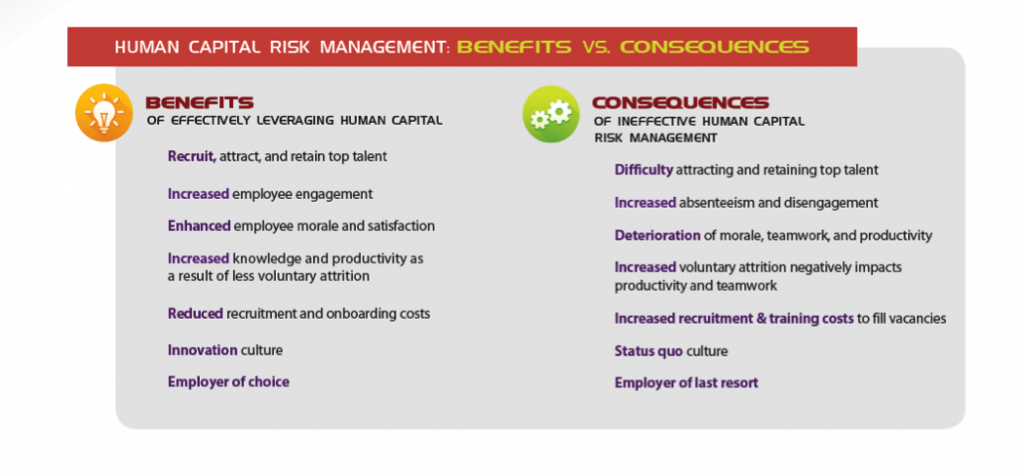

Investing in human capital makes good business sense, especially considering the costs to recruit, onboard and train a new employee. Not only is employment advertising and recruiting costly, but there are also other adverse impacts to the business. Work previously being done by the exiting employee still needs to be completed, so it falls to teammates and the supervisor.

A new employee typically does not reach full productivity until at least four to six months into her new role. In total, the lost productivity from turning over one employee amounts to at least six months.

The Link Between Employee Engagement & Business Performance

Engaged employees want both themselves and the company to succeed. However, companies often focus only on employee satisfaction, which can lead to complacency and a sense of entitlement. Employee engagement is frequently defined as the discretionary effort put forth by employees – going above and beyond to make a difference in their work. Discretionary effort is the extra effort employees want to give because of the emotional commitment they have to their organization.

Unlocking employee potential drives high performance, which in turn produces business success. However, according to research by the Employee Engagement Group, 70% of all employees from all industries are disengaged. Employees with lower engagement are four times more likely to leave their jobs than highly engaged employees. And disengaged managers are three times more likely to have disengaged employees.

Research shows employees become more engaged when business leaders are trusted, care about their employees and demonstrate competence. By working to engage their employees, contractors can improve their productivity, innovation and customer service. They can reduce incident rates and decrease voluntary attrition.

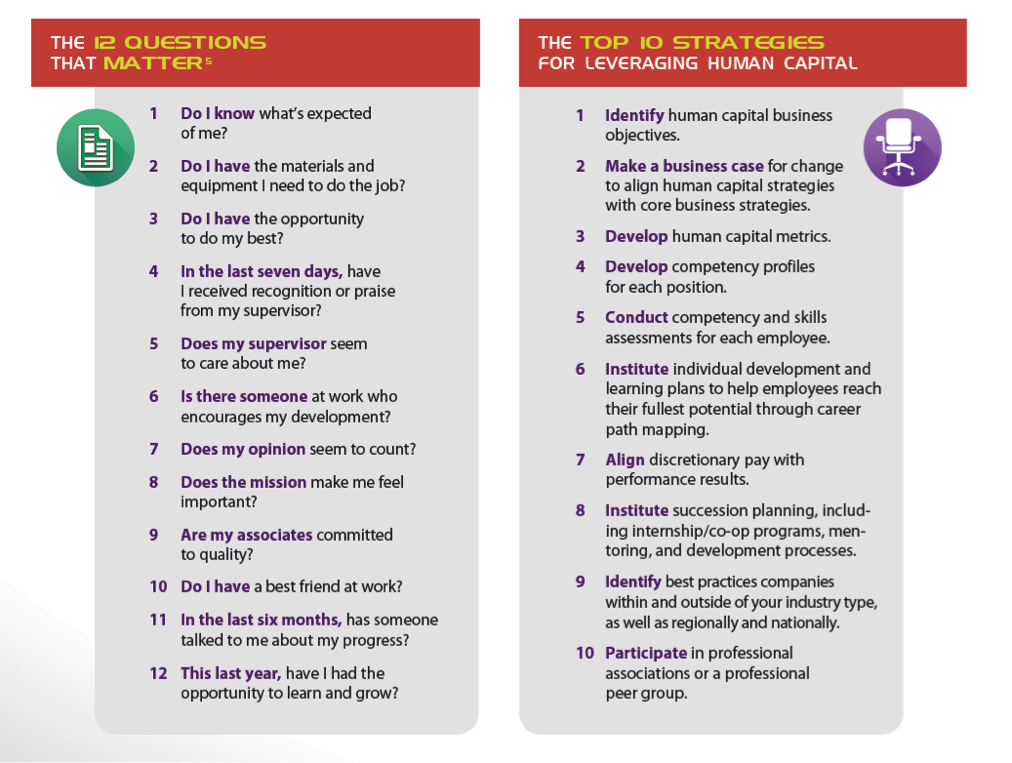

One of the earliest links between employee satisfaction and business performance appeared in First, Break All the Rules: What the World’s Greatest Managers Do Differently, which includes a cross-industry study that demonstrated a clear link between employee engagement and four business performance outcomes: productivity, profitability, employee retention and customer satisfaction.

The organizations that ranked in the top quartile of that exercise reported these performance outcomes associated with increased employee engagement:

CFMs who think strategically recognize that employee, supervisory and leadership development programs, processes and practices can provide a competitive advantage. Investments in human capital yield tangible and intangible gains that improve productivity, quality, risk, safety and financial performance. This should neither be unexpected nor surprising: after all, people are our greatest asset.

This article was co-written by Tana Blair and Tammy Vibbert. Tana Blair is responsible for organizational and leadership development at Lakeside Industries in Issaquah, WA. She can be reached at tana.blair@lakesideindustries.com. Tammy Vibbert is the director of human resources at Lakeside Industries in Issaquah, WA. She can be reached at tammy.vibbert@lakesideindustries.com.

Copyright © 2015 by the Construction Financial Management Association. All rights reserved. This article first appeared in CFMA Building Profits. Reprinted with permission.

Cal Beyer is the vice president of Workforce Risk and Worker Wellbeing. He has over 30 years of safety, insurance and risk management experience, including 24 of those years serving the construction industry in various capacities.

More people are professing loneliness in their lives, and more evidence is piling up that loneliness, like dissatisfaction in life, is a killer.

Tom Emerick is president of Emerick Consulting and cofounder of EdisonHealth and Thera Advisors. Emerick’s years with Wal-Mart Stores, Burger King, British Petroleum and American Fidelity Assurance have provided him with an excellent blend of experience and contacts.

Bonds, long a safe haven for investors, will not be for long, as interest rates rise and government issuers and mega-buyers face new risks.

You may be old enough to remember that bond investments suffered meaningfully in the late 1970s as inflationary pressures rose unabated. We are not expecting a replay of that environment, but the potential for rising inflationary expectations in a generational low-interest-rate environment is not a positive for what many consider “safe” bond investments. Quite the opposite.

As we have discussed previously, total debt outstanding globally has grown very meaningfully since 2009. In this cycle, it is the governments that have been the credit expansion provocateurs via the issuance of bonds. In the U.S. alone, government debt has more than doubled from $8 trillion to more than $18.5 trillion since 2009. We have seen similar circumstances in Japan, China and parts of Europe. Globally, government debt has grown close to $40 trillion since 2009. It is investors and in part central banks that have purchased these bonds. What has allowed this to occur without consequence so far has been the fact that central banks have held interest rates at artificially low levels.

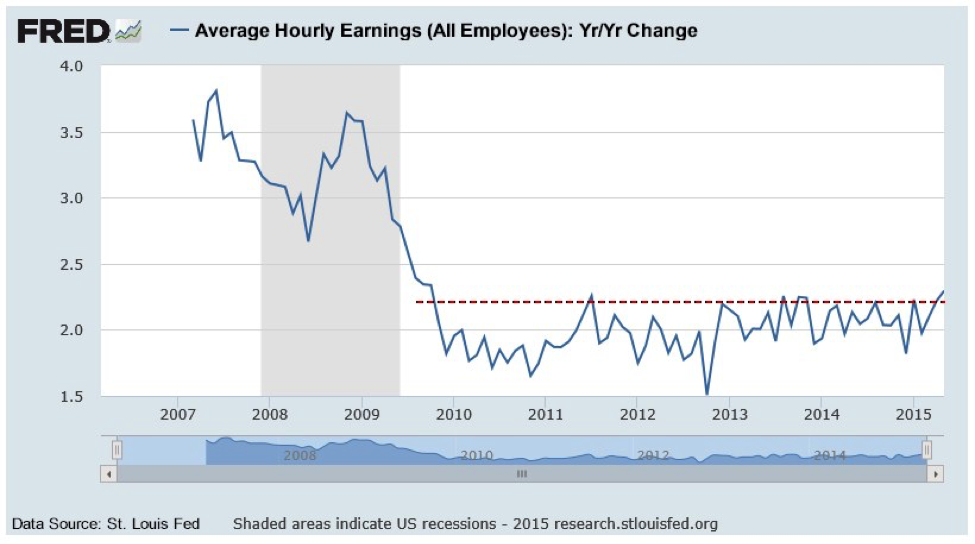

Although debt levels have surged, interest costs in 2014 were not much higher than those we saw in 2007, 2008 and 2011. Of course, this was accomplished by the U.S. Fed dropping interest rates to zero. The U.S. has been able to issue one-year Treasury bonds at a cost of 0.1% for a number of years. Zero-percent interest rates in many global markets have allowed governments to borrow more, both to pay off old loans and to finance continually expanding deficits. In late 2007, the yield on 10-year U.S. Treasuries was 4-5%. In mid-2012, it briefly dropped below 1.5%.

So here is the issue to be faced in the U.S., and we can assure you that conceptually identical circumstances exist in Japan, China and Europe. At the moment, the average interest cost on total U.S. government debt outstanding is approximately 2.2%. This number comes directly from the U.S. Treasury website and is documented monthly. At that level of debt cost, the U.S. paid approximately $500 billion in interest last year. In a rising-interest-rate environment, this number goes up. At just 4%, our interest costs alone would approach $1 trillion -- at 6%, probably $1.4 trillion in interest-only costs. It’s no wonder the Fed has been so reluctant to raise rates. Conceptually, as interest rates move higher, government balance sheets globally will deteriorate in quality (higher interest costs). Bond investors need to be fully aware of this set of circumstances and monitor it. Remember, we have not even discussed the enormity of off-balance-sheet government liabilities/commitments such as Social Security costs and exponentially growing Medicare funding to come. Again, governments globally face very similar debt and social cost spirals. The “quality” of their balance sheets will be tested somewhere ahead.
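The interest figures above can be roughly reproduced by scaling the quoted $500 billion bill in proportion to the average rate. The sketch below is only an illustration under a simplifying assumption that is not from the article: the debt stock stays constant and all of it reprices to the new rate at once. The function and constant names are hypothetical.

```python
# Figures quoted in the article (approximate).
BASE_RATE = 0.022          # average interest cost on U.S. government debt
BASE_INTEREST_BN = 500.0   # annual interest paid at that rate, in $ billions


def scaled_interest_bn(new_rate: float,
                       base_rate: float = BASE_RATE,
                       base_interest_bn: float = BASE_INTEREST_BN) -> float:
    """Scale the quoted interest bill linearly with the average rate.

    Assumption (not from the article): the debt stock is held constant
    and the entire stock reprices to new_rate immediately.
    """
    if base_rate <= 0:
        raise ValueError("base_rate must be positive")
    return base_interest_bn * new_rate / base_rate


if __name__ == "__main__":
    for rate in (0.022, 0.04, 0.06):
        print(f"{rate:.1%}: about ${scaled_interest_bn(rate):,.0f} billion a year")
```

Under that assumption, a 4% average rate gives roughly $909 billion a year and a 6% rate roughly $1,364 billion, in line with the "approach $1 trillion" and "$1.4 trillion" figures in the text. In practice, existing debt reprices only as it matures, so the increase would phase in over time.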

Our final issue of current consideration for bond investors is one of global investment concentration risk. Just what has happened to all of the debt issued by governments and corporations (using the proceeds to repurchase stock) in the current cycle? It has ended up in bond investment pools. It has been purchased by investment funds, pension funds, the retail public, etc. Don Coxe of Coxe Advisors (long-tenured on Wall Street and an analyst we respect) recently reported that 70% of total bonds outstanding on planet Earth are held by 20 investment companies. Think the very large bond houses like PIMCO, BlackRock, etc. These pools are incredibly large in terms of dollar magnitude. You can see the punchline coming, can’t you?

If these large pools ever needed to (or were instructed to by their investors) sell to preserve capital, the question becomes: sell to whom? These are behemoth holders that need a behemoth buyer. And, as is typical of human behavior, there is a very high probability that a number of these funds would be looking to sell or lighten up at exactly the same time. Wall Street runs in herds. The massive concentration risk in global bond holdings is a key watch point for bond investors that we believe is underappreciated.

Is the world coming to an end for bond investors? Not at all. What is most important is to understand that, in the current market cycle, bonds are not the safe haven investments they have traditionally been in cycles of the last three-plus decades. Quite the opposite. Investment risk in current bond investments is real and must be managed. Most investors in today’s market have no experience in managing through a bond bear market. That will change before the current cycle has ended. As always, having a plan of action for anticipated market outcomes (whether they ever materialize) is the key to overall investment risk management.

Brian Pretti is a partner and chief investment officer at Capital Planning Advisors. He has been an investment management professional for more than three decades. He served as senior vice president and chief investment officer for Mechanics Bank Wealth Management, where he was instrumental in growing assets under management from $150 million to more than $1.4 billion.

A change to federal health rules on "embedded MOOP" threatens to cost employers, and employees, hundreds of millions of dollars.

Leah Binder is president and CEO of [the Leapfrog Group](http://www.leapfroggroup.org) (Leapfrog), a national organization based in Washington, DC, representing employer purchasers of healthcare. Under her leadership, Leapfrog launched the [Hospital Safety Score](http://www.hospitalsafetyscore.org/), which assigns letter grades assessing the safety of general hospitals across the country.

Criticisms of ERM can be justified when a program is executed poorly, but issues are often taken out of the proper context.

Donna Galer is a consultant, author and lecturer.

She has written three books on ERM: Enterprise Risk Management – Straight To The Point, Enterprise Risk Management – Straight To The Value and Enterprise Risk Management – Straight Talk For Nonprofits, with co-author Al Decker. She is an active contributor to the Insurance Thought Leadership website and other industry publications. In addition, she has given presentations at RIMS, CPCU, PCI (now APCIA) and university events.

Currently, she is an independent consultant on ERM, ESG and strategic planning. She was recently a senior adviser at Hanover Stone Solutions. She served as the chairwoman of the Spencer Educational Foundation from 2006-2010. From 1989 to 2006, she was with Zurich Insurance Group, where she held many positions both in the U.S. and in Switzerland, including: EVP corporate development, global head of investor relations, EVP compliance and governance and regional manager for North America. Her last position at Zurich was executive vice president and chief administrative officer for Zurich’s world-wide general insurance business ($36 Billion GWP), with responsibility for strategic planning and other areas. She began her insurance career at Crum & Forster Insurance.

She has served on numerous industry and academic boards. Among these are: NC State’s Poole School of Business’ Enterprise Risk Management’s Advisory Board, Illinois State University’s Katie School of Insurance, Spencer Educational Foundation. She won “The Editor’s Choice Award” from the Society of Financial Examiners in 2017 for her co-written articles on KRIs/KPIs and related subjects. She was named among the “Top 100 Insurance Women” by Business Insurance in 2000.

Domiciles have no responsibility to consider federal tax issues when licensing captives -- but they should do so anyway.

A product recall can devastate a company's reputation and cut market share -- even if it is handled perfectly and the brand is a great one.

Should it really be possible to spend minutes on a continuing education course and get hours of credit? One that's open book? On ethics?

Some myths are based on misunderstanding -- some on misinformation spread by those with a vested interest in preserving a flawed system.

Bill Minick is the president of PartnerSource, a consulting firm that has helped deliver better benefits and improved outcomes for tens of thousands of injured workers and billions of dollars in economic development through "options" to workers' compensation over the past 20 years.