Over the past 20 years, many large, sleepy industries have heard a familiar story line – “you will be disrupted due to emerging technology.” Some of those who embraced technological change found massive opportunities to improve product offerings, drive higher margins and streamline customer experiences. Others, resistant to technology or unable to move fast enough, found themselves quickly replaced by upstarts.

I would argue that no industry is more on the cusp of opportunity, or more exposed to disruption, than the property and casualty (P&C) insurance sector is today. And, of the many technology breakthroughs (insurtech) available to the P&C sector, ranging from internet-based distribution to “bots” for automated customer claims, the one that both holds the most opportunity and is the most misunderstood is the Internet of Things (IoT) for the smart home and its potential to change homeowners insurance.

Put simply, IoT reflects a growing technology movement to connect any device with electronics to the internet. Once connected, these devices and their data can be accessed from anywhere in the world. On a fundamental level, this connectivity allows for remote control of devices, changing how consumers interact with their homes. But on a deeper level, this technological evolution allows for new third-party services, especially as diagnostics and performance data are made available by manufacturers.

Many sectors will benefit from this technological evolution, and as more and more home systems and appliances (everything from dishwashers and refrigerators to heating/cooling and hot water tanks) are connected, the homeowners insurance space has an incredible amount to gain.

Most consumers today have a limited understanding of the smart home road map, and many assume it starts and stops with home automation – examples such as controlling your thermostat from your phone and your lights automatically turning on when you pull the car into the driveway. However, as I have discussed in an earlier online post, even the near future of smart home is much, much more.

Soon, the smart home will optimize its performance around your behaviors and recognize when something is not right, notifying the appropriate people of problems and steps for remediation. As futuristic as this sounds, you have grown to expect your car dashboard to notify you of an engine problem or your computer to let you know of a hard drive issue – these indications are nothing short of what we expect from products today and are similarly available for your home. Likewise, home systems and appliances can be carefully watched by computers capable of instantaneously notifying you, or third parties, of problems.

As huge beneficiaries of this technology, P&C insurers should be rushing to help their customers embrace it.

See also: How Smart Is a ‘Smart’ Home, Really?

Here, at a high level, are some of the ways home insurers stand to benefit:

1) Early Peril Detection Leading to Loss Avoidance – already in-market today. There are many IoT devices capable of sensing almost all of the perils associated with homeowner loss: theft, water, fire, even destructive weather such as hail and wind. Of course, minimizing loss from these perils requires action, but earlier detection helps homeowners, emergency responders and authorized third parties to respond faster, minimizing loss.

How many times have we heard stories about someone coming home from a vacation to find that a pipe had burst and mold had grown for days, or that a fire had burned for hours in an unoccupied home? With devices such as remote locks and wireless cameras, you could even imagine a world where a service technician, like a plumber, could enter your home in an emergency even in your absence and could be monitored while there.

Beyond notification, devices themselves will also soon have the intelligence to respond. For instance, a hot water tank on the verge of rupture will not only notify the owner, it will also shut off its water intake and self-drain.

2) Claims Processing – Precision data from sensors is at the core of IoT innovation. In the home, practically all sensors take continual readings and put time/date stamps on data points such as temperature, moisture and motion. As events happen, data is essentially cataloged for consumption by other systems, such as claims.

It is not unreasonable to expect that this sensor data will soon be used to validate or measure loss automatically. And first notice of loss (FNOL) moves from being the responsibility of the claimant to an automated and sophisticated process where all parties are better served and better protected.
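To make the idea of sensor-driven FNOL concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Reading` and `LossNotice` record shapes, the `draft_fnol` function and the 0.8 moisture threshold are hypothetical and do not come from any insurer's or vendor's system. It shows only how timestamped readings could be turned into a draft notice with supporting evidence attached.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Reading:
    """One timestamped data point from a home sensor (hypothetical schema)."""
    sensor_id: str
    kind: str          # e.g. "moisture", "temperature", "motion"
    value: float
    taken_at: datetime


@dataclass(frozen=True)
class LossNotice:
    """A draft first notice of loss built from sensor data (hypothetical)."""
    sensor_id: str
    peril: str
    detected_at: datetime
    evidence: tuple


# Assumed threshold: a relative moisture reading (0 to 1) that suggests a leak.
MOISTURE_ALERT = 0.8


def draft_fnol(readings: Iterable[Reading]) -> Optional[LossNotice]:
    """Return a draft notice for the earliest moisture reading over the threshold."""
    ordered = sorted(readings, key=lambda r: r.taken_at)
    for i, r in enumerate(ordered):
        if r.kind == "moisture" and r.value >= MOISTURE_ALERT:
            # Keep the triggering reading plus up to two readings before it.
            evidence = tuple(ordered[max(0, i - 2): i + 1])
            return LossNotice(r.sensor_id, "water", r.taken_at, evidence)
    return None
```

In practice, a draft like this would likely go to the homeowner or an adjuster for confirmation rather than open a claim on its own.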

3) Fraud Analysis – The very data used to facilitate FNOL will also serve as a record-of-truth in claims analysis. Machine learning algorithms will take data from these sensors and dramatically improve models for predictive loss behavior. Just as consumers have credit scores or driving records, it’s not hard to see how home data will one day drive home safety assessments.

Probability of loss based on behavior will be a front-line indicator for likelihood of fraud. Additionally, data from these sensors, uploaded to the cloud typically at the time of capture, serves as the immutable record of truth when loss occurs. Be it rapid increases in temperature or moisture detection, re-creating how, when and where a loss started and ended will no longer be an exercise in on-site investigation but rather a review of clear data points, making the process easier and more honest for everyone.
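As a rough illustration of re-creating when a loss started and ended from data points alone, the sketch below scans a temperature series for rapid rises. The `loss_window` function, the sample format and the 5 degrees C per minute cutoff are assumptions chosen for the example, not an established industry method.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Sample = Tuple[datetime, float]  # (timestamp, temperature in degrees C)

# Assumed cutoff: a rise at or above this many degrees C per minute is "rapid".
RAPID_RISE_PER_MIN = 5.0


def loss_window(samples: List[Sample]) -> Optional[Tuple[datetime, datetime]]:
    """Return (start, end) of the span in which temperature rose rapidly.

    start is the timestamp just before the first rapid rise and end is the
    timestamp closing the last rapid rise. Returns None if none is found.
    """
    ordered = sorted(samples)
    start = end = None
    for (t0, v0), (t1, v1) in zip(ordered, ordered[1:]):
        minutes = (t1 - t0).total_seconds() / 60
        if minutes <= 0:
            continue  # skip duplicate timestamps
        if (v1 - v0) / minutes >= RAPID_RISE_PER_MIN:
            if start is None:
                start = t0
            end = t1
    return (start, end) if start is not None else None


if __name__ == "__main__":
    # Illustrative data only: one reading per minute from a single sensor.
    t = datetime(2024, 1, 1, 12, 0)
    temps = [21.0, 21.5, 30.0, 45.0, 46.0]
    series = [(t + timedelta(minutes=i), v) for i, v in enumerate(temps)]
    print(loss_window(series))
```

A real system would need to combine several sensors and filter out noise, but the point stands: the timeline comes from the recorded data rather than from an on-site reconstruction.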

I am willing to bet that the mere knowledge that sensor data is collected will, in itself, begin to reduce fraudulent claims.

Of course, with all of this progress, there are considerations. We are on the frontier of a new world, and, as with many technology advancements, the true impact is hard for many to grasp.

The industry needs to think through key implications:

Consumer Privacy – As with all things in life, there needs to be balance between what you give up and what you get. Privacy is a hot topic right now, but the reality is that every day people choose to opt into programs that consume their data in return for benefit.

Transparency and parity are critical in these transactions – e.g., “You are letting us know about something about you in return for something else of this value.” When clearly presented with the facts, many consumers will willingly give data to get better service and lower premiums.

Security – Of course there are security risks with devices being connected to the internet, and they need to be thoroughly protected. But the risks are manageable. Professional organizations focused on IoT have large security staffs trained to ensure that consumers and businesses using IoT data are protected.

Data Consumption – Even in an industry as sophisticated at using data as the P&C space is, the capabilities to ingest this level of data likely do not yet exist. Organizations will need to assess which data is the low-hanging fruit for their businesses and build from there. Ultimately, new partnerships in technology need to be built to ensure that data is provided in a manageable form to the insurer.

See also: What Smart Speakers Mean for Insurtech

For those of you feeling these concepts are futuristic – they are not. From a technology perspective, everything discussed is in-market today, at least in its early form. And while many IoT products arguably have a way to go before being truly ready for important insurance applications, the process is aligned with a fairly typical technology life cycle.

Partnering in these early stages will help IoT vendors and insurers ensure that product features will satisfy the requirements of the larger market need. In other words, when the insurance market gets its arms around true requirements for IoT and helps create the business case for mass consumer adoption – the Internet of Things market will respond, and quickly.

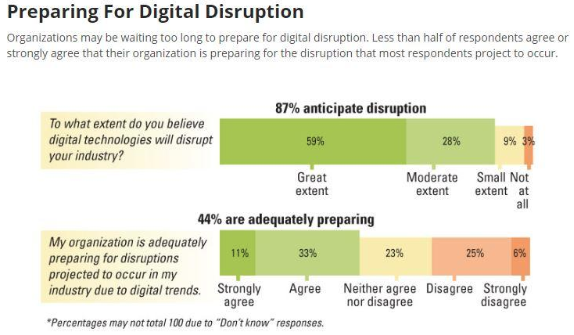

Source: MIT Sloan Management Review

So, why isn’t adoption of digital technologies a priority for more small- to medium-sized insurers? While many see the opportunities presented by digital technologies, perhaps they don’t believe the likelihood is high that digital will actually disrupt their own organization. But the authors of the research note that, if digital technologies represent an opportunity for your organization, they also represent a threat for your competitors — and vice versa.

I get it, change is hard… but the argument, “we are not in a financial position to prioritize” is irrelevant to the discussion of digital technology investments. Competitors aren’t waiting for your company to be in a better “financial position” before they act. Moreover, at some point in the coming years, insurers will need to replace their growing ranks of retirement-age employees with a younger, more tech-savvy labor force. And in a war for the best talent, the A and B players have absolutely no desire to work on outdated systems. So, what does that mean for the future of your company?

See also: Digital Insurance, Anyone?

Just remember, technology is an accelerator for your company and your staff. In other words, the more digital technologies that are put into play, the greater and faster the return. Those insurers that ignore its call will fall further and further behind until they reach the tipping point and slowly fade away. Remember what happened to Blockbuster Video when it failed to adapt in a time of digital change. Don’t be a Blockbuster in a Netflix world.

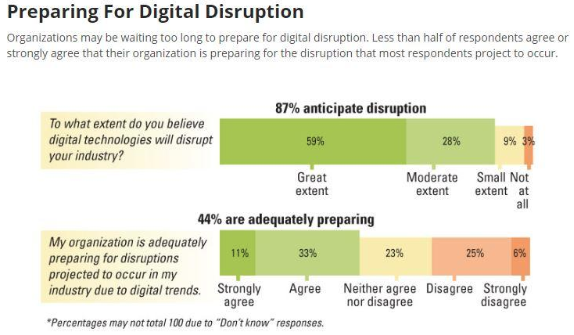

Source: MIT Sloan Management Review
