Long-time Costa Rican National Champion Bernal Gonzalez told a very young me in 1994 that the world’s best chess-playing computer wasn’t quite strong enough to be among the top 100 players in the world.

Technology can advance exponentially, and just three years later world champion Garry Kasparov was defeated by IBM’s chess-playing supercomputer Deep Blue. But chess is a game of logic where all potential moves are sharply defined and a powerful enough computer can simulate many moves ahead.

Things got much more interesting in 2011, when IBM’s Jeopardy-playing computer Watson defeated Ken Jennings, who held the record of winning 74 Jeopardy matches in a row, and Brad Rutter, who has won the most money on the show. Winning at Jeopardy required Watson to understand clues in natural spoken language, learn from its own mistakes, buzz in and answer in natural language faster than the best Jeopardy-playing humans. According to IBM, “more than 100 different techniques are used to analyze natural language, identify sources, find and generate hypotheses, find and score evidence and merge and rank hypotheses.” Now that’s impressive, and much more worrisome for those employed as knowledge workers.

What do game-playing computers have to do with white collar, knowledge jobs? Well, Big Blue didn’t spend $1 billion developing Watson just to win a million bucks playing Jeopardy. It was a proof of concept and a marketing move. A computer that can understand and respond in natural language can be adapted to do things we currently use white collar, educated workers to do, starting with automating call centers and, sooner rather than later, moving on up to more complex, higher-level roles, just like we have seen with automation of blue collar jobs.

In the four years since its Jeopardy success, Watson has continued advancing and is now being used for legal research and to help hospitals provide better care. And Watson is just getting started. Up until very recently, the cost of using this type of technology was in the millions of dollars, making it unlikely that any but the largest companies could make the business case to replace knowledge jobs with AIs (artificial intelligence). In late 2013, IBM put Watson “on the cloud,” meaning that you can now rent Watson time without having to buy the very expensive servers.

Watson is cool but requires up-front programming of apps for very specific activities and, while incredibly smart, lacks any sort of emotional intelligence, making it uncomfortable for regular people to deal with it. In other words, even if you spent the millions of dollars to automate your call center with Watson, it wouldn’t be able to connect with your customer, because it has no sense of emotions. It would be like having Data answering your phones.

Then came Amelia…

Amelia

Amelia is an AI platform that aims to automate business processes that up until now had required educated human labor. She’s different from Watson in many ways that make her much better-suited to actually replace you at the office. According to IPsoft, Amelia aims at working alongside humans to “shoulder the burden of tedious, often laborious tasks.”

She doesn’t require expensive up-front programming to learn how to do a task and is hosted on the cloud, so there is no need to buy million-dollar servers. To train her, you feed her your entire set of employee training manuals, and she reads and digests them in a matter of seconds. Just upload the text files, and she can grasp the implications and apply logic to make connections between the concepts. Once she has that, she can start working customer emails and phone calls, recognize what she doesn’t know, and search the Internet and the company’s intranet to find an answer. If she can’t find an answer, she’ll transfer the customer to a human employee for help. You can even let her listen to any conversations she doesn’t handle herself, and she learns how to do the job from the existing staff, like a new employee would, except far faster and with perfect memory. She is also fluent in 20 languages.

Like Watson, Amelia learns from every interaction and builds a mind-map that eventually is able to handle just about anything your staff handled before. Her most significant advantage is that Amelia has an emotional component to go with her super brains. She draws on research in the field of affective computing, “the study of the interaction between humans and computing systems capable of detecting and responding to the user’s emotional state.” Amelia can read your facial expressions, gestures, speech and even the rhythm of your keystrokes to understand your emotional state, and she can respond accordingly in a way that will make you feel better. Her EQ is modeled in a three-dimensional space of pleasure, arousal and dominance through a modeling system called PAD. If you’re starting to think this is mind-blowing, you are correct!
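To make the PAD idea a little more concrete, here is a minimal sketch of an emotional state represented as a point in a three-dimensional pleasure, arousal and dominance space. This is not IPsoft's implementation, which is not public. The `PADState` class, the `nearest_emotion` helper, the -1 to 1 scale and the reference coordinates are all illustrative assumptions, chosen only to show how a reading could be matched to the closest labeled emotion.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PADState:
    """A point in pleasure/arousal/dominance space (assumed scale: -1 to 1)."""
    pleasure: float
    arousal: float
    dominance: float

    def distance_to(self, other: "PADState") -> float:
        # Straight-line (Euclidean) distance between two emotional states.
        return math.dist(
            (self.pleasure, self.arousal, self.dominance),
            (other.pleasure, other.arousal, other.dominance),
        )


# Hypothetical reference points; the numbers are made up for illustration.
REFERENCE_EMOTIONS = {
    "content": PADState(0.7, -0.3, 0.3),
    "excited": PADState(0.7, 0.7, 0.4),
    "angry": PADState(-0.6, 0.7, 0.5),
    "anxious": PADState(-0.6, 0.6, -0.6),
    "bored": PADState(-0.4, -0.7, -0.3),
}


def nearest_emotion(state: PADState) -> str:
    """Return the label of the reference emotion closest to `state`."""
    return min(
        REFERENCE_EMOTIONS,
        key=lambda name: state.distance_to(REFERENCE_EMOTIONS[name]),
    )


# A caller who is displeased, agitated and feeling in control of the conversation.
caller = PADState(pleasure=-0.5, arousal=0.8, dominance=0.6)
print(nearest_emotion(caller))
```

In this toy version, the third axis is what separates an angry caller from an anxious one: both are unhappy and agitated, but they differ in how much control they feel they have.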

The magic is in the context. Instead of deciphering words like insurance jargon when a policyholder calls in to add a vehicle or change an address, IPsoft explains that Amelia will engage with the actual question asked. For example, Amelia would understand requests that are phrased differently but mean essentially the same thing: “My address changed” and “I need to change my address.” Or, “I want to increase my BI limits” and “I need to increase my bodily injury limits.”

Amelia was unveiled in late 2014, after a secretive 16-year-long development process, and is now being tested in the real world at companies like Shell Oil, Accenture, NTT Group and Baker Hughes on a variety of tasks, from overseeing a help desk to advising remote workers in the field.

Chetan Dube, long-time CEO of IPsoft, Amelia’s creator, was interviewed by Entrepreneur magazine:

“A large part of your brain is shackled by the boredom and drudgery of everyday existence. [...] But imagine if technology could come along and take care of all the mundane chores for you, and allow you to indulge in the forms of creative expression that only the human brain can indulge in. What a beautiful world we would be able to create around us.”

His vision sounds noble, but the reality is that most of the employees whose jobs get automated away by Watson, Amelia and their successors won’t be able to make the move to higher-level, less mundane and less routine tasks. If you think about it, a big percentage of white collar workers have largely repetitive, service-type jobs. And even those of us in higher-level roles will eventually get automated out of the system; it’s a matter of time, and less time than you think.

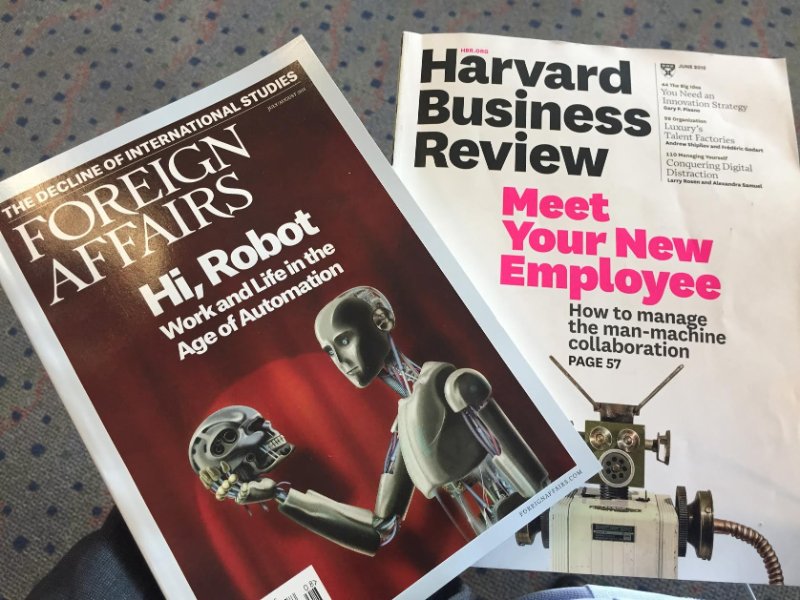

I'm not saying that the technology can or should be stopped; that’s simply not realistic. I am saying that, as a society, there are some important conversations we need to start having about what we want things to look like in 10 to 20 years. If we don’t have those discussions, we are going to end up in a world with very high unemployment, where the very few people who hold large capital and those with the STEM skills to design and run the AIs will do very well, while the other 80-90% of us could potentially be unemployable. This is truly scary stuff: McKinsey predicts that by 2025 technology will take over tasks currently performed by hundreds of millions of knowledge workers. This is no longer science fiction.

As humans, our brains evolved to work linearly, and we have a hard time understanding and predicting change that happens exponentially. For example, merely 30 years ago, it was unimaginable that most people would walk around with a device in their pockets that could perform more sophisticated computing than computers at MIT in the 1950s. The huge improvement in power is a result of exponential growth of the kind explained by Moore’s law, which is the prediction that the number of transistors that fit on a chip will double every two years while the chip's cost stays constant. There is every reason to believe that AI will see similar exponential growth. Just five years ago, the world’s top AI experts at MIT were confident that cars could never drive themselves, and now Google has proven them wrong. Things can advance unimaginably fast when growth becomes exponential.
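To see why exponential growth is so hard to intuit, it helps to run the numbers. The short sketch below takes the two-year doubling period from Moore’s law as stated above and compares it with a linear trend that adds one starting unit every two years. The function names and the linear baseline are my own illustrative choices, and real chips have not tracked the rule this exactly.

```python
def exponential_growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """How many times larger a quantity is after `years` if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def linear_growth_factor(years: float, step_period: float = 2.0) -> float:
    """Comparison baseline: add one starting unit every `step_period` years instead of doubling."""
    return 1 + years / step_period


# Print both trends side by side for a few time horizons.
for years in (2, 10, 20, 30):
    exp_factor = exponential_growth_factor(years)
    lin_factor = linear_growth_factor(years)
    print(f"{years:>2} years: exponential x{exp_factor:,.0f}, linear x{lin_factor:,.0f}")
```

Over 30 years, doubling every two years means 15 doublings, or a factor of 32,768, while the linear trend reaches only 16 times the starting point. That gap is what our linear intuition tends to miss.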

Some of the most brilliant minds of our times are sounding the alarm bells.

Elon Musk said, “I think we should be very careful about AI. If I had to guess, our biggest existential threat is probably that we are summoning the demon.”

Stephen Hawking warned, “The development of full artificial intelligence could spell the end of the human race.”