A Crucial Role for Annuity 'Structures'

Given the tax-exempt status and safety of structured annuity settlements, is any post-injury financial plan complete without one?

Get Involved

Our authors are what set Insurance Thought Leadership apart.

|

Partner with us

We’d love to talk to you about how we can improve your marketing ROI.

|

Ken Paradis is CEO of Chronovo, a new force in the structured settlement annuity marketplace. Unwavering in his conviction that critical performance metrics, innovative product design, evangelical customers and inspired employees all fuel each other, Paradis has founded and led high-growth, niche-defining companies in the property and casualty industry.

Real-time video is making an impact, but video doesn't have to be live to make claims processing more efficient and satisfying to customers.

Alex Polyakov is CEO of Livegenic, which delivers real-time video solutions to help organizations reduce costs, improve customer satisfaction and mitigate risks. Polyakov has more than 15 years of experience designing enterprise solutions in many industries, including IT, government, insurance, pharmaceuticals and talent management.

Amid all the volatility in prices of financial assets, it's important to understand the long-term trends -- which could favor stocks.

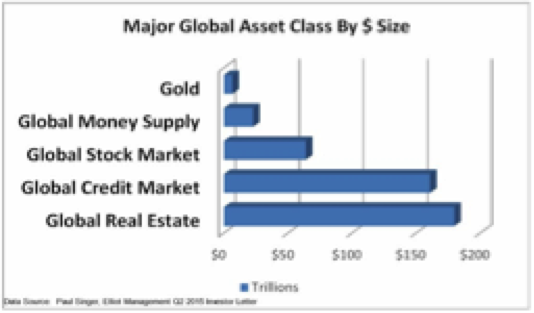

One of the key takeaways from this data is that the global credit/bond market is about 2.5 times as large as the global equity market. We have expressed our longer-term concern over bonds, especially government bonds. After 35 years of a bull market in bonds, will we have another 35 years of such good fortune? Not a chance. With interest rates at generational lows, the 35-year bond bull market isn’t in the final innings; it’s already in extra innings, thanks to the money-printing antics of global central banks. So as we think ahead, we need to contemplate a very important question: What happens to this $160 trillion-plus investment in the global bond market when the 35-year bond bull market breathes its last and the downside begins?

One answer is that some of this capital will go to what is termed “money heaven.” It will never be seen again; it will simply be lost. Another possible outcome is that the money reallocates to an alternative asset class. Could 5% of the total bond market move to gold? Probably not, as this is a sum larger than total global gold holdings. Will it move to real estate? Potentially, but real estate is already the largest asset class in nominal dollar size globally. Could it reallocate to stocks? This is another potential outcome. Think about pension funds that are not only underfunded but have specific rate-of-return mandates. Can they stand there and watch their bond holdings decline? Never. They will be forced to sell bonds and reallocate the proceeds. The question is where. Other large institutional investors face the same issue. Equities may be a key repository in a world where global capital is seeking safety and liquidity. Again, only a potential outcome.
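To put the 5% question in proportion, here is a minimal sketch of the arithmetic, using only the two figures quoted above (a bond market of at least $160 trillion and a bond-to-equity size ratio of about 2.5). The function and variable names are illustrative, and treating "$160 trillion-plus" as exactly $160 trillion is an assumption that understates the totals.

```python
# Figures taken from the text; "$160 trillion-plus" is treated as a floor.
BOND_MARKET_TRILLIONS = 160.0
BOND_TO_EQUITY_RATIO = 2.5  # bond market is "about 2.5 times" the equity market


def implied_equity_market(bond_market: float, ratio: float) -> float:
    """Equity market size implied by the bond market size and the bond/equity ratio."""
    return bond_market / ratio


def reallocated_amount(bond_market: float, share: float) -> float:
    """Amount (in trillions) leaving bonds if the given share of the market is sold."""
    return bond_market * share


equity_market = implied_equity_market(BOND_MARKET_TRILLIONS, BOND_TO_EQUITY_RATIO)
shift = reallocated_amount(BOND_MARKET_TRILLIONS, 0.05)  # the 5% case from the text
shift_vs_equities = shift / equity_market

print(f"Implied equity market: ${equity_market:.0f} trillion")
print(f"5% of the bond market: ${shift:.0f} trillion")
print(f"That shift relative to equities: {shift_vs_equities:.1%}")
```

Under these assumptions, a 5% shift out of bonds is roughly $8 trillion, or about 12.5% of the implied $64 trillion equity market, which is why even a marginal reallocation could matter for stock prices.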

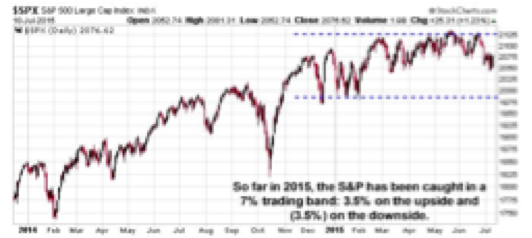

We simply need to watch the movement of global capital and how it is expressed in the forward prices of these key global asset classes. Watching where the S&P ultimately moves out of the tight trading range it has held this year will be very important. It will signal where global capital is moving at the margin among the major global asset classes.

Checking our emotions at the door is essential, as is not getting caught up in the ups and downs of day-to-day price movement. Putting price volatility and market movement into a much broader perspective allows us to step back and see the larger global picture of capital movement.

These are the important issues, not where the S&P closes tomorrow, or the next day. Or, for that matter, the day after that.

Brian Pretti is a partner and chief investment officer at Capital Planning Advisors. He has been an investment management professional for more than three decades. He served as senior vice president and chief investment officer for Mechanics Bank Wealth Management, where he was instrumental in growing assets under management from $150 million to more than $1.4 billion.

The short answer is: Just say no -- in a zero-tolerance policy, because safety must be paramount even as states legalize recreational marijuana.

Dan Holden is the manager of corporate risk and insurance for Daimler Trucks North America (formerly Freightliner), a multinational truck manufacturer with total annual revenue of $15 billion. Holden has been in the insurance field for more than 30 years.

A detailed analysis of the closed drug formulary in Texas suggests that the benefits that proponents claim would not materialize in California.

John Bobik has actively participated in establishing disability insurance operations during an insurance career spanning 35 years, with emphasis on workers' compensation in the U.S., Argentina, Hong Kong, Australia and New Zealand.

Monitoring your credit report is an important way to detect identity theft, but it is reactive, not proactive. Protection needs to be more aggressive.

Brad Barron founded CLC in 1986 as a manufacturer of various types of legal and financial benefit programs. CLC's programs have become the legal, identity-protection and financial assistance component for approximately 150 employee-assistance programs and their more than 15,000 employer groups.

Don't think about just using insurance technology to connect to your customers. Think about connecting your risk management team.

Chris Cheatham is the CEO of [Riskgenius](http://riskgenius.com/), a collaborative contract review application for the insurance industry. Cheatham previously worked as an insurance attorney in Washington, D.C., before deciding to solve the messy document problems he was encountering.

Insurers must follow the lead of airlines and retailers and use quote data to fine-tune prices and features based on each customer's situation.

John Johansen is a senior vice president at Majesco. He leads the company's data strategy and business intelligence consulting practice areas. Johansen consults to the insurance industry on the effective use of advanced analytics, data warehousing, business intelligence and strategic application architectures.

When insurers get things right and captivate customers, they see a 34% increase in customer retention and a 37% rise in satisfaction.

Bhuvan Thakur is a vice president within the Enterprise Cloud Services business for Capgemini in North America, UK and Asia-Pacific. Thakur has more than 18 years of consulting experience, primarily in the customer relationship management (CRM) and customer experience domain.

Jeff To is the insurance leader for Salesforce. He has led strategic innovation projects in insurance as part of Salesforce's Ignite program. Before that, To was a Lean Six Sigma black belt leading process transformation and software projects for IBM and PwC's financial services vertical.

Market cycles are diminishing greatly because sophisticated analytics let insurers price risks individually, not based on market psychology.

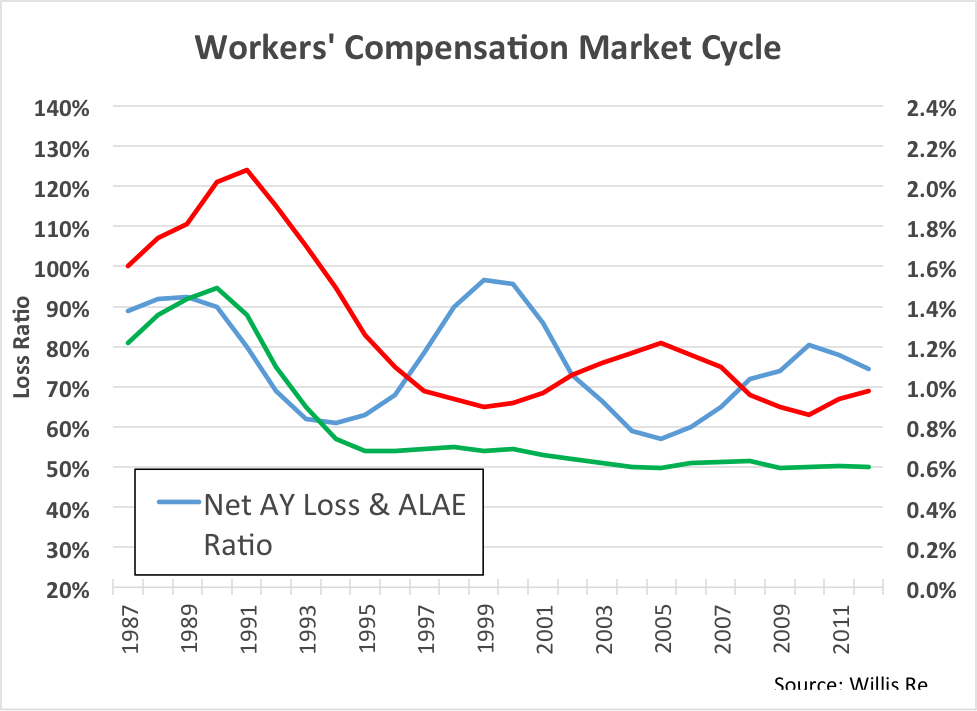

This is an aggregate view of the work comp industry results. The blue line is accident year loss ratio, 1987 to present. See the volatility? Loss ratio is bouncing up and down between 60% and 100%.

Now look at the red line. This is the price line. We see volatility in price, as well, and this makes sense. But what’s the driver here? Is price reacting to loss ratio, or are movements in loss ratio a result of changes in price?

To find the answer, look at the green line. This is the historic loss rate per dollar of payroll. Surprisingly, this line is totally flat from 1995 to the present. In other words, on an aggregate basis, there has been no fundamental change in loss rate for the past 20 years. All of the cycles in the market are the result of just one thing: price movement.

Unfortunately, it appears we have done this to ourselves.
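The relationship among the three lines can be sketched numerically. Loss ratio is losses divided by premium, so if both are expressed per unit of payroll, a flat loss rate divided by a moving price produces a moving loss ratio. The rates below are hypothetical values chosen only to reproduce the 60%-to-100% range described above; they are not taken from the chart.

```python
def loss_ratio(loss_rate: float, premium_rate: float) -> float:
    """Loss ratio when losses and premium are both stated per $100 of payroll."""
    return loss_rate / premium_rate


# Hypothetical: losses per $100 of payroll, held constant (the flat green line).
LOSS_RATE = 1.20

# Hypothetical: premium charged per $100 of payroll at different cycle phases
# (the moving red line).
PREMIUM_RATES = {
    "hard market": 2.00,
    "mid-cycle": 1.50,
    "soft market": 1.20,
}

# The loss ratio (the blue line) moves only because price moves.
ratios = {phase: loss_ratio(LOSS_RATE, rate) for phase, rate in PREMIUM_RATES.items()}

for phase, ratio in ratios.items():
    print(f"{phase}: premium rate {PREMIUM_RATES[phase]:.2f}, loss ratio {ratio:.0%}")
```

With the loss rate fixed, the loss ratio swings from 60% to 100% purely because the premium rate falls, which is the pattern the aggregate data suggests.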

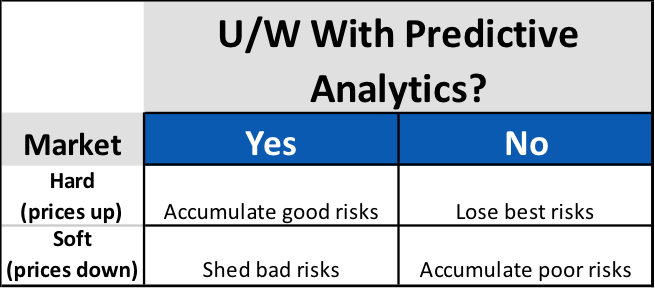

Surprisingly, for carriers using predictive analytics, market cycles present an opportunity to increase profitability, regardless of cycle direction. For the unfortunate carriers not using predictive analytics, the onset of each new cycle phase presents a new threat to portfolio profitability.

Simply accepting that profitability will wax and wane with market cycles isn’t keeping up with the times. Though the length and intensity may change, markets will continue to cycle. Sophisticated carriers know that these cycles present not a threat to profits, but new opportunities for differentiation. Modern approaches to policy acquisition and retention are much more focused on individual risk pricing and selection that incorporate data analytics. The good news is that these data-driven carriers are much more in control of their own destiny, and less subject to market fluctuations as a result.

Bret Shroyer is the solutions architect at Valen Analytics, a provider of proprietary data, analytics and predictive modeling to help all insurance carriers manage and drive underwriting profitability. Shroyer identifies practical solutions for client success and opportunities to bring tangible benefits from technical modeling.