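How Machine Learning Changes the Game

Machine learning will improve compliance, cost structures and competitiveness, but insurers must overcome cultural obstacles.

A new playing field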

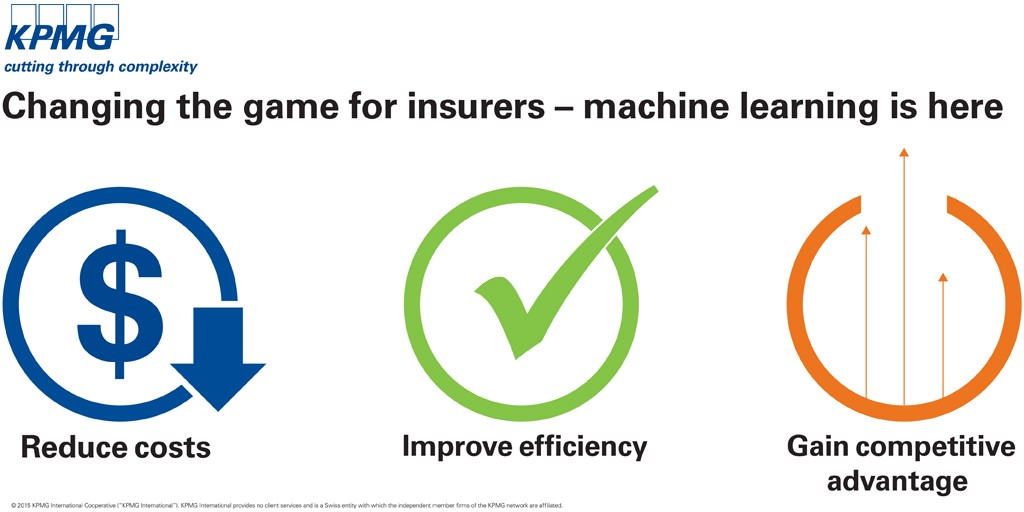

For the insurance sector, we see machine learning as a game-changer. Most insurance organizations today are focused on three main objectives: improving compliance, improving cost structures and improving competitiveness. It is not difficult to envision machine learning forming at least part of the answer to all three.

Improving compliance: Today’s machine learning algorithms, techniques and technologies can work with much more than structured data such as facts and figures. They can also analyze information in images, video and recorded voice conversations. Insurers could, for example, use machine learning to monitor and better understand interactions between customers and sales agents, strengthening their controls against the mis-selling of products.

Improving cost structures: Because a large portion of an insurer’s cost structure is devoted to human resources, any shift toward automation should deliver significant savings. Our experience working with insurers suggests that, by using machines instead of humans, insurers could cut claims processing time from several months to a matter of minutes. Machine learning is also often more accurate than humans, so insurers could reduce the number of incorrect denials that trigger appeals and that they may ultimately need to pay out anyway.

Improving competitiveness: While lower costs and greater efficiency can certainly create competitive advantage, machine learning can give insurers an edge in many other ways. Many insurance customers, for example, may be willing to pay a premium for a product that guarantees a frictionless claim payout, without the hassle of calling the claims team. Insurers may also find that they can strengthen customer loyalty by reducing re-enrollment and client onboarding processes to just a handful of questions.

Overcoming cultural differences

Given this potential, it is surprising that insurers are only now recognizing the value of machine learning. Insurance organizations are founded on data, and most have already digitized their existing records. Insurance is also a resource-intensive business: legions of claims processors, adjustors and assessors pore over the thousands (sometimes millions) of claims submitted each year. One would expect the insurance sector to be leading the charge toward machine learning. But it is not.

One of the biggest reasons insurers have been slow to adopt machine learning comes down to culture. Generally speaking, insurers are not viewed as early adopters of new technologies and approaches; they prefer to wait until a technology has matured through adoption in other sectors. However, with everyone from governments to banks now using machine learning algorithms, this barrier is quickly falling away.

The risk-averse culture of most insurers also dampens their willingness to experiment and, if necessary, fail in the search for new approaches. The problem is that machine learning depends on experimentation and learning from failure; organizations sometimes need to test dozens of algorithms before they find the one best suited to their purposes. Until "controlled failure" is no longer seen as a career-limiting move, insurance organizations will shy away from testing new approaches.

Insurance organizations also suffer from a cultural challenge common in information-intensive sectors: data hoarding. Until recently, common wisdom in the business world was that whoever held the information also held the power. Today, many organizations are starting to realize that it is actually those who share information who have the most power. As a result, they are now focused on moving toward a "data-driven" culture that rewards information sharing and collaboration and discourages hoarding.

Starting small and growing up

The first thing insurers should realize is that this is not an arms race. The winners will probably not be the organizations with the most data, nor will they likely be the ones that spend the most money on technology. Rather, they will be the ones that take a measured, scientific approach to building their machine learning capabilities and, over time, find new ways to bring machine learning into more and more aspects of their business.

Insurers may want to embrace the idea of starting small. Our experience and research suggest that, given the cultural and risk challenges facing the sector, insurers will want to start by developing a "proof of concept" model that can be tested and adapted in a risk-free environment. This not only gives the organization time to test and improve its algorithms, but also helps the designers understand exactly what data is required to generate the desired outcome.

Perhaps more importantly, starting with pilots and "proofs of concept" will give management and staff the time they need to get comfortable with the idea of sharing their work with machines. That transition will require executive-level support and sponsorship, as well as close attention to change management.

Take the next steps

Recognizing that machines excel at routine tasks and that algorithms learn over time, insurers will want to focus their early "proof of concept" efforts on processes or assessments that are well understood and of low value. The more decisions a model makes and the more data it learns from, the better prepared it will be to take on more complex tasks and decisions.

Only once the proof of concept has been thoroughly tested and its potential applications are understood should business leaders start developing the business case for industrialization. To succeed in the long term, that business case must include appropriate frameworks for governing, monitoring and managing the system.

While this may, on the surface, seem like just another IT implementation plan, machine learning should be championed not by IT but by the business itself. It is the business that must decide how and where machines will deliver the most value, and it is the business that owns the data and processes that machines will take over.

All hail, machines!

At KPMG, we have worked with a number of insurers over the past year to develop their "proof of concept" machine learning strategies, and we can say with absolute certainty that the Battle of Machines in the insurance sector has already started. We are equally convinced that those who remain on the sidelines will suffer the most as their competitors find new ways to harness machines for ever greater efficiency and value.

The bottom line is that the machines have arrived. Insurance executives should welcome them with open arms.
