Meet Richard Macias. He is 65 years old, born on Dec. 18, 1959. He lives at 2721 Prospect St. in Marlton, N.J.

Richard is 5-foot-7 and weighs 237 pounds. He works as a radar controller, and his mother's maiden name is Walters. Richard has an email address (richardtmacias@jourrapide.com), a phone number (856-596-####), and a Social Security number (136-18-####). He pays for most of his purchases with his Visa card (4532-3836-4287-####, expiring on 4/2028, with a security code of 056).

Richard is also completely made up.

It took less than a minute to create Richard Macias on a site that will deliver a spreadsheet of thousands of synthetic identities with detailed personal information directly to your inbox – for free. The website's FAQ asserts: "We do not condone, support, or encourage illegal activity of any kind." The site pulls information from publicly available databases and combines it at random. That randomness has a side effect, as the FAQ notes: "Odds are that the generated street address is not valid."

A different free artificial intelligence (AI) program generated a photo of Richard outside New Jersey's famous theme park, Six Flags Great Adventure. That took less than five minutes.

When he looked a little lonely, that same AI added a troupe of grandkids.

Richard's creators used their knowledge of the dark web and other nefarious corners of the internet to find illicit services that, for a small fee, could produce convincing fake documents such as driver's licenses, passports, bank statements, and medical records.

That effort to bring Richard to some form of life stopped short of committing actual fraud. But many don't stop.

The scale of the problem

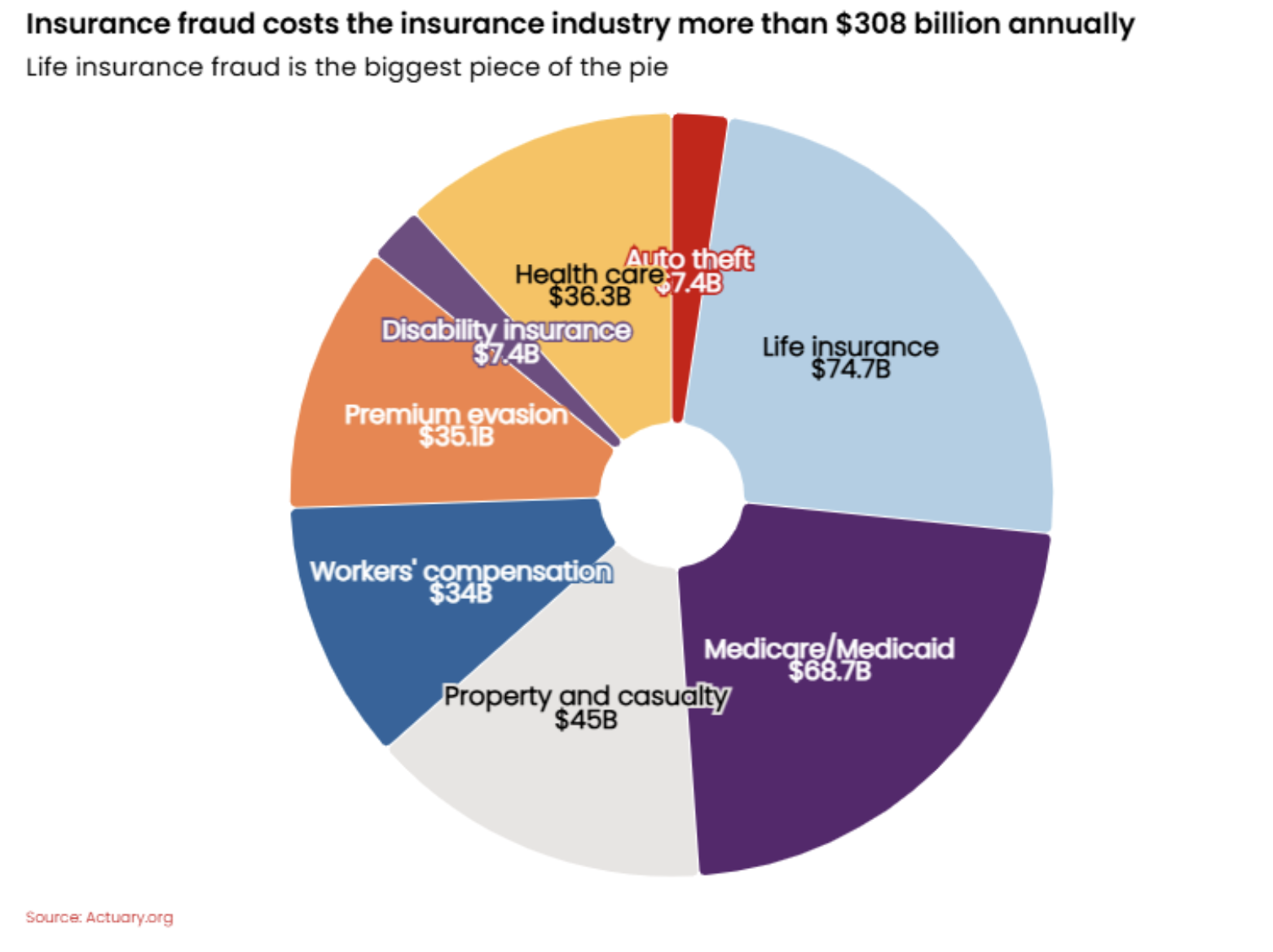

The life insurance industry loses an estimated $74.7 billion to fraud each year. The fastest growing form of this fraud involves synthetic identities – fictitious personas like Richard Macias built from a mix of real and fabricated information.

The cost of synthetic identity fraud in the financial industry has grown from approximately $8 billion in 2020 to more than $30 billion today, a nearly 300% increase in just five years. The Federal Reserve estimates that synthetic identity fraud now accounts for 80%-85% of all identity fraud cases.

Life insurance is a particular target for ne'er-do-wells using synthetic identities. Fraudsters have been known to secure life insurance policies on these fake identities and then "kill them off" to collect benefits. Children younger than 15 and elderly people are particularly vulnerable, because their Social Security numbers may go unused for years or are not actively monitored.

Connections to organized crime

These schemes are sometimes linked to organized crime. While specific statistics on fraudulent death claims tied to organized crime are limited, life insurance fraud represents a massive cost center, and experts warn that AI will make it easier and faster to create realistic fake identities – and harder for insurance companies to detect them.

For example, a recent case in India exposed a multi-state syndicate labeled an "insurance mafia" that took out fraudulent life insurance policies on terminally ill or deceased individuals. This group used fake identities and forged documents to siphon the equivalent of $64 million or more from major insurers.

The challenge with synthetic identity fraud in life insurance is that it can appear to be a victimless crime. Richard Macias and the thousands of other synthetic identities that apply for insurance products online are not real people, so creating their profiles appears, at least initially, to harm no one. This makes these schemes especially attractive to organized crime groups, which prefer to stay under the radar while raking in millions of dollars in ill-gotten gains.

Of course, these schemes are not victimless. Insurers recoup losses from fraudulent claims by raising premiums for everyone: the FBI estimates that insurance fraud (excluding health insurance) costs the average U.S. family $400 to $700 a year in increased premiums.

AI could make this kind of fraud easier to commit and more costly. But AI is also making it easier for insurers to fight back.

Building an AI defense system

Insurance companies can turn the same technological advances that bad actors are weaponizing to commit fraud into a highly advanced fraud-detection shield.

Insurers are using new technology, including AI, to fight fraud in numerous innovative and powerful ways. For example:

- Omnichannel verification – Vetting individuals across multiple channels (digital, phone, in-person) to confirm their authenticity.

- Machine learning – Analyzing patterns in claims and application data to detect anomalies indicative of synthetic identities or coordinated fraud schemes.

- Biometric authentication – Using facial recognition, voice analysis, and fingerprint scanning to verify the identity of policyholders and claimants.

- Cross-industry data sharing – Collaborating with other insurers, banks, and law enforcement to identify and track synthetic identities and organized crime activity.

- Continuous monitoring – Real-time, 24-hour monitoring of transactions and claims for suspicious activity, enabling faster detection and response.
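To make the machine learning and data-sharing items a little more concrete: synthetic identity rings can reuse the same phone numbers or addresses across many applications, so linking applications that share attributes is one common first step before any model is applied. The Python sketch below is a minimal illustration under stated assumptions, not any insurer's or vendor's actual method; the record fields (`phone`, `address`), the function name `find_shared_attribute_clusters`, and the sample data are all hypothetical.

```python
from collections import defaultdict

# Hypothetical application records; field names and values are illustrative only.
applications = [
    {"id": "A1", "phone": "555-0101", "address": "12 Elm St"},
    {"id": "A2", "phone": "555-0101", "address": "40 Oak Ave"},
    {"id": "A3", "phone": "555-0199", "address": "40 Oak Ave"},
    {"id": "A4", "phone": "555-0150", "address": "7 Pine Rd"},
]


def find_shared_attribute_clusters(apps, fields=("phone", "address"), min_size=2):
    """Group application IDs that are connected, directly or indirectly,
    by sharing any value in `fields` (a simple union-find link analysis)."""
    parent = {app["id"]: app["id"] for app in apps}

    def find(x):
        # Walk up to the cluster root, compressing the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Remember the first application seen with each (field, value) pair
    # and link every later application that shares it.
    first_seen = {}
    for app in apps:
        for field in fields:
            value = app.get(field)
            if value is None:
                continue
            key = (field, value)
            if key in first_seen:
                union(first_seen[key], app["id"])
            else:
                first_seen[key] = app["id"]

    # Collect applications by cluster root and keep only clusters large enough to review.
    clusters = defaultdict(list)
    for app in apps:
        clusters[find(app["id"])].append(app["id"])
    return [sorted(ids) for ids in clusters.values() if len(ids) >= min_size]


print(find_shared_attribute_clusters(applications))  # [['A1', 'A2', 'A3']]
```

Here A1 and A2 share a phone number and A2 and A3 share an address, so all three land in one cluster even though A1 and A3 have nothing in common directly. A cluster like this is only a lead for human review; shared addresses are also common among legitimate households, so such a signal would need to be weighed alongside others.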

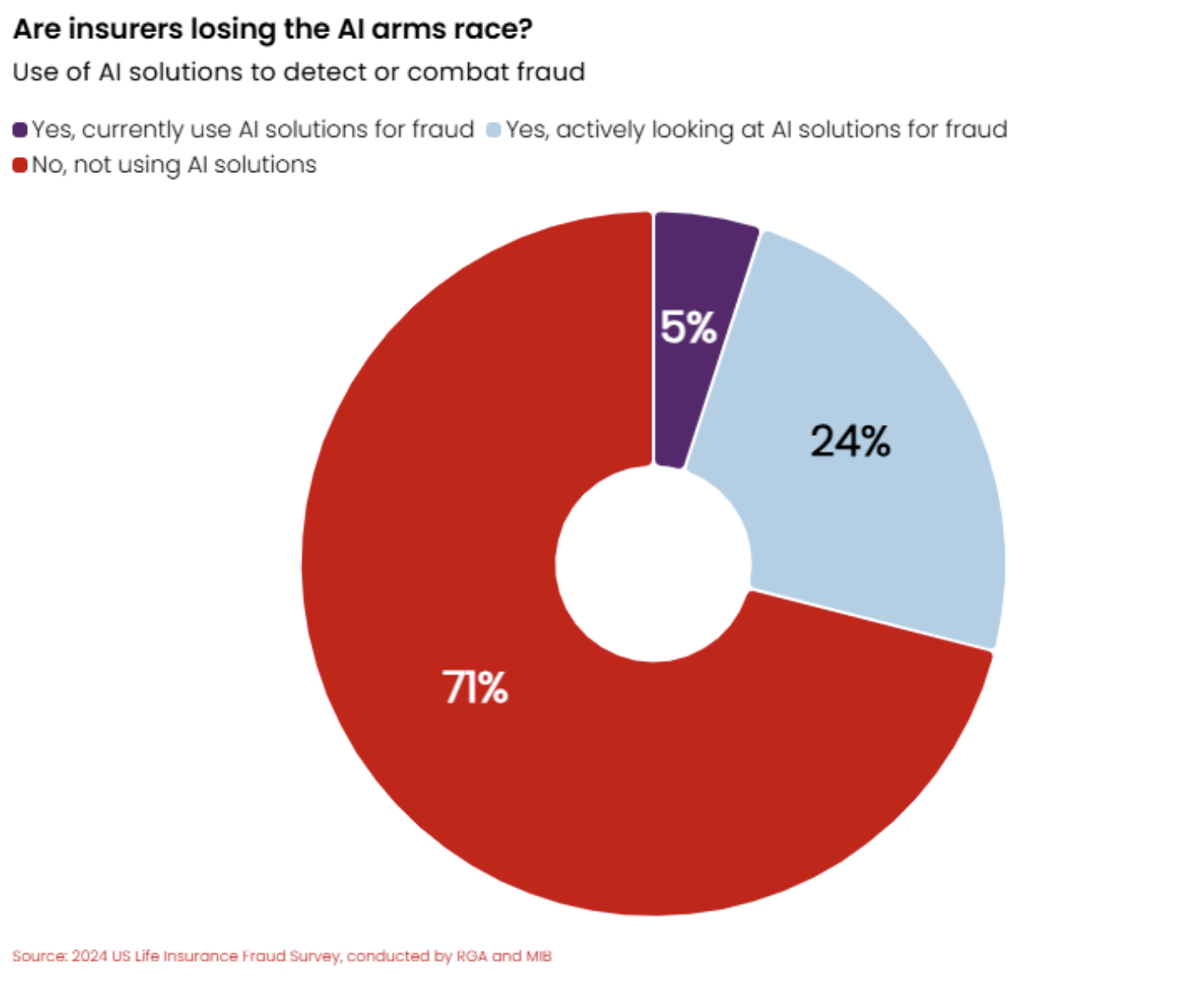

But fewer than one-third of respondents in the 2024 U.S. Life Insurance Fraud Survey, conducted by RGA and MIB, indicated they are using algorithms or analytics tools to flag questionable underwriting applications.

More than 70% of insurers said they are interested in using data analytics or technology-based tools to detect fraudulent applications, but only 5% of carriers currently use AI as part of the fight, and only 24% are actively exploring AI solutions.

Taken together, these findings suggest insurers may be falling behind in the AI arms race and ceding too much of the battlefield to those who would use AI for harm.

Conclusion: Eliminating Richard Macias

Proving Richard Macias to be fake is not difficult. A search of Google Maps reveals there is no Prospect Street in Marlton, N.J.; calling his phone number leads to the rapid busy signal of an out-of-service line; trying to buy groceries with his Visa card will leave bare cupboards.

That said, it is increasingly easy to create fake people with addresses, phone numbers, and credit cards that can pass for the real thing and be tapped to commit costly fraud that hurts insurance companies' reputations – and their customers' wallets.

The key for insurers is to use the very tools criminals weaponize to augment their employees' fraud-detection skills, creating a potent one-two counterpunch against illegal activity. One smart path forward is for insurers to partner with experts in technology-driven anti-fraud solutions to rapidly scale their fraud-fighting arsenal to meet the growing challenge.

You can register for the 13th Annual RGA Fraud Conference here: https://events.bizzabo.com/715418