Artificial intelligence (AI) has quickly become the productivity tool employees can't live without. From drafting emails to analyzing documents to using AI coding assistants, workers are bringing AI into their daily workflows.

There is a growing problem, however. Most companies struggle to understand and address the security risks of an expanding ecosystem of AI tools, so they ban most (if not all) of them outright and approve only the few (if any) they deem secure and compliant. Unfortunately, not all employees adhere to company policy, and many opt to use unapproved, unsanctioned tools – a practice referred to as "shadow AI."

Similar to shadow IT, shadow AI occurs when employees use external AI tools – generative AI, coding assistants, or analytics tools, for example – without the IT team's knowledge or oversight. Shadow AI is far riskier than shadow IT, because tools like ChatGPT and Claude, and open-source large language models (LLMs) like Llama, are easily accessible, easy to use, and hard to see. The result is an unseen risk surface that expands as unapproved AI usage grows.

Recent research underscores the dangers of shadow AI: 84% of AI tools have already experienced data breaches, and over half (51%) of tools have been the victims of credential theft. Additionally, a late 2024 survey of 7,000 employed workers by CybSafe and the National Cybersecurity Alliance (NCA) shows that about 38% of employees share confidential data with AI platforms without approval.

Each unsanctioned query or prompt could leak sensitive corporate data to malicious or unauthorized users, which shows how severe the risks of shadow AI are.

The Dangers Posed by Shadow AI

Companies are putting the brakes on AI tools unless those tools have built-in security mechanisms and are proven to comply with data protection laws and regulations like HIPAA and GDPR. Yet a broad ecosystem of unsanctioned tools remains available in the wild, and using them introduces several risks and consequences:

- Data leakage → confidential queries and context sent to insecure AI tools.

- Intellectual property loss → sensitive product or strategy details exposed.

- Compliance failures → regulated data (health, financial, personal) used in unapproved tools.

- Credential theft → with about half of AI tools having suffered credential theft, access controls alone are no guarantee.

Simply put: Shadow AI is not just a nuisance – it's an open door for attackers and a compliance nightmare waiting to happen.

Despite the risks, employees turn to unsanctioned AI for several reasons:

- Productivity pressure is real → workers want faster, smarter ways to get tasks done. AI feels like the only way to keep up.

- Corporate tools often lag behind → slow approvals or outdated platforms drive workers to "bring their own AI."

- They're unaware of the risks → employees may know they're using unsanctioned tools, but they may not understand the level of risk this introduces.

- AI feels intuitive and indispensable → once employees experience the value, they rarely go back.

Out of the Shadows

The way to mitigate shadow AI is not to ban AI tools. Banning AI is not only ineffective, it can introduce more risk by pushing employee usage into the shadows, beyond the reach of IT. And the truth is, employees won't stop using AI. But enterprises can do more to provide secure, sanctioned channels and safe AI tools that actually meet employee needs.

Doing so requires a few elements:

- Confidentiality built in → continuous encryption so neither the model nor the data ever appears in plaintext outside a trusted environment

- Enterprise-grade controls → visibility into how AI is used without stifling innovation

- Performance at scale → tools that are as fast and intuitive as the consumer alternatives employees are drawn to
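
To make the visibility element concrete, here is a minimal sketch of how an IT team might surface unsanctioned AI usage. It assumes proxy logs are already available as (user, host) pairs; the host lists, the sample log, and the function name `unsanctioned_ai_usage` are hypothetical placeholders, not any product's API.

```python
from collections import Counter

# Hypothetical lists for illustration; each company would maintain its own.
KNOWN_AI_HOSTS = {"chatgpt.com", "claude.ai", "ai.internal.example.com"}
SANCTIONED_AI_HOSTS = {"ai.internal.example.com"}


def unsanctioned_ai_usage(log_entries):
    """Count requests per (user, host) to known AI hosts that are not sanctioned."""
    counts = Counter()
    for user, host in log_entries:
        host = host.lower().strip()
        if host in KNOWN_AI_HOSTS and host not in SANCTIONED_AI_HOSTS:
            counts[(user, host)] += 1
    return counts


# Made-up sample data standing in for real proxy logs.
sample_log = [
    ("alice", "claude.ai"),
    ("alice", "claude.ai"),
    ("bob", "ai.internal.example.com"),
    ("carol", "example.org"),
]

report = unsanctioned_ai_usage(sample_log)
for (user, host), n in sorted(report.items()):
    print(f"{user} -> {host}: {n} request(s)")
```

A report like this is best treated as a signal of unmet demand rather than a blocklist: it shows which sanctioned alternatives employees actually need.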

The CISO's Opportunity: Safe, Compliant AI Adoption

Shadow AI may cause headaches and sleepless nights for CISOs, but there are tools that let their companies embrace the power of AI while protecting data and LLMs end to end.

Safe, secure AI adoption means securing both sides of the story:

- Models are encrypted → protecting IP, weights, and parameters from theft or tampering.

- Data is encrypted → ensuring training sets, queries, and outputs are never leaked in plaintext.

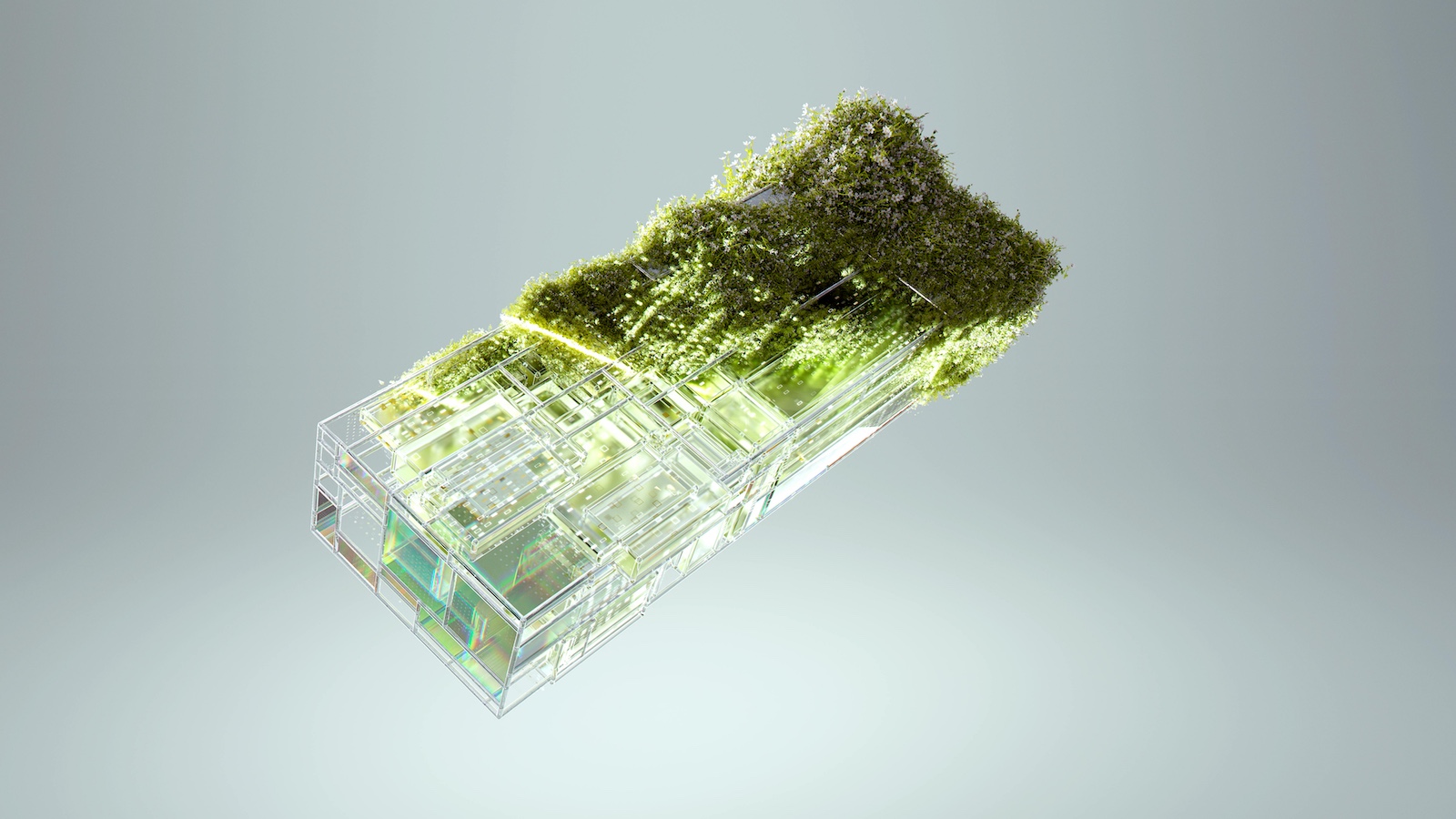

A stolen AI model is useless, because it can only function within the trusted execution environment (TEE). The encryption key, which exists only within the TEE, protects data by ensuring that only users with the key can view the results generated by each query.

A dual-layer approach that combines fully homomorphic encryption (FHE) with a TEE ensures that AI providers cannot reconstruct raw user inputs or outputs, even during sensitive transformations. The TEE briefly handles plaintext operations within its secure memory space and then immediately re-encrypts the results, while FHE keeps data encrypted during all operations performed outside it.
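
The idea of computing on data that stays encrypted can be illustrated with a much simpler scheme than FHE. The sketch below uses textbook Paillier encryption, which is only additively homomorphic: it is a stand-in chosen for brevity, not FHE and not the method any particular vendor uses. The primes are toy values and the function names are illustrative; none of this is secure for real use.

```python
import math
import secrets


def keygen(p=1789, q=1861):
    """Build a toy Paillier key pair. Real keys use primes of 1024+ bits."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    # mu is the modular inverse of L(g^lam mod n^2), where L(x) = (x - 1) // n.
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """Encrypt integer m (0 <= m < n) with fresh randomness r."""
    n, g = pub
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(pub, priv, c):
    """Recover the plaintext from ciphertext c using the private key."""
    n, _ = pub
    lam, mu = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    n, _ = pub
    return (c1 * c2) % (n * n)


pub, priv = keygen()
c_sum = add_encrypted(pub, encrypt(pub, 1200), encrypt(pub, 345))
print(decrypt(pub, priv, c_sum))  # prints 1545
```

The party that calls `add_encrypted` never sees 1200, 345, or their sum; only the holder of the private key can decrypt the result. FHE extends this property from addition to arbitrary computation.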

FHE can be deployed to protect any number of AI tools, so companies can adopt them with confidence that data will not leak and regulatory compliance will not be jeopardized.

The result: enterprises regain control. Employees gain productivity. AI adoption is embraced with confidence and peace of mind.

Confidential AI Makes Shadow Usage a Thing of the Past

Shadow AI exists because employees are desperate for better tools. The only sustainable way to combat it is to offer safe, powerful alternatives that protect both corporate data and AI models.