Many insurers are stuck in AI pilot purgatory, where promising experiments rarely scale into everyday operations. The models perform adequately, and the business cases hold up. The primary barrier is data architecture: systems built for reporting and analytics cannot support the demands of production AI.

Core insurance decisions depend on synthesizing information from multiple sources. Underwriters assess application data, loss histories, external data, and regulations to evaluate risk. Claims handlers review photos, repair estimates, medical notes, and witness statements to settle cases. Investigators pull together scattered, sometimes conflicting information to pursue recovery. Transforming this expertise into AI capability requires a data architecture that supports the learning, generation, and context requirements of AI.

Why traditional architectures fall short

Organizations have long separated day-to-day transaction systems from analytical warehouses. This division supported dashboards and compliance reporting effectively. However, AI blurs these boundaries because it learns from historical patterns to make real-time operational decisions.

When AI evaluates a new claim, it needs current policy data, historical loss patterns, regulatory requirements, and market conditions simultaneously. In other words, it needs real-time transactional data integrated with comprehensive historical context, which is exactly the combination the traditional split keeps apart.

Unstructured documents create an even larger hurdle. Applications, claims notes, legal filings, and reports hold the most valuable information for decision making. Many architectures treat these documents as a mere storage challenge. AI needs to understand them at a much deeper level: identifying key elements, mapping connections, and extracting meaning in real time alongside structured records.

This matters most for complex workflows. When AI processes legal documents, filings, or investigation files, it can handle work that once demanded years of specialist knowledge. Document intelligence must sit at the same level as core transactional and analytical data in the architecture to enable this.

What AI-ready data architecture requires

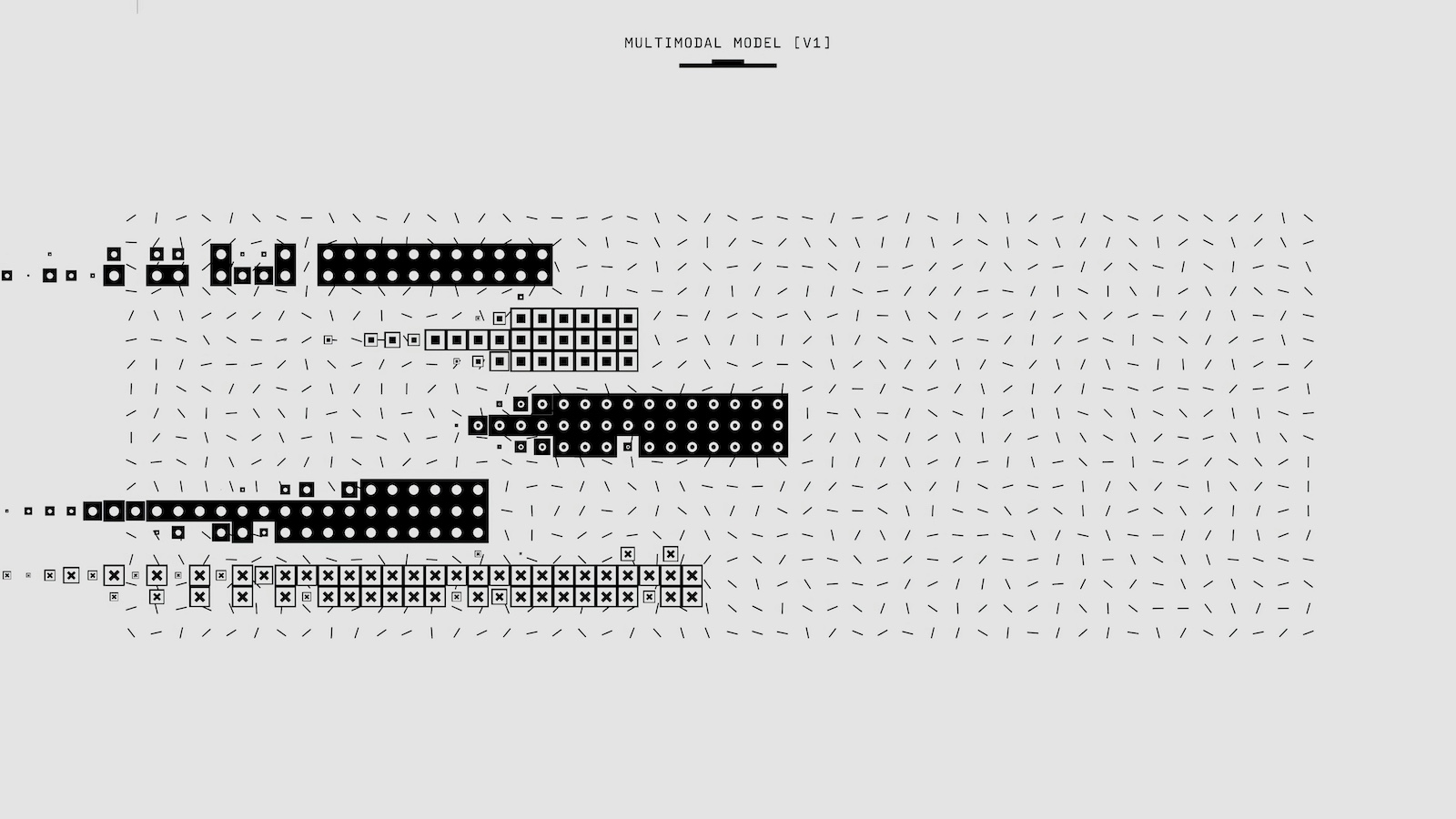

Getting data ready for AI means building four specific capabilities that work together. Each closes a critical gap between how traditional systems operate and what AI applications need to work in production.

1. Bridging architecture and data silos

AI applications need access to policy systems, claims platforms, finance, and external data without long delays. This means real-time operational data alongside historical context, structured tables alongside document content, and internal data alongside external feeds. It does not mean consolidating everything into one repository or platform. Instead, focus on connecting data where it lives, with clear tracking of its path and origins. This architecture enables AI to navigate existing systems securely with proper lineage and control.
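As a rough illustration of connecting data where it lives, the sketch below queries each source in place and records lineage for every lookup instead of copying data into a central store. The names `LineageRecord`, `FederatedView`, and `build_view`, along with the two in-memory "systems", are hypothetical stand-ins rather than a reference design.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class LineageRecord:
    """Where a value came from and when it was read (hypothetical schema)."""
    source: str        # system name, e.g. "policy_admin"
    key: str           # identifier used for the lookup
    retrieved_at: str  # ISO-8601 UTC timestamp


@dataclass
class FederatedView:
    """Combined view of one claim plus lineage for every source consulted."""
    data: Dict[str, Any] = field(default_factory=dict)
    lineage: List[LineageRecord] = field(default_factory=list)


def build_view(claim_id: str, sources: Dict[str, Callable[[str], Any]]) -> FederatedView:
    """Query each source in place and note its origin alongside the result."""
    view = FederatedView()
    for name, lookup in sources.items():
        view.data[name] = lookup(claim_id)
        view.lineage.append(
            LineageRecord(name, claim_id, datetime.now(timezone.utc).isoformat())
        )
    return view


# In-memory stand-ins; real sources would be API or database calls.
policy_system = {"C-100": {"policy_id": "P-9", "status": "active"}}
claims_history = {"C-100": [{"year": 2021, "paid": 1200.0}]}

view = build_view(
    "C-100",
    {"policy_admin": policy_system.get, "claims_history": claims_history.get},
)
```

Each entry in `view.lineage` can then be logged or audited, so a downstream decision can be traced back to the systems that informed it.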

2. Capturing and using expert knowledge

Every time an underwriter overrides an AI suggestion or a claims handler adjusts an estimate, that action contains valuable knowledge. The capability to capture, curate, and organize expert feedback into training datasets separates competitive AI from generic tools. Raw corrections alone aren't sufficient. The architecture must support structured approaches that validate expert feedback, enrich it with context and reasoning, and organize it into training datasets that prevent bias while maximizing learning signal.
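One possible shape for this capture step is sketched below: an override is stored with its reasoning, validated, and only then turned into a training row. The `ExpertOverride` fields and the validation rule are assumptions made for illustration. Bias controls, which would need a real sampling and review strategy, are not covered.

```python
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class ExpertOverride:
    """A single expert correction to a model output (hypothetical schema)."""
    case_id: str
    model_value: float
    expert_value: float
    expert_role: str
    reason: Optional[str] = None  # the expert's stated reasoning


def is_valid(override: ExpertOverride) -> bool:
    """Assumed rule: keep only real changes that come with a stated reason."""
    has_reason = bool(override.reason and override.reason.strip())
    return has_reason and override.expert_value != override.model_value


def to_training_rows(overrides: List[ExpertOverride]) -> List[Dict]:
    """Drop unvalidated corrections and enrich the rest with the adjustment size."""
    rows = []
    for override in overrides:
        if not is_valid(override):
            continue
        row = asdict(override)
        row["delta"] = override.expert_value - override.model_value
        rows.append(row)
    return rows


overrides = [
    ExpertOverride("CLM-1", 1500.0, 1850.0, "claims_handler",
                   "Repair estimate omitted frame damage"),
    ExpertOverride("CLM-2", 900.0, 1000.0, "claims_handler"),  # no reason given
]
rows = to_training_rows(overrides)
```

Here the second override is excluded because it carries no reasoning, which reflects the point that raw corrections alone are not sufficient.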

3. Managing context data for AI

Experienced underwriters and claims adjusters don't evaluate evidence in isolation. They build a growing understanding as new information arrives, drawing inferences, applying rules, and tracking reasoning. AI needs the same ability: to maintain and evolve understanding throughout a process. Context is the accumulated understanding that an AI system builds, stores, and shares as it works through that process. The architecture should treat context as a managed data type with its own lifecycle, access controls, and transformation rules.
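To make "context as a managed data type" more concrete, here is a minimal sketch with a simple lifecycle (open, then closed), role-based read access, and a history of how the understanding evolved. The class name `ProcessContext`, its fields, and the role names are hypothetical, and a production system would need persistence and far richer controls.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set, Tuple


@dataclass
class ProcessContext:
    """Accumulated understanding for one process, e.g. a single claim."""
    process_id: str
    readers: Set[str]                                   # roles allowed to read
    facts: Dict[str, Any] = field(default_factory=dict)
    history: List[Tuple[str, Any, str]] = field(default_factory=list)
    closed: bool = False

    def update(self, key: str, value: Any, reasoning: str) -> None:
        """Record a new or revised fact together with the reasoning behind it."""
        if self.closed:
            raise RuntimeError("context is closed and can no longer change")
        self.facts[key] = value
        self.history.append((key, value, reasoning))

    def read(self, role: str) -> Dict[str, Any]:
        """Return a copy of the current facts if the role has access."""
        if role not in self.readers:
            raise PermissionError(f"role {role!r} may not read this context")
        return dict(self.facts)

    def close(self) -> None:
        """End of lifecycle: the context becomes read-only."""
        self.closed = True


ctx = ProcessContext("CLM-1", readers={"claims_handler", "fraud_model"})
ctx.update("liability", "disputed", "Witness statements conflict")
ctx.update("liability", "insured at fault", "Dashcam footage received")
ctx.close()
current = ctx.read("claims_handler")
```

The `history` list keeps both liability assessments, so the path from "disputed" to "insured at fault" stays reviewable after the context is closed.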

4. Creating data environments for AI development and testing

Moving AI from pilots to production deployment requires infrastructure that can provision realistic environments on demand. The data architecture must support replicating production data with appropriate privacy controls and generating synthetic data for edge cases. As AI programs scale across use cases and product lines, the ability to spin up multiple isolated environments lets teams work in parallel without interference. Provisioning environments quickly with realistic data, then tearing them down when complete, becomes critical infrastructure for scaling AI operations.
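The provision-and-tear-down pattern can be sketched with a context manager that builds a temporary dataset from masked production records plus synthetic edge cases, then deletes it on exit. Everything here is illustrative: the function names are hypothetical, and hashing a name is only simple pseudonymization, not a complete privacy control.

```python
import hashlib
import json
import random
import tempfile
from contextlib import contextmanager
from pathlib import Path


def mask(record: dict) -> dict:
    """Replace the name with a short hash (illustrative pseudonymization only)."""
    masked = dict(record)
    masked["name"] = hashlib.sha256(record["name"].encode()).hexdigest()[:12]
    return masked


def synthetic_edge_cases(n: int, seed: int = 0) -> list:
    """Generate unusually large claims that production data rarely contains."""
    rng = random.Random(seed)
    return [
        {"name": f"synthetic-{i}",
         "claim_amount": round(rng.uniform(250_000, 1_000_000), 2)}
        for i in range(n)
    ]


@contextmanager
def ephemeral_environment(production_records: list, n_synthetic: int = 2):
    """Provision an isolated dataset on disk and remove it when the block exits."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "claims.json"
        rows = [mask(r) for r in production_records] + synthetic_edge_cases(n_synthetic)
        path.write_text(json.dumps(rows))
        yield path


prod = [{"name": "Jane Doe", "claim_amount": 1800.0}]
with ephemeral_environment(prod) as data_path:
    rows = json.loads(data_path.read_text())
    existed_during_test = data_path.exists()
```

Because each call creates its own temporary directory, several teams could run this in parallel without touching one another's data, which is the isolation property the paragraph above describes.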

Competitive reality

AI will transform insurance operations. Organizations that address these data foundations build compounding advantages: faster decisions, greater accuracy, reduced leakage, and teams freed for higher-impact work.

Start with use cases where the business case is clearest. Focus on the data and capabilities those require, and build incrementally on current investments.